The Infrastructure Reality Behind AI Hype: What the 2026 CNCF Survey Reveals (And What It Doesn't)

The January 2026 CNCF Annual Cloud Native Survey landed this month. Buried beneath the expected headlines about Kubernetes dominance is something far more interesting: a comprehensive map of where enterprise infrastructure investment is actually going, and where it's not.

The survey, based on 628 respondents across multiple industries and geographies, confirms what many of us building infrastructure have observed. The AI conversation has fundamentally shifted from "who can train the best model" to "who can operationalize inference at scale." This shift has profound implications for where value will be created and captured over the next several years.

The Inference Economy Is Here

Let's start with the most striking finding: 52% of organizations don't build or train their own AI models. They're consumers. The remaining organizations that do "train" are predominantly fine-tuning existing models rather than building from scratch.

This shouldn't surprise anyone who's been paying attention, but it should reframe how we think about AI infrastructure investment. The report's authors put it well: "If we cut through the hype of chatbots and agents, we can clearly see that we will need to greatly decrease the difficulty of serving AI workloads while massively increasing the amount of inference capacity available across the industry."

Take a moment and let that sink in. The infrastructure challenge isn't about building bigger GPU clusters for training. It's about the unglamorous work of routing, caching, rate limiting, and managing inference traffic at production scale.
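To make "rate limiting" concrete, here is a minimal token-bucket sketch. It is one common way to cap inference traffic while still allowing short bursts; it is not something the survey prescribes. The class name, parameters, and injectable clock are our own illustrative choices.

```python
import time
from typing import Callable


class TokenBucket:
    """Hypothetical limiter: bursts up to `capacity`, refills at `rate` tokens/second."""

    def __init__(
        self,
        rate: float,
        capacity: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock          # injectable so the limiter can be tested without sleeping
        self._tokens = capacity      # start with a full bucket
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend `cost` tokens if the request fits, else False."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that has passed, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

In an inference gateway, `cost` could be a per-request token estimate rather than a flat 1.0, so that expensive prompts drain the bucket faster than cheap ones.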

Consider the deployment frequency data: 47% of organizations deploy AI models only occasionally (a few times per year), and just 7% manage daily deployments. The gap between "we have a model" and "we have a production AI system" remains enormous. That gap is an infrastructure problem, not an algorithm problem.

There's also a question the survey doesn't ask. The 37% of organizations using managed generative AI APIs are sending inference traffic somewhere. Where? Under what data residency constraints? With what model governance guarantees? As the EU AI Act takes effect and regulated industries face increasing scrutiny over AI decision-making, the sovereignty dimension of inference infrastructure will become harder to ignore.

Kubernetes Won. Now What?

The survey confirms what we've observed in our customer base: 82% of container users now run Kubernetes in production, up from 66% in 2023. The report aptly describes Kubernetes as "boring," and correctly notes that boring is the highest compliment in infrastructure.

But here's the more interesting number: 66% of organizations are using Kubernetes specifically to host their generative AI workloads. Kubernetes has evolved from "container orchestrator" to "AI infrastructure platform." The report notes that projects like Kubeflow provide end-to-end ML workflows while KServe handles model serving at scale.

This convergence creates a clear market reality: whatever you build for AI workloads needs to be Kubernetes-native. Not Kubernetes-compatible. Not Kubernetes-adjacent. Native. The organizations that have achieved "true MLOps maturity" (the 23% running all inference workloads on Kubernetes) have done so by integrating AI into their existing CI/CD pipelines, GitOps workflows, and observability stacks.

Security Hasn't Gone Away. Complexity Has Caught Up.

The survey asked respondents about their top challenges in deploying containers. "Cultural changes with the development team" came in at #1 with 47%, followed by "lack of training" (36%) and "security" (36%).

That security figure deserves attention. In 2023, security was the top challenge. It hasn't become less important. If anything, it's become more critical as AI workloads introduce new attack surfaces. What's changed is that platform engineering complexity has risen to match it as an operational bottleneck.

The report's authors frame the cultural challenge as evidence for Platform Engineering: building standardized platforms with "paved roads, sensible defaults, and clear guardrails." That framing is correct, but incomplete. The cultural resistance the survey identifies isn't irrational. Developers worry that containers add unnecessary complexity for simple applications. Operations teams worry about troubleshooting containerized systems. Management questions whether investments are distracting from feature delivery.

Now, layer AI agents on top of this. The rise of the Model Context Protocol (MCP) is enabling AI systems to call external tools, access databases, and take autonomous actions. Each of those capabilities is a new permission surface. Each is a new governance challenge. Organizations struggling with basic container deployment complexity aren't ready for agent-to-agent communication patterns, where security and access control become far harder to reason about.
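One way to picture the "permission surface" is as an explicit, deny-by-default allowlist of which agent may call which tool, with every decision recorded. The sketch below is deliberately generic: it does not use any real MCP SDK, and `ToolCall`, `ToolPolicy`, and the agent and tool names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolCall:
    """A request by an agent to invoke a tool (hypothetical shape, not an MCP type)."""
    agent: str
    tool: str


@dataclass
class ToolPolicy:
    """Deny-by-default allowlist: agent name -> set of tools it may call."""
    allowed: dict[str, frozenset[str]]
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def authorize(self, call: ToolCall) -> bool:
        # Unknown agents get an empty allowlist, so they are always denied.
        permitted = call.tool in self.allowed.get(call.agent, frozenset())
        # Record every decision, allowed or not, so it can be reviewed later.
        self.audit_log.append((call.agent, call.tool, permitted))
        return permitted


policy = ToolPolicy(allowed={"support-bot": frozenset({"search_docs"})})
policy.authorize(ToolCall("support-bot", "search_docs"))   # permitted
policy.authorize(ToolCall("support-bot", "drop_table"))    # denied: not on the allowlist
policy.authorize(ToolCall("unknown-agent", "search_docs")) # denied: unknown agent
```

Even in this toy form, the allowlist grows with every agent-tool pair, which is why the problem gets harder as agents start calling other agents.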

The security challenge hasn't diminished. It's compounding. And it's compounding on top of platform engineering foundations that many organizations haven't solidified.

GitOps as the Maturity Marker

The survey segments organizations into four maturity profiles: explorers (8%), adopters (32%), practitioners (34%), and innovators (25%). The progression between these stages correlates strongly with specific technology and practice adoption.

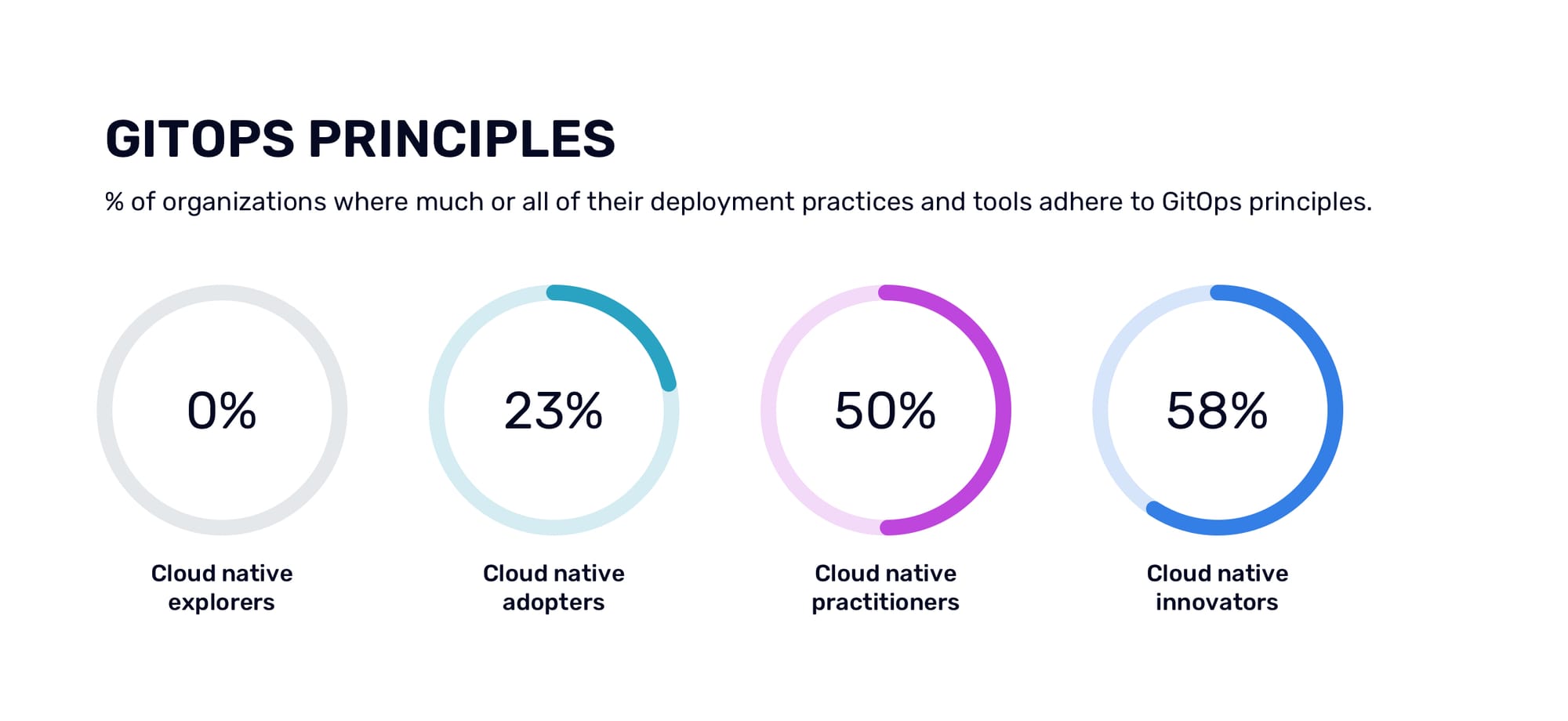

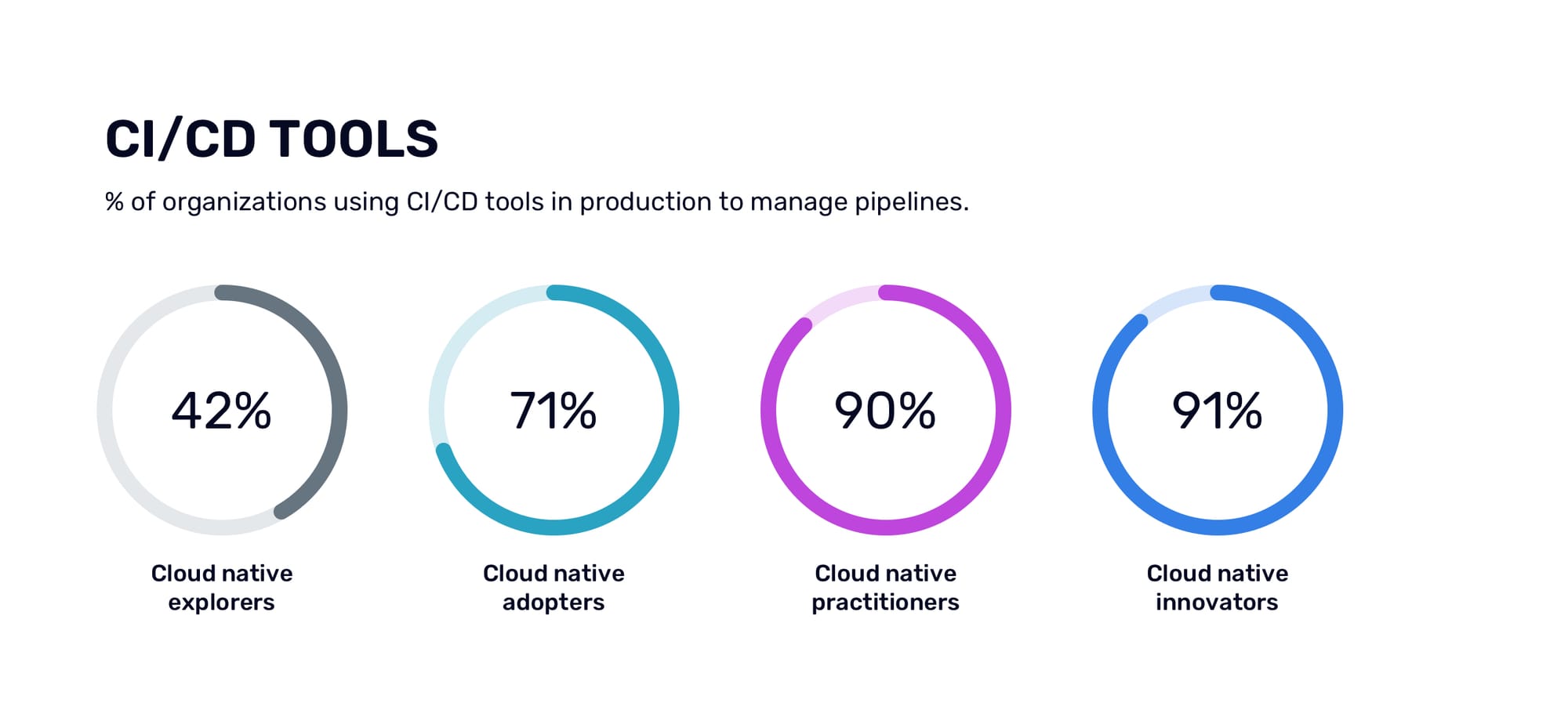

The most telling correlation is GitOps adoption: 0% of explorers have implemented GitOps, while 58% of innovators run GitOps-compliant deployments. CI/CD shows a similar pattern: 42% of explorers use it, versus 91% of innovators.

This isn't just correlation. GitOps requires, and forces, a level of operational discipline that defines mature cloud native organizations: declarative configurations, version control for infrastructure, automated synchronization, and clear audit trails. That last point matters enormously for security. You can't secure what you can't audit. You can't audit what isn't versioned. Organizations that can't achieve this discipline will struggle with AI workloads, which require even more rigorous deployment practices and access controls.
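The "automated synchronization" piece is easiest to see as a reconcile loop: compare the state declared in Git with what is actually running, then apply the difference. The sketch below models both states as plain dictionaries. It is an illustration of the idea, not how any particular GitOps tool is implemented, and the resource names are made up.

```python
import copy

State = dict[str, dict]  # resource name -> spec (a stand-in for real manifests)


def plan(desired: State, actual: State) -> dict[str, list[str]]:
    """Diff the declared state (from Git) against what is actually running."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            name
            for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual.keys() - desired.keys()),
    }


def reconcile(desired: State, actual: State) -> dict[str, list[str]]:
    """Apply the plan in place and return it so it can be logged as an audit record."""
    actions = plan(desired, actual)
    for name in actions["create"] + actions["update"]:
        actual[name] = copy.deepcopy(desired[name])
    for name in actions["delete"]:
        del actual[name]
    return actions
```

Because the desired state lives in version control, the returned plan doubles as an audit record: each change to the running system maps back to a commit.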

The survey also reveals that innovators operate at a fundamentally different velocity. Among innovators, 74% check in code multiple times daily (versus 35% of explorers), 41% run daily releases (versus 12% of explorers), and 59% automate more than 60% of their deployments (versus 6% of explorers).

If your infrastructure layer requires ClickOps, you're building for yesterday's market. And if your security model depends on manual reviews that can't keep pace with automated deployments, you're building a compliance gap that will only widen.

The Sustainability Warning

Buried in the report is a warning that deserves more attention. A September 2025 open letter from open source infrastructure stewards warned that critical systems operate under "a dangerously fragile premise," relying on goodwill rather than sustainable funding models.

The letter specifically calls out AI/ML workloads as drivers of "machine-driven, often wasteful automated usage" that strains open source infrastructure. The report notes that "commercial-scale workloads often run without caching, throttling, or even awareness of the strain they impose."

This should concern anyone building AI infrastructure. The naive approach (more API calls, more requests, more brute-force retry logic) is technically simple and operationally catastrophic. Organizations that build efficiency into their AI infrastructure from day one will have both cost and sustainability advantages.
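As a contrast to brute-force retries, here is a minimal sketch of two inexpensive habits: cache repeated requests, and retry failures with capped exponential backoff plus jitter. The `CachedInference` wrapper and its parameters are hypothetical, and `backend` stands in for whatever client actually calls the model.

```python
import random
import time
from typing import Callable, Optional


def call_with_retry(
    fn: Callable[[], str],
    *,
    attempts: int = 5,
    base: float = 0.5,
    cap: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> str:
    """Retry `fn` with capped exponential backoff and full jitter."""
    rng = rng or random.Random()
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:  # real code should catch only known-transient errors
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait a random time in [0, min(cap, base * 2**attempt)] so that
            # many clients do not retry in lockstep.
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))
    raise ValueError("attempts must be at least 1")


class CachedInference:
    """Hypothetical wrapper: answers repeated prompts from memory."""

    def __init__(self, backend: Callable[[str], str], **retry_options) -> None:
        self._backend = backend
        self._retry_options = retry_options
        self._cache: dict[str, str] = {}

    def complete(self, prompt: str) -> str:
        if prompt not in self._cache:
            self._cache[prompt] = call_with_retry(
                lambda: self._backend(prompt), **self._retry_options
            )
        return self._cache[prompt]
```

An exact-match, unbounded cache like this is only a starting point. A production system would need eviction, expiry, and a decision about which prompts are safe to cache at all.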

What This Means for Infrastructure Strategy

The CNCF survey paints a clear picture of where infrastructure investment should flow:

- Inference over training. A slim majority of organizations (52%) are consumers of models, not producers, and most of the rest are fine-tuning rather than training from scratch. Infrastructure that optimizes inference through intelligent caching, efficient routing, and scale-to-zero capabilities will capture more value than training-focused solutions.

- Kubernetes-native is table stakes. With 66% of organizations hosting their generative AI workloads on Kubernetes, solutions that require separate orchestration or bolt-on integrations will face adoption friction.

- Security and simplicity aren't trade-offs. When "cultural changes" and "security" are both top-three challenges, infrastructure that reduces cognitive complexity while strengthening access controls has a real moat. This becomes even more critical as MCP-enabled agents expand the permission surface.

- GitOps compatibility is non-negotiable. The correlation between GitOps adoption and organizational maturity is too strong to ignore. Any infrastructure that requires manual configuration or UI-driven workflows is building for a shrinking market segment. More importantly, GitOps provides the audit trail that security and compliance teams increasingly demand.

- Efficiency matters more than we admit. The sustainability concerns raised in the report aren't just ethical considerations. They're operational realities. Inefficient AI infrastructure is expensive AI infrastructure.

Questions for the Road Ahead

The survey opens as many questions as it answers.

- As organizations move from occasional AI deployments to production-scale inference, how are they governing agentic workflows? The MCP ecosystem is evolving rapidly, but we have no baseline data on adoption patterns or access control approaches.

- Similarly, the Kubernetes-centric view is appropriate for CNCF, but enterprises increasingly manage traffic across VMs, containers, and serverless simultaneously. How are organizations handling that complexity?

- And at the most fundamental level: how is traffic actually flowing through these systems? The survey covers orchestration and observability well, but the routing and governance layer between services remains unexplored.

These aren't criticisms. They're signs of a maturing ecosystem where the interesting questions keep evolving.

The Bottom Line

The CNCF survey confirms that we're past the "should we do AI" phase and deep into the "how do we operationalize AI" phase. The winners won't be determined by who has the best model. Foundation model providers have commoditized that race. The winners will be determined by who can move inference workloads from demo to production at scale, with the governance, security, and reliability that enterprises require.

That's an infrastructure problem. And infrastructure problems are what we solve.