Exploring Containerized Applications: How They Work and Differ from Virtual Machines

Containerization technology has rapidly changed the software landscape and how applications are developed, tested, and run in the cloud.

Although containers currently dominate the tech industry, containerization is still a complex topic to grasp. This article explores what containerized applications are and how they work, their advantages and challenges, and how they differ from virtual machines.

What are containerized applications?

Containerized applications are packaged with all their necessary components and dependencies, including libraries and frameworks. Because they run isolated in containers and do not depend on what is installed on the host, they are highly portable. In essence, containers are complete, portable computing environments that can run on any infrastructure that provides a compatible operating system and container runtime. Another characteristic of containerized applications is that multiple containers share the host's kernel and computational resources, making them a more resource-efficient and lightweight alternative to virtual machines (VMs).

How do containerized applications work?

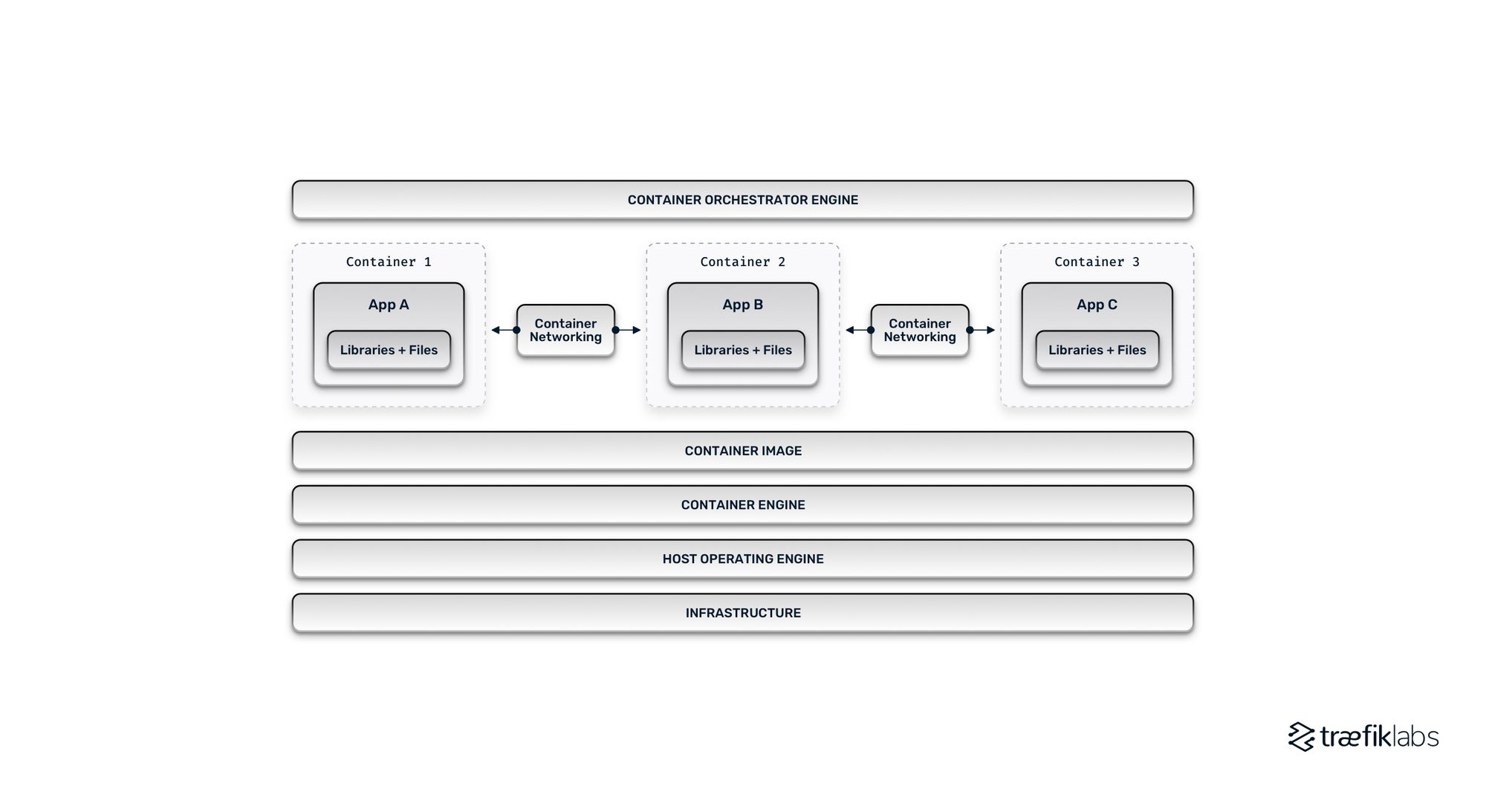

Containerized applications are executable packages of software that run on a host operating system (OS) that can support multiple containers. Containerized apps are part of a multi-layer architecture and depend on several components and tools. Let’s take a look at the most critical pieces.

Infrastructure: At the very bottom of a container environment, there’s the necessary infrastructure hardware that includes the CPU, disk storage, and the network interface card (NIC), which provides the environment with a dedicated, consistent connection to a network.

Operating system: On top of the infrastructure, there’s the host operating system and its kernel, which acts as a bridge and facilitates communication between the software running on the OS and the infrastructure hardware.

Container engine: The container engine is the piece of software that accepts user requests for building, running, and managing containers via command-line tools and/or graphical user interfaces. The core component of the container engine is the container runtime, which creates the standard platform on which applications run. The container runtime also handles the storage needs of containers on the local system. A few examples of popular container engines include Docker Engine, containerd, rkt, and LXD.

Container image: Every container runs processes in isolation from the rest of the environment. However, when a container is not running, it only exists as a container image. The container image includes the application's code along with all its files and dependencies. The moment a containerized application starts running, the contents of the container image are used to create and launch a container instance.

Container registry: Container images are generally created using the industry-standard Open Container Initiative (OCI) format that maximizes compatibility and promotes sharing. Images are stored and shared using a public or private repository called a container registry that can be directly connected to container orchestration platforms (see below).

Container networking: Container networking allows communication between containers. While applications within a container can access and modify files and resources within that given container, the networking stack facilitates the communication between multiple containers. The most common container networking tools are service meshes and ingress controllers.

Container orchestration: Sitting at the top of the containerized applications environment, container orchestration refers to the automation of the operational tasks required to deploy, run, and manage containers. Among the most popular container orchestration platforms are Kubernetes, OpenShift, HashiCorp Nomad, Docker Swarm, and Rancher.
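To make the relationship between registry, image, engine, and container instance more concrete, here is a minimal Python sketch. It is only a toy model: the `Registry`, `Engine`, `Image`, and `Container` classes, the `web:1.0` image name, and the `/app/config` path are all hypothetical and do not correspond to any real container API. It also assumes a simplification in which each instance keeps its own writable state on top of the image's read-only contents.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass(frozen=True)
class Image:
    """Immutable template: application files plus dependencies (toy model)."""
    name: str
    files: tuple  # read-only (path, content) pairs shared by every instance


@dataclass
class Container:
    """A running instance: the image's contents plus its own writable state."""
    container_id: int
    image: Image
    writable: dict = field(default_factory=dict)

    def write(self, path, data):
        # Changes land in this instance only; the image is never modified.
        self.writable[path] = data

    def read(self, path):
        # Prefer this instance's own changes, then fall back to the image.
        if path in self.writable:
            return self.writable[path]
        return dict(self.image.files)[path]


class Registry:
    """Hypothetical in-memory stand-in for a container registry."""

    def __init__(self):
        self._images = {}

    def push(self, image):
        self._images[image.name] = image

    def pull(self, name):
        return self._images[name]


class Engine:
    """Hypothetical stand-in for a container engine."""

    def __init__(self, registry):
        self._registry = registry
        self._ids = count(1)

    def run(self, image_name):
        # Pull the image, then launch a fresh instance from it.
        image = self._registry.pull(image_name)
        return Container(next(self._ids), image)


registry = Registry()
registry.push(Image("web:1.0", (("/app/config", "debug=false"),)))
engine = Engine(registry)

a = engine.run("web:1.0")
b = engine.run("web:1.0")
a.write("/app/config", "debug=true")  # affects instance a only

print(a.read("/app/config"), b.read("/app/config"))
```

Both instances start from the same image, yet a change made inside one of them is invisible to the other and leaves the image untouched, which is the property that makes images reusable and shareable through a registry.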

Containers vs. virtual machines

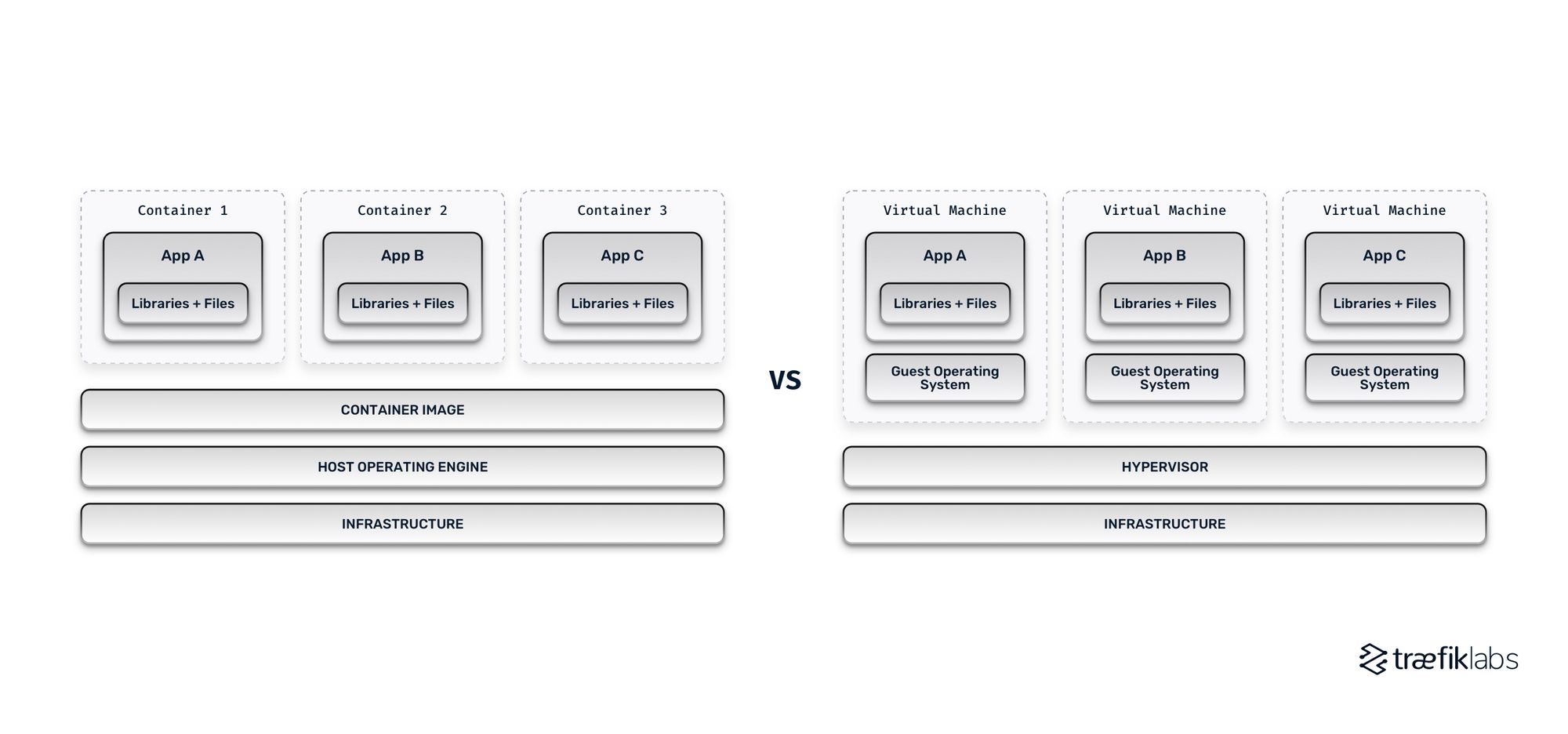

Virtual machines and containers are both forms of virtualization, but they take different approaches. When multiple containers run on one host, they share the same OS kernel and the same resources. On the other hand, when multiple VMs run on the same host, they all require their own copy of the OS, alongside their files, libraries, and other dependencies. As a result, containerized applications are significantly more lightweight than VMs: a container image is often just a few tens of MBs in size, while a virtual machine, including its copy of the OS, the app, libraries, files, and dependencies, can add up to tens of GBs in size.
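A quick back-of-the-envelope calculation shows how this difference adds up. The sketch below is illustrative only: the 20 GB OS size, the 50 MB application size, and the count of ten workloads are assumed values chosen to match the "tens of GBs" and "tens of MBs" orders of magnitude mentioned above, and the model counts disk footprint only.

```python
MB = 1
GB = 1024 * MB


def vm_footprint(n, os_size, app_size):
    # Every VM carries its own copy of the OS plus the application.
    return n * (os_size + app_size)


def container_footprint(n, os_size, app_size):
    # Containers share the single host OS; only the application part repeats.
    return os_size + n * app_size


n = 10               # assumed number of workloads
os_size = 20 * GB    # assumed size of one OS copy
app_size = 50 * MB   # assumed size of one application with its dependencies

vms = vm_footprint(n, os_size, app_size)
containers = container_footprint(n, os_size, app_size)

print(f"VMs:        {vms / GB:.1f} GB")
print(f"Containers: {containers / GB:.1f} GB")
```

Under these assumptions, ten VMs need roughly 200 GB, while ten containers plus the one shared host OS need roughly 20 GB. The gap grows with every additional workload, because each new VM adds another OS copy and each new container adds only the application.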

The counterpoint to containers’ advantage in size and efficiency is security. Although both VMs and containers provide reliable isolated environments, virtual machines offer stronger isolation. With each VM running its own OS copy, virtual machines provide firm security boundaries between each VM, as well as between VMs and the host operating system.

The benefits of containerized applications

Scalability: Containerized applications can be scaled up to meet increasing application load by quickly adding new container instances to handle the load. On the other hand, there’s a limited number of VMs you can add to your environment, and they also take much longer to spin up, especially in an increased load scenario.

Portability: Containerized applications can run almost anywhere, thanks to container images. Container images are not tied to a specific host machine or cloud provider and can be used to deploy containerized apps on any platform or cloud that runs a compatible container runtime.

Quick to create and deploy: Since containerized applications share the same computing resources, you no longer need to configure settings and allocate resources separately for each container, saving you time and effort.

Lightweight: Containers do not require their own copy of the operating system. With only their necessary dependencies included, containerized applications are only a few tens of MBs in size, making them extremely lightweight and fast to spin up.

Reduced costs: Unlike running multiple VMs, running multiple containers does not require each one to include a full copy of the operating system. This approach reduces overhead, which translates not only to faster spin-up times and better overall performance but also to lower server and licensing costs.

Fault isolation: All containers in a containerized application environment run independently and are isolated from each other. A failure occurring in one container does not affect other containers. This way, organizations can identify and fix issues in the affected container with no downtime or any effect on the other containers.
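The scalability benefit described above can be sketched as a small scaling rule: work out how many instances the current load needs, then add or remove instances until that number is reached. This is a simplified illustration, not how any particular orchestrator implements autoscaling. The function names, the `web-N` instance names, and the figures for load and per-instance capacity are all hypothetical.

```python
import math


def desired_instances(load_rps, capacity_rps, minimum=1):
    """Instances needed so that no instance exceeds its assumed capacity."""
    return max(minimum, math.ceil(load_rps / capacity_rps))


def reconcile(running, desired):
    """Return the instance list after scaling it out or in to `desired`."""
    running = list(running)
    # Scale out: start new instances until the target is reached.
    while len(running) < desired:
        running.append(f"web-{len(running) + 1}")
    # Scale in: drop any instances beyond the target.
    del running[desired:]
    return running


running = ["web-1", "web-2"]

# Load rises to 450 requests/s; each instance is assumed to handle 100.
target = desired_instances(load_rps=450, capacity_rps=100)
running = reconcile(running, target)
print(target, running)

# Load drops again, so the extra instances are removed.
target = desired_instances(load_rps=80, capacity_rps=100)
running = reconcile(running, target)
print(target, running)
```

Because each instance is launched from the same image, scaling out is only a matter of starting more copies, which is why containers can react to load changes faster than VMs that each have to boot a full operating system.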

The challenges of containerized applications

Complexity: With the increased adoption of containers, organizations run a growing number of containers across multiple systems and environments. The ease of quickly spinning up containers adds to the complexity, as it becomes increasingly difficult for teams to track and manage their containerized applications properly.

Security: While containers are isolated from each other, they are not fully isolated from the host operating system, whose kernel they share. Vulnerabilities in the host OS can potentially impact all the containers in this environment. The speed with which containers are spun up also adds security risks, as undetected misconfigurations or bugs can be exploited.

Potential to increase costs: The benefit of quickly setting up and deploying containers can also become a drawback in terms of cost. Rolling out containers arbitrarily and neglecting to shut them down when they are no longer needed results in unnecessary costs, as these unused containers keep consuming server resources.
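One simple way to keep forgotten containers from driving up costs is to flag instances that have been idle for longer than some threshold. The sketch below assumes you already have a per-container idle time from your monitoring tooling. The `ContainerStats` record, the container names, the idle times, and the two-hour threshold are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContainerStats:
    name: str
    idle_minutes: int  # assumed to come from your monitoring tooling


def find_idle(stats, threshold_minutes):
    """Names of containers that have been idle for at least the threshold."""
    return [s.name for s in stats if s.idle_minutes >= threshold_minutes]


# Hypothetical sample data, not measurements from a real system.
fleet = [
    ContainerStats("web-1", 2),
    ContainerStats("batch-7", 240),
    ContainerStats("test-env-3", 1440),
]

idle = find_idle(fleet, threshold_minutes=120)
print(idle)
```

A report like this can then feed a review or an automated cleanup job, so that containers nobody is using stop consuming server resources.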

Common use cases for containerized applications

Microservices: Unlike monolithic applications, microservice-based applications consist of many independent components, each deployed in its own container. Each container includes a small part of the application, and they all work together as one cohesive app.

DevOps: DevOps teams can take advantage of various benefits of containerized applications architecture. With the ability to quickly create lightweight application environments, DevOps teams are much more agile with their application building, testing, and deploying processes.

CI/CD: Containerized applications allow organizations to quickly and easily test multiple applications simultaneously, speeding up their Continuous Integration/Continuous Delivery (CI/CD) pipeline.

Getting started with containerized applications

Starting your containerization journey can seem daunting due to the complexity of the technology itself, as well as the vast and expanding ecosystem of tools and services. To give you a head start, here’s a (non-exhaustive) list of the essential tools you’ll need to start containerizing applications:

Container runtimes: To kick things off, you’ll need a container runtime. The options are numerous, so make sure you do your due diligence and evaluate them carefully to pick the right one for your application. There are three types of container runtimes: low-level (e.g. runc, crun), high-level (e.g. containerd, Docker, CRI-O, Windows Containers), and sandboxed (e.g. gVisor, Nabla Containers).

Container registries: A container registry is used both as a collection of repositories and a searchable catalog for managing and deploying images. Among the most popular container registries are Docker Hub, GitHub Packages, and Red Hat Quay. The risk of vendor lock-in is high when choosing a container registry, so make sure to audit your options carefully and pick the registry that best serves the needs of your application.

Container orchestration platforms: To effectively and efficiently run and support containers in production, you need a container orchestration platform. The most popular container orchestration platform is by far Kubernetes, which has been dominating the container ecosystem for years. Other popular options, as seen earlier, include OpenShift, HashiCorp Nomad, Docker Swarm, and Rancher.

Hosting services: To make your containerized applications accessible to public users, you will need a hosting service. Several vendors offer container hosting, with the most popular being Microsoft Azure, Google Cloud, and Amazon Web Services (AWS). Other options include Heroku, Gatsby Cloud, and Netlify.

Container publishing services: Publishing containerized applications in a secure way can indeed become quite complicated. That’s why container publishing tools like Traefik Hub are quite handy. Traefik Hub is a cloud native networking platform that helps you publish and secure containers at the edge of your infrastructure instantly. Traefik Hub also provides a gateway to your services running on Kubernetes or other orchestrators. Sign up for a free account and start publishing and securing your containers in a few clicks.

Networking stack: The level of complexity that comes with containerized application architectures cannot be overstated. With the right tools, however, you can minimize the impact this complexity has on your day-to-day operations. A unified cloud native networking solution that brings API management, ingress control, and service mesh can do the trick. Traefik Enterprise eases the complexity of containerized applications for your dev and ops teams across your organization.
