Combining Ingress Controllers and External Load Balancers with Kubernetes

The great promise of Kubernetes (also known as K8s) is the ability to deploy and scale containerized applications easily. By automating the allocation and provisioning of compute and storage resources for Pods across nodes, K8s reduces the complexity of day-to-day operations. However, orchestrating containers alone doesn’t necessarily help engineers meet the connectivity requirements for users. Specifically, the Pods created by a Kubernetes Deployment receive cluster-internal IP addresses that, by default, cannot be reached from outside the cluster. Outside of Kubernetes, operators are typically familiar with deploying external load balancers, either in cloud or physical data center environments, to route traffic to application instances. However, effectively mapping these operational patterns to K8s requires understanding how load balancers work together with Ingress Controllers in a Kubernetes architecture.

External load balancers and Kubernetes

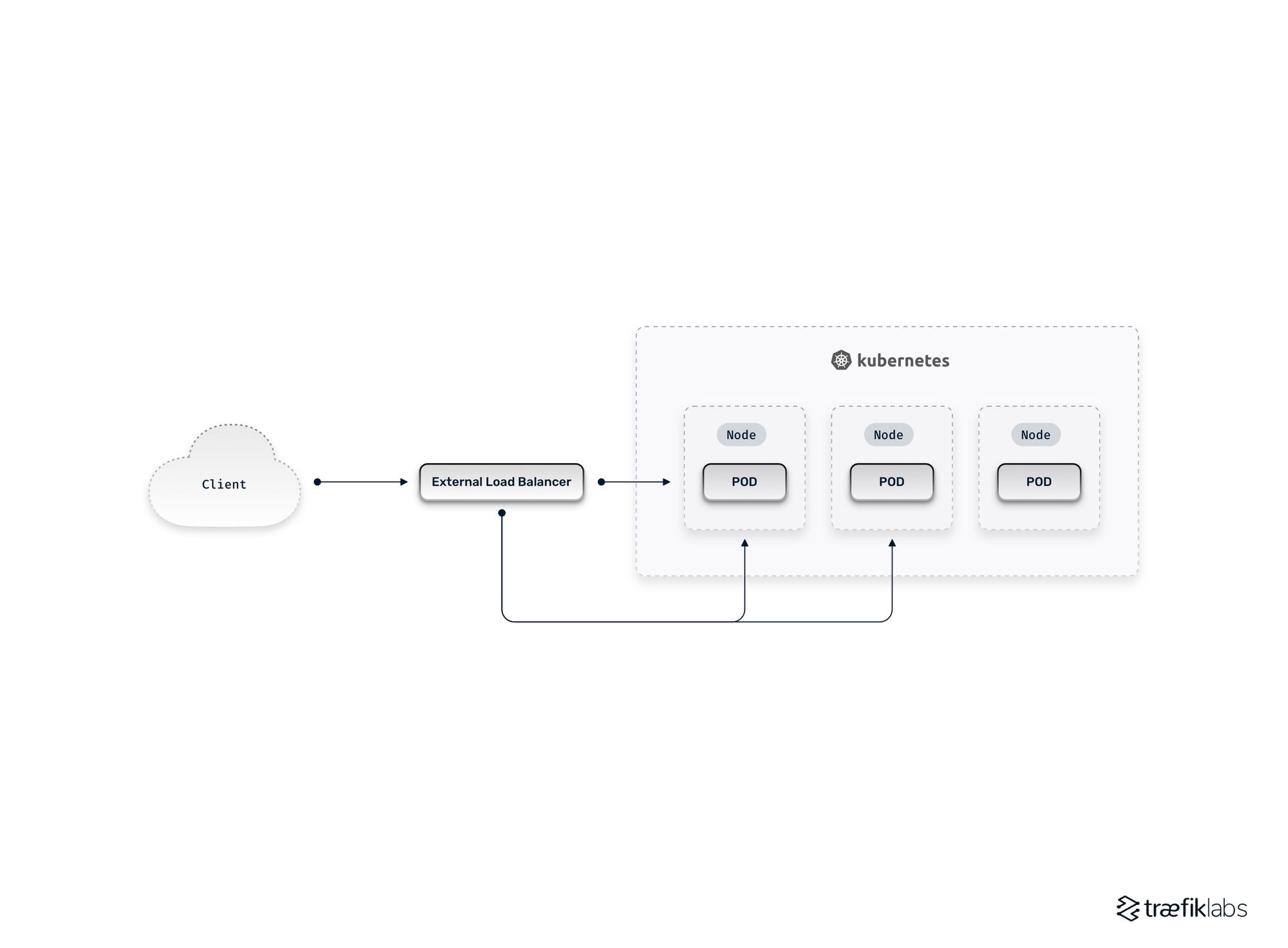

Overview of external LBs and K8s

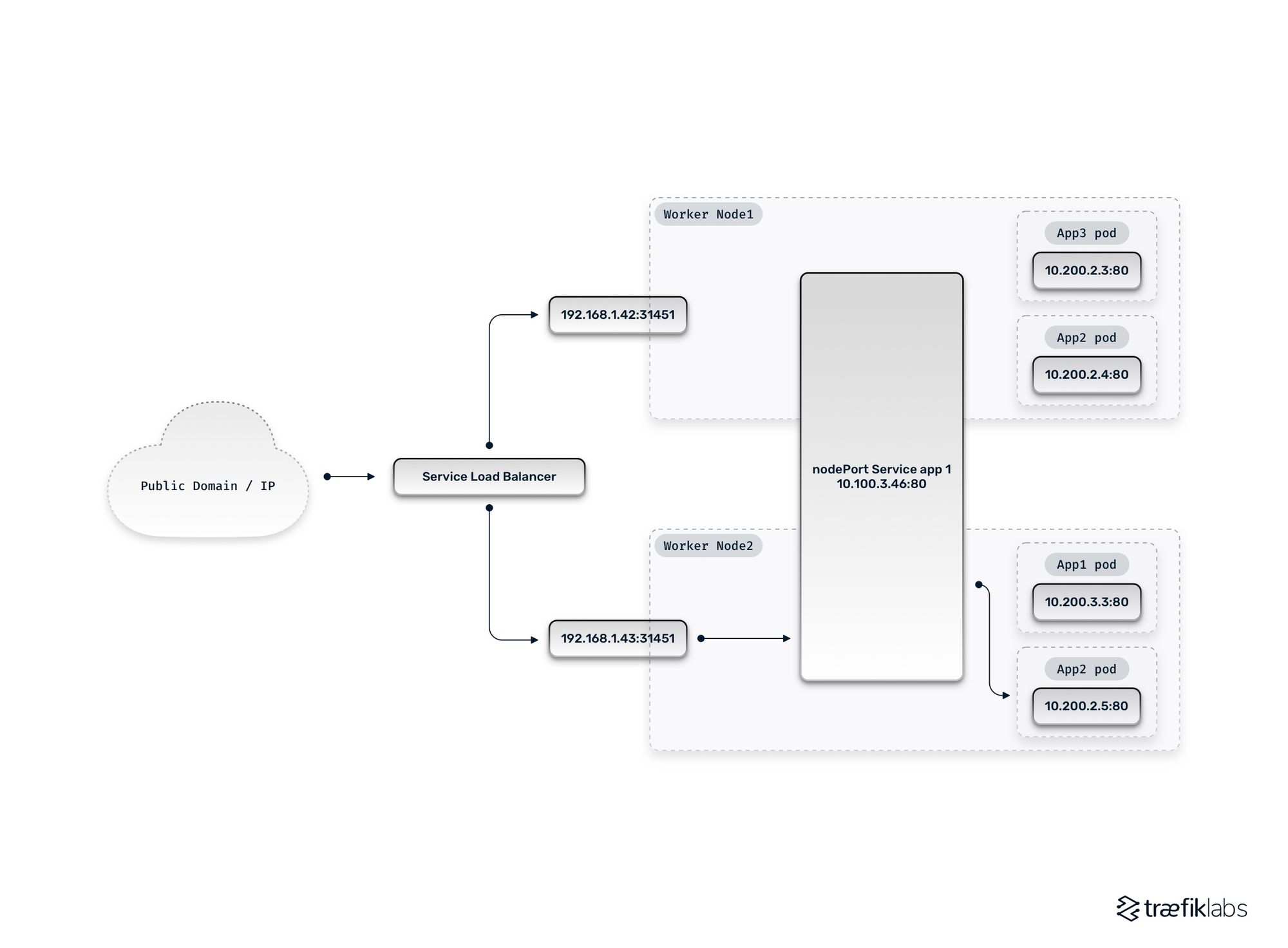

To expose application endpoints, Kubernetes networking allows users to explicitly define Services. K8s then automates provisioning of the appropriate networking resources based on the service type specified. The NodePort service type allocates a port that can be reached over the network on every node in the K8s cluster. The LoadBalancer service type builds on this mechanism: it deploys and configures an external load balancer (often through a cloud-managed API) that forwards traffic to the application through the NodePort. When a request arrives at the configured port on a node, the node uses kube-proxy to forward packets as needed to the destination Pods.
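To make the two service types concrete, here is a minimal sketch that builds Service manifests as plain Python dictionaries and prints one as JSON (which `kubectl apply -f` accepts as well as YAML). The names `web-nodeport`, `web-lb`, the `app: web` label, and the port numbers are assumptions chosen for illustration, not values from any real cluster.

```python
import json


def make_service(name, app_label, service_type, port, target_port):
    """Build a core/v1 Service manifest as a dict (all names are hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # "NodePort" opens a port on every node; "LoadBalancer" additionally
            # asks the platform to provision an external load balancer.
            "type": service_type,
            # Traffic is sent to Pods carrying this label.
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


node_port_svc = make_service("web-nodeport", "web", "NodePort", 80, 8080)
load_balancer_svc = make_service("web-lb", "web", "LoadBalancer", 80, 8080)

# Emit JSON that could be saved to a file and applied to a cluster.
print(json.dumps(load_balancer_svc, indent=2))
```

The only difference between the two manifests is the `type` field, which mirrors the point above: a LoadBalancer service reuses the NodePort mechanism and adds an external load balancer in front of it.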

Given the ability to easily define LoadBalancer services to route incoming traffic from external clients, the story may seem complete. However, in any real-life scenario, provisioning a dedicated external load balancer for every application that needs external access (as seen in the diagram above) has significant drawbacks, including:

- Cost overheads: External load balancers, whether cloud-managed or instantiated through physical network appliances on-premises, can be expensive. For K8s clusters with many applications, these costs will quickly add up.

- Operational complexity: Load balancers require various resources (IP addresses, DNS, certificates, etc.) that can be painful to manage, particularly in highly dynamic environments where there may be transient endpoints for staging and development deployments.

- Monitoring and logging: Given the importance of external load balancers in the traffic flow, centralizing monitoring and logging data effectively is critical. However, this may become challenging in practice when there are multiple load balancers involved.

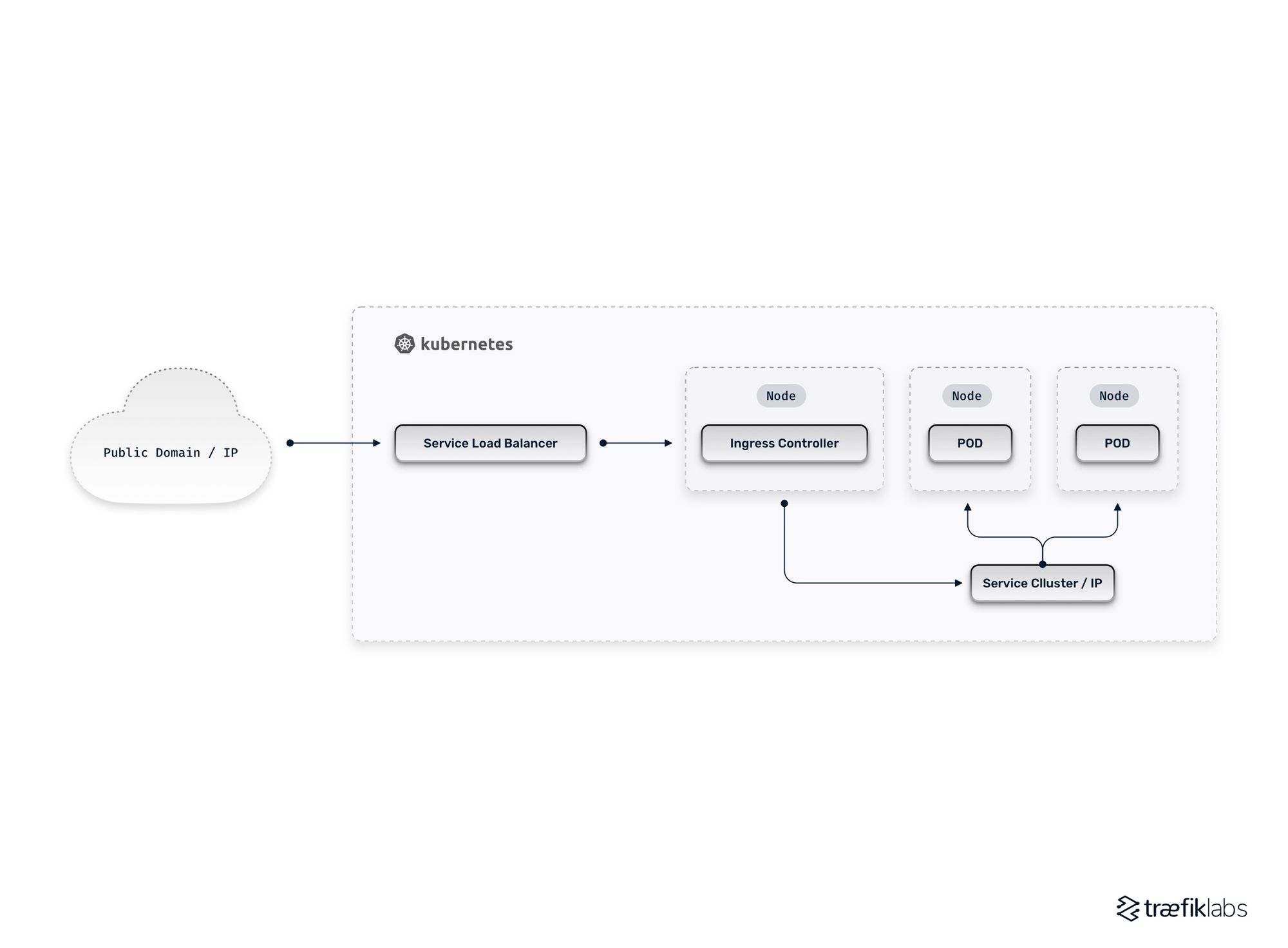

The perfect marriage: Load balancers and Ingress Controllers

External load balancers alone aren’t a practical solution for providing the networking capabilities necessary for a K8s environment. Luckily, the Kubernetes architecture allows users to combine load balancers with an Ingress Controller. The core idea is as follows: instead of provisioning an external load balancer for every application service that needs external connectivity, users deploy and configure a single load balancer that targets an Ingress Controller. The Ingress Controller serves as a single entry point and can then route traffic to multiple applications in the cluster. Key elements of this approach include:

- Ingress Controllers are K8s applications: While they may seem somewhat magical, it’s useful to remember that Ingress Controllers are nothing more than standard Kubernetes applications instantiated via Pods.

- Ingress Controllers are exposed as a service: The K8s application that constitutes an Ingress Controller is exposed through a LoadBalancer service type, thereby mapping it to an external load balancer.

- Ingress Controllers route to underlying applications using ClusterIPs: As an intermediary between the external load balancer and applications, the Ingress Controller uses ClusterIP service types to route and balance traffic across application instances based upon operator-provided configurations.
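The "operator-provided configurations" mentioned in the last point are typically expressed as Ingress resources. The sketch below builds one such manifest as a Python dictionary, routing two hostnames to three backend Services. The hostnames, Service names, ports, and the `nginx` ingress class are assumptions for illustration; the class name in particular depends on which controller is installed.

```python
import json


def make_ingress(name, ingress_class, routes):
    """Build a networking.k8s.io/v1 Ingress from (host, path, service, port) tuples."""
    paths_by_host = {}
    for host, path, service_name, service_port in routes:
        paths_by_host.setdefault(host, []).append({
            "path": path,
            "pathType": "Prefix",
            # Each backend is a Service inside the cluster (typically ClusterIP).
            "backend": {
                "service": {"name": service_name, "port": {"number": service_port}}
            },
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which installed Ingress Controller handles this resource.
            "ingressClassName": ingress_class,
            "rules": [
                {"host": host, "http": {"paths": paths}}
                for host, paths in paths_by_host.items()
            ],
        },
    }


# Hypothetical applications sharing one entry point.
ingress = make_ingress("apps", "nginx", [
    ("shop.example.com", "/", "shop", 80),
    ("shop.example.com", "/api", "shop-api", 8080),
    ("blog.example.com", "/", "blog", 80),
])

print(json.dumps(ingress, indent=2))
```

Three applications are reachable here through a single Ingress Controller, and therefore through a single external load balancer, rather than through three separate LoadBalancer services.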

As shown in the diagram above, injecting the Ingress Controller into the traffic path allows users to gain the benefits of external load balancer capabilities while avoiding the pitfalls of relying upon them exclusively. Indeed, the Kubernetes architecture allows operators to integrate multiple Ingress Controllers, thereby providing a high degree of flexibility to meet specific requirements. This can be a bit overwhelming for those new to Kubernetes networking, so let’s review a few example deployment patterns.

Example deployment patterns

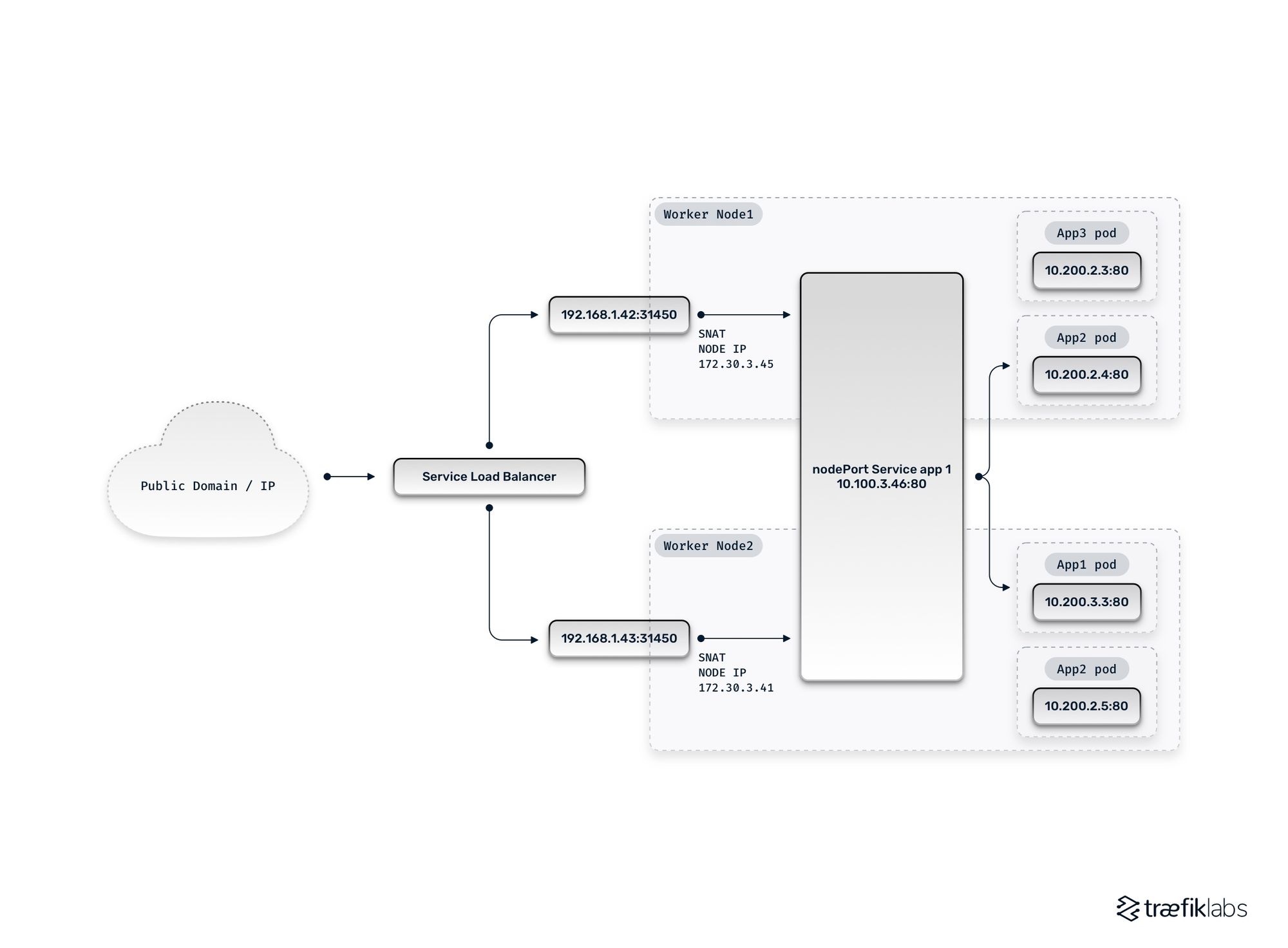

Load balancer with NodePorts and traffic forwarding

As mentioned earlier, a LoadBalancer service type results in an external load balancer that uses NodePorts to reach backend services. Since every node exposes the target port, the load balancer can spread traffic across all of them. However, the underlying Pods may be running on only a subset of the K8s nodes, so a node that receives a request may need to forward it to another node. As part of forwarding traffic, kube-proxy performs source network address translation (SNAT), which hides the original client IP address from the application. Where business requirements call for the client address (e.g. for compliance purposes), HTTP headers such as X-Forwarded-For, or its standardized successor Forwarded, can carry it, provided an HTTP-aware load balancer or proxy in the path sets them.
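As an illustration of how an application might recover the client address once SNAT has hidden it, the following sketch parses an X-Forwarded-For header. It assumes that an HTTP-aware load balancer or proxy appends the address it received the request from, and that the operator knows the address ranges of those trusted proxies. The function name and the example addresses are hypothetical.

```python
import ipaddress


def client_ip_from_xff(header_value, trusted_proxies):
    """Return the right-most X-Forwarded-For entry that is not a trusted proxy.

    Walking from the right avoids trusting entries that a client may have
    forged at the left of the header. Returns None if no such entry exists
    or an entry is malformed.
    """
    hops = [h.strip() for h in header_value.split(",") if h.strip()]
    trusted = [ipaddress.ip_network(net) for net in trusted_proxies]
    for hop in reversed(hops):
        try:
            addr = ipaddress.ip_address(hop)
        except ValueError:
            return None  # malformed entry: stop rather than guess
        if not any(addr in net for net in trusted):
            return str(addr)
    return None


# Assumed setup: proxies live in 10.0.0.0/8, the client is 203.0.113.7.
trusted = ["10.0.0.0/8"]
plain = client_ip_from_xff("203.0.113.7, 10.0.0.5", trusted)
spoofed = client_ip_from_xff("1.2.3.4, 203.0.113.7, 10.0.0.5", trusted)
print(plain, spoofed)
```

In both calls the function returns the address that the first trusted proxy actually saw, even when the client prepends a forged entry. Whether this is sufficient for a given compliance requirement depends on how the load balancer in front of the cluster is configured.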

Load balancer with NodePorts and no SNAT

To avoid SNAT in the traffic flow, you can modify the previous deployment pattern so that the external load balancer targets only the nodes running Pods of the Ingress Controller deployment. Specifically, the externalTrafficPolicy on the LoadBalancer service for the Ingress Controller can be set to Local instead of the default Cluster value. Alternatively, you can change the CNI plugin (e.g. to Calico) and rely on a fully routed network.
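The policy change itself is a single field on the Service. A minimal sketch, again using a Python dictionary in place of a manifest file; the Service name `ingress-controller` and its label are hypothetical and will differ between controller distributions.

```python
import copy
import json


def with_local_traffic_policy(service):
    """Return a copy of a Service manifest with externalTrafficPolicy set to Local."""
    patched = copy.deepcopy(service)
    # "Cluster" is the default; "Local" keeps traffic on the receiving node.
    patched["spec"]["externalTrafficPolicy"] = "Local"
    return patched


# Hypothetical LoadBalancer Service fronting an Ingress Controller.
controller_svc = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "ingress-controller"},
    "spec": {
        "type": "LoadBalancer",
        "selector": {"app": "ingress-controller"},
        "ports": [{"protocol": "TCP", "port": 443, "targetPort": 8443}],
    },
}

local_svc = with_local_traffic_policy(controller_svc)
print(json.dumps(local_svc["spec"], indent=2))
```

With this setting, a node only delivers external traffic to Ingress Controller Pods running on that same node, which is why no cross-node forwarding, and hence no SNAT, is needed.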

Some potential drawbacks of this pattern, however, include:

- Reduced load-spreading: Since all traffic is funneled to a subset of nodes, there may be traffic imbalances.

- Use of health checks: The external load balancer uses a health check to determine which nodes to target, which can result in transient errors (e.g. due to rolling deployments, health check timeouts, etc.).
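The load-spreading drawback can be seen with a small calculation. The sketch below uses an idealized model, which is an assumption rather than a description of any particular load balancer: the external load balancer splits traffic evenly across the nodes it targets, and each node splits its share evenly across the Pods it may deliver to. The node names and Pod counts are made up.

```python
from fractions import Fraction


def per_pod_share(pods_per_node, policy):
    """Return {(node, pod_index): fraction of total traffic} under the idealized model."""
    shares = {}
    if policy == "Cluster":
        # Every node is a target and may forward to any Pod in the cluster,
        # so each Pod ends up with an equal share.
        total_pods = sum(pods_per_node.values())
        for node, count in pods_per_node.items():
            for i in range(count):
                shares[(node, i)] = Fraction(1, total_pods)
    elif policy == "Local":
        # Only nodes with at least one local Pod pass the health check,
        # and each such node delivers only to its own Pods.
        targets = {n: c for n, c in pods_per_node.items() if c > 0}
        for node, count in targets.items():
            for i in range(count):
                shares[(node, i)] = Fraction(1, len(targets)) / count
    else:
        raise ValueError(f"unknown policy: {policy}")
    return shares


# Hypothetical placement: one controller Pod on node-a, three on node-b.
placement = {"node-a": 1, "node-b": 3, "node-c": 0}
cluster_shares = per_pod_share(placement, "Cluster")
local_shares = per_pod_share(placement, "Local")

for pod, share in local_shares.items():
    print(pod, share)
```

Under this model the single Pod on node-a handles half of all traffic with the Local policy, while each Pod on node-b handles one sixth; with the Cluster policy every Pod handles one quarter. Spreading Ingress Controller Pods evenly across nodes reduces the imbalance.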

Choose your Ingress Controller wisely

Users can select a technology-specific Ingress Controller based upon desired feature capabilities, and configure Ingress resources for underlying applications accordingly. Examples of potential criteria that may be relevant when comparing candidate controllers include:

- Protocol support: If your needs extend beyond HTTP(S) to routing TCP/UDP or gRPC traffic, it’s important to recognize that not all controller implementations support the full array of protocols.

- Zero-downtime configuration updates: Not all controllers support configuration updates without incurring downtime.

- High availability: If you want to avoid your Ingress Controller becoming a potential single point of failure (SPOF) for external traffic, you should identify controllers that support high-availability configurations.

- Enterprise support: While many open source controller options are available, not all provide enterprise support options that teams can rely on when needed.

The ability to easily piece together load balancers and Ingress Controllers in a manner that meets the unique business needs of an organization exemplifies the flexibility of K8s networking.

To learn more, download our white paper that covers modern traffic management strategies for microservices with Kubernetes and how to overcome common “day 2” challenges.