Distributed systems are highly scalable and efficient — but only when integrated into a powerful network. A distributed system can only function if all its applications can communicate effectively with one another. However, this is often easier said than done due to the multi-layered nature of modern architectures.

Modern architectures consist of applications written in various languages with different protocols, and they are spread across multi-cloud and multi-cluster environments. With so many different components in question, middleware makes connectivity possible.

What is middleware?

At its simplest, middleware is software that lives between two different components. The word is a blend of ‘middle’ and ‘software’: it is software in the middle.

The term middleware has been in use since at least 1968, when it was included in a report on a NATO Software Engineering conference that took place in Garmisch-Partenkirchen, Germany. At the time, middleware had a pretty straightforward use case: to exchange data between monolithic applications. Middleware took the form of massive software components that collected data from one application and delivered it to another. Today, we would call this an Extract, Transform, Load (ETL) process.

Contemporary information systems are much more heterogeneous, and middleware must take a much more active role. In addition to relaying traffic from one application to another, today’s middleware affects the messages themselves. This is because distributed systems require middleware to enable communication between two components not designed to communicate with one another.

Modern middleware can transform data so that the receiving application understands the messages being relayed. Middleware also provides functions to secure the network, denying access to unknown users. It can add data to or remove data from messages, to fulfill requirements or simplify the network. Finally, middleware can aggregate data from different applications. These are a few ways modern middleware lets you affect the messages passed along a network.

How does middleware work?

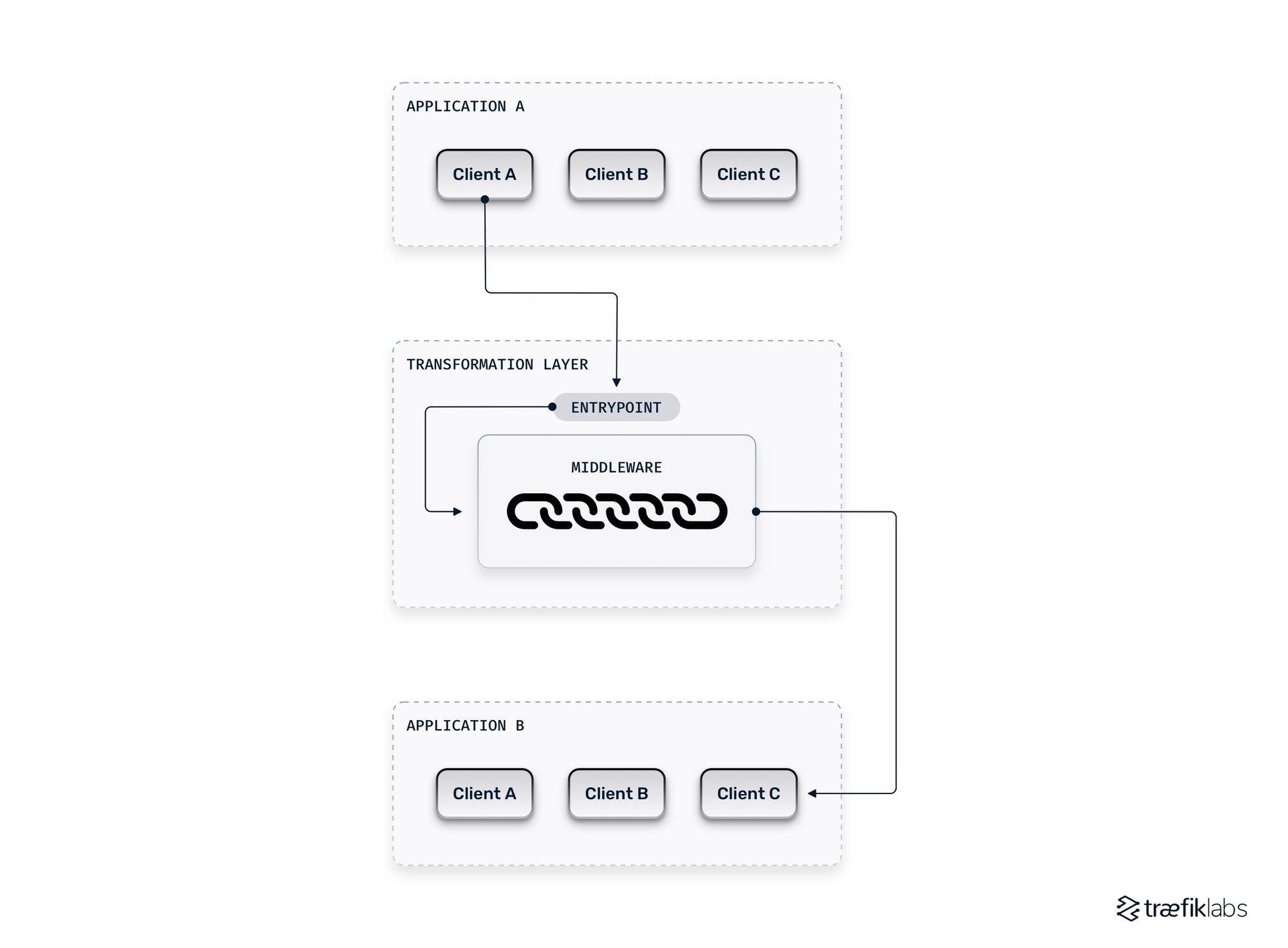

Modern middleware is a transformation layer that sits between two applications. The reverse proxy of one application transmits a message to the entrypoint of the middleware layer. The message then passes through a router before being sent through a chain of middleware that transforms, secures, adds to, or removes from the message. Finally, the message passes through the service of the middleware layer before going to the correct service of the receiving application.
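
To make that flow concrete, here is a minimal sketch of an entrypoint, a router, a chain of middleware, and a service. It is an illustration only, not how any particular product implements the flow. The names `tag`, `build_chain`, `entrypoint`, and `service_b`, the `/b` route, and the dict-based message are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, List

# Assumption: a message is a plain dict, and a handler maps a message to a reply.
Message = Dict[str, Any]
Handler = Callable[[Message], Message]
Middleware = Callable[[Handler], Handler]


def tag(name: str) -> Middleware:
    """Hypothetical middleware: record its name on the message, then pass it on."""
    def wrap(next_handler: Handler) -> Handler:
        def handler(msg: Message) -> Message:
            trace = list(msg.get("trace", [])) + [name]
            return next_handler({**msg, "trace": trace})
        return handler
    return wrap


def build_chain(middlewares: List[Middleware], service: Handler) -> Handler:
    """Wrap the service so that the first middleware in the list runs first."""
    handler = service
    for middleware in reversed(middlewares):
        handler = middleware(handler)
    return handler


def service_b(msg: Message) -> Message:
    """Stand-in for the receiving application: report the path the message took."""
    return {"status": 200, "trace": list(msg.get("trace", [])) + ["service_b"]}


def entrypoint(routes: Dict[str, Handler], msg: Message) -> Message:
    """Accept a message and route it by path prefix to the matching chain."""
    for prefix, handler in routes.items():
        if msg["path"].startswith(prefix):
            return handler(msg)
    return {"status": 404, "trace": []}


routes = {"/b": build_chain([tag("transform"), tag("secure")], service_b)}
reply = entrypoint(routes, {"path": "/b/orders"})
print(reply["trace"])  # ['transform', 'secure', 'service_b']
```

Each middleware wraps the next handler, so the order of the list is the order in which the message is processed on its way to the service.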

What are some examples of middleware?

Modern middleware often includes a rich list of features.

Middleware transforms messages by:

- Translating data from one protocol to another

- Compressing data

- Removing unnecessary information, such as prefixes
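
The three transformations above can be sketched with the Python standard library. This is a simplified illustration: the function names are hypothetical, and re-encoding a JSON body as a URL-encoded form is used here only as a small stand-in for translating between protocols.

```python
import gzip
import json
from typing import Dict, Tuple
from urllib.parse import urlencode


def strip_prefix(path: str, prefix: str) -> str:
    """Remove a routing prefix (for example "/api") that the backend does not expect."""
    if path == prefix or path.startswith(prefix + "/"):
        return path[len(prefix):] or "/"
    return path


def json_to_form(body: bytes) -> bytes:
    """Re-encode a flat JSON object as a URL-encoded form body."""
    fields = json.loads(body)
    if not isinstance(fields, dict):
        raise ValueError("expected a JSON object")
    return urlencode(fields).encode("ascii")


def compress(headers: Dict[str, str], body: bytes) -> Tuple[Dict[str, str], bytes]:
    """Gzip the body and update the headers that describe it."""
    compressed = gzip.compress(body)
    new_headers = {
        **headers,
        "Content-Encoding": "gzip",
        "Content-Length": str(len(compressed)),
    }
    return new_headers, compressed
```

For example, `strip_prefix("/api/users", "/api")` returns `"/users"`, while a path such as `"/apiary"` is left untouched because the prefix only matches on a segment boundary.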

Middleware secures the network by:

- Authorizing specific applications to send messages

- Limiting access to an application to specific IPs
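
Both security checks can be sketched in a few lines. The example below assumes that applications identify themselves with a shared token and that the middleware sees the client IP. The function names, the `app-a` identifier, the token value, and the address ranges are made up for illustration.

```python
import hmac
import ipaddress
from typing import Callable, Dict, Iterable


def make_ip_allowlist(cidrs: Iterable[str]) -> Callable[[str], bool]:
    """Build a check that accepts only clients inside the given CIDR ranges."""
    networks = [ipaddress.ip_network(cidr) for cidr in cidrs]

    def is_allowed(client_ip: str) -> bool:
        address = ipaddress.ip_address(client_ip)
        return any(address in network for network in networks)

    return is_allowed


def make_app_authorizer(tokens: Dict[str, str]) -> Callable[[str, str], bool]:
    """Build a check that accepts only known applications with the right token."""
    def is_authorized(app_id: str, token: str) -> bool:
        expected = tokens.get(app_id)
        if expected is None:
            return False
        # Constant-time comparison avoids leaking the token through timing.
        return hmac.compare_digest(expected.encode(), token.encode())

    return is_authorized


# Hypothetical configuration: one private range and one single host.
allow_ip = make_ip_allowlist(["10.0.0.0/8", "192.168.1.7/32"])
authorize = make_app_authorizer({"app-a": "s3cret"})
```

A request would be forwarded only when both `allow_ip(client_ip)` and `authorize(app_id, token)` return `True`.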

Middleware adds to or removes data by:

- Adding content such as client certificates to the header of requests

- Defining rules for which requests will be rejected

- Limiting the number of simultaneous, in-flight requests

- Imposing rate limits to make sure services are treated fairly

- Protecting systems from stacking requests to unhealthy services
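
Two of these protections, limiting in-flight requests and shielding unhealthy services, can be sketched as follows. This is a simplified, single-process illustration under stated assumptions: the class names, status codes, and thresholds are hypothetical, and the circuit breaker is not thread-safe.

```python
import threading
import time
from typing import Any, Callable, Dict, Optional

Request = Dict[str, Any]
Response = Dict[str, Any]
Handler = Callable[[Request], Response]


class InFlightLimiter:
    """Reject requests while too many are already being processed."""

    def __init__(self, max_in_flight: int) -> None:
        self._slots = threading.BoundedSemaphore(max_in_flight)

    def call(self, handler: Handler, request: Request) -> Response:
        if not self._slots.acquire(blocking=False):
            return {"status": 429, "reason": "too many in-flight requests"}
        try:
            return handler(request)
        finally:
            self._slots.release()


class CircuitBreaker:
    """Stop forwarding to a service after repeated failures, then retry later."""

    def __init__(self, failure_threshold: int, reset_after: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._threshold = failure_threshold
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, handler: Handler, request: Request) -> Response:
        now = self._clock()
        if self._opened_at is not None:
            if now - self._opened_at < self._reset_after:
                return {"status": 503, "reason": "circuit open"}
            # Cool-down elapsed: let a trial request through (half-open).
            # A single further failure reopens the circuit.
            self._opened_at = None
            self._failures = self._threshold - 1
        try:
            response = handler(request)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = now
            return {"status": 502, "reason": "service failed"}
        self._failures = 0
        return response
```

While the circuit is open, requests are answered immediately instead of piling up behind a service that cannot respond.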

Middleware aggregates data from different applications by:

- Defining reusable combinations of other pieces of middleware

- Combining multiple messages into one when the information is the same

With this feature list in mind, let’s walk through an example scenario to illustrate how middleware might affect data. Imagine you have an Application A that wants to converse with an Application B. Your middleware will collect the message, digest the content, and pass it along to Application B.

Along the way, it will affect the message in several ways. Say Application A speaks REST over HTTP, but Application B is an SQL database. The middleware will translate the message from an HTTP request into an SQL statement that Application B understands. It will validate the security of Application A, authorizing it to communicate with Application B. It will ensure the message meets the requirements you’ve outlined, such as not exceeding a certain size. It will also add a timestamp to the message, as that is useful to the business.
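
The scenario can be sketched end to end with an in-memory SQLite database standing in for Application B. Everything specific here is an assumption made for the example: the `app-a` identifier, the `/customers` route, the `customers` table, the 1024-byte limit, and the `handle_request` function itself.

```python
import json
import sqlite3
from datetime import datetime, timezone

AUTHORIZED_APPS = {"app-a"}  # hypothetical identifier for Application A
MAX_BODY_BYTES = 1024        # hypothetical size limit


def handle_request(conn: sqlite3.Connection, app_id: str, method: str,
                   path: str, raw_body: bytes) -> int:
    """Relay an HTTP-style request as SQL and return an HTTP-style status code."""
    if app_id not in AUTHORIZED_APPS:      # validate the sender
        return 403
    if len(raw_body) > MAX_BODY_BYTES:     # enforce the size requirement
        return 413
    if method != "POST" or path != "/customers":
        return 404
    try:
        payload = json.loads(raw_body)
    except ValueError:
        return 400
    if not isinstance(payload, dict) or not isinstance(payload.get("name"), str):
        return 400
    # Add a timestamp, then translate the request into a parameterized INSERT.
    received_at = datetime.now(timezone.utc).isoformat()
    conn.execute(
        "INSERT INTO customers (name, received_at) VALUES (?, ?)",
        (payload["name"], received_at),
    )
    conn.commit()
    return 201


conn = sqlite3.connect(":memory:")  # stands in for Application B
conn.execute("CREATE TABLE customers (name TEXT, received_at TEXT)")
status = handle_request(conn, "app-a", "POST", "/customers", b'{"name": "Ada"}')
```

The parameterized query keeps the translation step from turning request data into executable SQL.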

However, middleware in distributed systems doesn’t just sit between two applications; it relays communication between many more applications. Let’s look at a more complex example.

Rate limiting middleware helps you manage the flow of traffic between applications. It lets you limit the number of requests each proxy can receive per unit of time (the rate), so that each service is treated fairly. For example, clients A, C, and D can each send x requests per minute to Application B. Middleware also lets you reject requests once capacity is reached, to prevent your infrastructure from failing.
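
One common way to implement per-client rate limits is a token bucket. The sketch below assumes that technique; the text above does not prescribe one. The class names, the `burst` parameter, and the injectable clock are choices made for this example.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow a steady rate of requests, with a small burst allowance."""

    def __init__(self, rate_per_minute: float, burst: int,
                 clock: Callable[[], float]) -> None:
        self._rate = rate_per_minute / 60.0  # tokens added per second
        self._capacity = float(burst)
        self._tokens = float(burst)
        self._clock = clock
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Keep one bucket per client so that each client gets the same allowance."""

    def __init__(self, rate_per_minute: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate_per_minute = rate_per_minute
        self._burst = burst
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self._rate_per_minute, self._burst, self._clock)
            self._buckets[client_id] = bucket
        return bucket.allow()


now = [0.0]  # fake clock so that the example is deterministic
limiter = RateLimiter(rate_per_minute=60, burst=2, clock=lambda: now[0])
results = [limiter.allow("A") for _ in range(3)]
print(results)  # [True, True, False]
```

Client A exhausts its burst of two requests and is rejected on the third, while a different client such as C still has its full allowance.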

Middleware additionally lets you aggregate data from multiple services. Say Application B needs someone’s name and date of birth. Application A has the name, and Application C has the date of birth. Middleware will combine the data into one message for Application B. Similarly, if Application B needs an address and telephone number that Applications C and D both hold, the middleware will combine the duplicate data into one message.
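
A minimal sketch of this aggregation step, assuming each application answers with a flat record. The `aggregate` function, the field names, and the sample values are invented for illustration.

```python
from typing import Dict, Iterable, List


def aggregate(responses: Iterable[Dict[str, str]], wanted: List[str]) -> Dict[str, str]:
    """Merge partial records into one message that holds only the wanted fields."""
    merged: Dict[str, str] = {}
    for response in responses:
        for field in wanted:
            if field not in response:
                continue
            # Identical values from several sources collapse into one entry.
            existing = merged.setdefault(field, response[field])
            if existing != response[field]:
                raise ValueError(f"conflicting values for {field!r}")
    missing = [field for field in wanted if field not in merged]
    if missing:
        raise LookupError(f"no source provided {missing}")
    return merged


# Made-up sample records for Applications A, C, and D.
app_a = {"name": "Ada"}
app_c = {"date_of_birth": "1815-12-10", "address": "1 Example Street", "phone": "555-0100"}
app_d = {"address": "1 Example Street", "phone": "555-0100"}

identity = aggregate([app_a, app_c], ["name", "date_of_birth"])
contact = aggregate([app_c, app_d], ["address", "phone"])
```

Here `identity` combines fields held by different applications, while `contact` collapses the duplicate fields held by C and D into a single message. Raising an error on conflicting values is one possible policy; preferring a designated source would be another.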

When do you need middleware?

You can choose to develop your own middleware. This option is mainly viable if you have legacy applications that don’t rely on standards. In that case, you need to write a transformation layer yourself, since one isn’t available off the shelf. This does give you the flexibility to configure middleware to suit your legacy applications. However, it is incredibly time-consuming, to the extent that very few organizations pursue this path.

More likely than not, you will invest in an external piece of software. Note that middleware is often sold under another name, so it’s not uncommon to buy software that is middleware without it being labeled as such. For example, an enterprise service bus (ESB) is a toolbox that bundles several transformation layers between different applications. An ESB is middleware the same way a car is a vehicle. Either way, any piece of software that lives between applications and acts on the requests themselves is middleware.

If you are building a distributed system (especially one at scale), you will need middleware in one form or another. Your services cannot send messages between themselves without help in the middle. This is especially true if your services have any diversity (which they almost certainly do). Whether you choose to build your own or invest in a third-party product, your choice of middleware will form the backbone of your network.

Building middleware with Traefik Proxy

Traefik Proxy contains a suite of middleware that enables simple and seamless communication between applications. Traefik Enterprise includes an array of advanced middleware for complex deployments at scale.

Built for cloud native scenarios, Traefik Proxy is a reverse proxy that can manage traffic across use cases, including legacy applications in on-prem environments and microservices in the cloud. Traefik Proxy’s middleware supports HTTP, TCP, and soon UDP protocols. It integrates with all major container orchestrators and comes with support for distributed tracing.

Traefik Proxy’s middleware has many functions, including load balancing, API gateway, orchestrator, east-west service communication, and more. An open source tool, Traefik Proxy benefits from a catalog of plugins written by the community.

When building the middleware for your network, you pick and choose the middleware that suits your services to create a chain that can be reused for any service. The pieces of middleware combine like building blocks to form a transformation layer that sits between services. Traefik Proxy also lets you write custom middleware as plugins, which run on the Yaegi Go interpreter, so you can add components that are not available off the shelf. Traefik Proxy empowers DevOps practitioners with the tools to build whatever they want.