How to Keep Your Services Secure With Traefik’s Rate Limiting

The internet can be a challenging environment for running applications.

When you expose a service to the public internet, it's crucial to assess the risks involved. Malicious actors may try to misuse your resources or even bring your service down.

You can't just make your service publicly available without protection and expect things to go smoothly. They won’t. That’s why safeguarding your service is essential. There are many threats to address. Configuring your server properly is a good start, but you also need to protect your application from harmful actions. Our Web Application Firewall (WAF) can assist with that.

However, today we're focusing on a different threat: attempts to overwhelm your resources or disrupt your service with an excessive number of requests. This is where rate limiting comes in.

What is Rate Limiting?

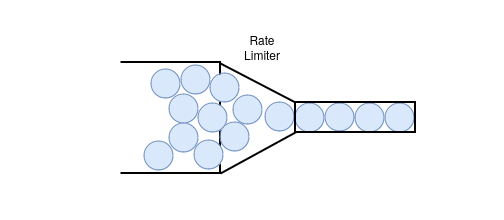

Rate limiting is the process of limiting the flow of requests that reach your servers. Think of it like a funnel, where a large pipe of water narrows into a smaller one, flowing at a much more manageable rate before reaching its destination.

In this analogy, each water molecule represents an HTTP request. A pipe of a certain size limits how many molecules can pass through at once. When the pipe narrows, fewer molecules can flow through, reducing the flow rate.

This illustrates how a rate limiter works to control the flow of requests. Additionally, we can fine-tune the control by limiting traffic based on characteristics like IP address, user, or other request details. You might also want to allow brief bursts of traffic without blocking them entirely.

When discussing rate limiting, the following algorithms are commonly mentioned:

- Token Bucket: The one used by Traefik, which we'll focus on below.

- Leaky Bucket: A first-in, first-out queue that releases traffic at a steady rate. It's less flexible than Token Bucket for handling traffic bursts.

- Generic Cell Rate Algorithm (GCRA): Similar to Leaky Bucket but ensures packets follow a set timing interval instead of draining at a fixed rate.

Two other types of algorithms often come up:

- Sliding Window

- Fixed Window

Unlike rate limiting, which regulates the flow and timing of requests, these two algorithms count the total number of requests within a specific period. They're better suited for enforcing strict usage quotas than managing request flow.
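To make the contrast concrete, here is a minimal sketch of a fixed-window counter. The class name, the quota of 3 requests, and the 60-second window are illustrative assumptions, not taken from any particular library.

```python
from collections import defaultdict


class FixedWindowCounter:
    """Hypothetical quota counter: at most `limit` requests per window."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.counts = defaultdict(int)  # window index -> requests seen so far

    def allow(self, now: float) -> bool:
        # Every timestamp inside the same window maps to the same index.
        window = int(now // self.window_seconds)
        if self.counts[window] >= self.limit:
            return False
        self.counts[window] += 1
        return True


quota = FixedWindowCounter(limit=3, window_seconds=60)
first_minute = [quota.allow(t) for t in (0, 10, 20, 30)]
next_minute = quota.allow(61)
```

The counter only knows how many requests arrived in the current window. It does nothing to space them out, which is why this family of algorithms fits quotas better than flow control.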

Token Bucket

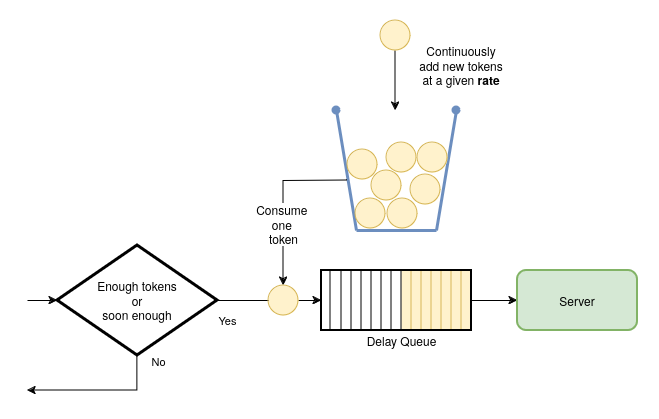

The Token Bucket algorithm controls the flow of requests by using a metaphorical bucket that holds tokens. Tokens are generated at a constant rate and added to the bucket, which has a fixed capacity.

When a request arrives, the system checks if there are enough tokens in the bucket. Each request consumes one token. If enough tokens are available, the request proceeds, and a token is removed. If there aren’t enough tokens, the request is either delayed until more tokens are available or blocked if the delay would be too long.

The bucket can't hold more tokens than its maximum capacity, so once it's full, any new tokens are discarded. This prevents tokens from accumulating indefinitely and allows the system to handle short bursts of high traffic, as long as the bucket has enough capacity.

When the bucket is low or empty, the system slows down, giving time for more tokens to be generated.

The behavior of the Token Bucket algorithm is determined by two key factors:

- Bucket size: This defines how many requests can pass in a single burst before the limiter falls back to the steady rate.

- Rate: This controls how frequently new request opportunities become available as tokens are generated.
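The mechanics described above can be sketched in a few lines. This is a simplified illustration, not Traefik's implementation: the class and parameter names are assumptions, timestamps are passed in explicitly so the behavior is easy to follow, and a request without a token is rejected outright rather than delayed.

```python
class TokenBucket:
    """Hypothetical token bucket: `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate          # tokens generated per second
        self.capacity = capacity  # maximum number of tokens the bucket holds
        self.tokens = capacity    # start with a full bucket
        self.last = now           # timestamp of the previous refill

    def allow(self, now: float) -> bool:
        # Refill for the elapsed time; tokens above capacity are discarded.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        # Each request consumes one token if one is available.
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=2, capacity=3)
burst = [bucket.allow(0.0) for _ in range(4)]  # four requests at the same instant
after_wait = bucket.allow(0.5)                 # half a second later
```

With a capacity of 3, the first three simultaneous requests pass and the fourth is rejected. After half a second at 2 tokens per second, one token is back and the next request passes.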

Rate Limiting in Traefik

Traefik allows you to define a ratelimit middleware that can be applied to your routers. Assigning this middleware ensures that the flow of incoming requests doesn’t exceed the configured rate.

This middleware uses the Token Bucket algorithm described earlier. The bucket size is controlled by the burst parameter, while the rate is set by the average and period values. The following example configures a rate limiter with a rate of 100 requests per second and a bucket size of 200:

    apiVersion: traefik.io/v1alpha1
    kind: Middleware
    metadata:
      name: my-rate-limit
    spec:
      rateLimit:
        average: 100
        period: 1s
        burst: 200

This configuration means a new request can pass every 10ms. If you receive fewer than 1 request every 10ms, the bucket will fill up, eventually allowing a burst of up to 200 simultaneous requests.
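The arithmetic behind those numbers is short enough to check by hand. The variable names below mirror the middleware options, and the refill time is a derived figure that assumes no requests arrive while the bucket fills.

```python
average = 100          # requests allowed per period
period_seconds = 1.0   # the "1s" period expressed in seconds
burst = 200            # bucket size

rate_per_second = average / period_seconds               # steady-state rate
token_interval_ms = 1000 / rate_per_second               # time between new tokens
seconds_to_fill_empty_bucket = burst / rate_per_second   # idle time to regain a full burst
```

Under these assumptions a token becomes available every 10 ms, and an empty bucket needs about 2 seconds of silence before it can absorb a full burst of 200 again.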

Grouping Requests by Source

Grouping requests by their source and applying rate limiting is an effective way to prevent a small number of clients from overwhelming a system. Instead of limiting the system as a whole, this technique enforces rate limits per individual source, ensuring balanced resource usage.

For example, you can group requests by their IP address and apply rate limits to each IP. This prevents a single IP from sending too many requests, which could degrade the system’s performance. By limiting requests at the source level, the system gains more granular control, preventing any one source from monopolizing resources while still allowing others to access the system at normal rates.
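One way to picture per-source limiting is a separate bucket per key, created the first time a source is seen. The sketch below is an assumption-laden illustration (hypothetical class name, explicit timestamps, documentation-range IP addresses) and says nothing about how Traefik stores its buckets internally.

```python
class PerSourceLimiter:
    """Hypothetical limiter that keeps one token bucket per source key."""

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate    # tokens per second, per source
        self.burst = burst  # bucket size, per source
        self.state = {}     # source key -> (tokens, last_seen)

    def allow(self, source: str, now: float) -> bool:
        # A source seen for the first time starts with a full bucket.
        tokens, last = self.state.get(source, (float(self.burst), now))
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self.state[source] = (tokens, now)
        return allowed


limiter = PerSourceLimiter(rate=1, burst=2)
noisy = [limiter.allow("203.0.113.7", 0.0) for _ in range(3)]
quiet = limiter.allow("198.51.100.4", 0.0)
```

The noisy client exhausts its own bucket on the third request, while the other client is unaffected because its bucket is tracked separately.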

The ratelimit middleware allows requests to be grouped through its sourceCriterion option, which supports:

- ipStrategy: The client’s IP address (default strategy)

- requestHost: The host of the request

- requestHeaderName: The value of a specific request header

The ipStrategy relies on the de-facto standard X-Forwarded-For header to determine the client’s IP address. When a client connects directly to a server, its IP address is sent to the server. But if a client connection passes through any proxies, the server only sees the final proxy's IP address, which is often of little use. So, to provide a more useful client IP address to the server, the X-Forwarded-For request header is used. When a proxy receives a request, it appends the address of the peer it received the request from to this header.

The X-Forwarded-For header can be a useful tool, but it also poses security risks if not handled properly. Since the header is ordinary request data that each proxy in the chain simply appends to, a malicious client can send its own X-Forwarded-For value containing fake IP addresses. This can mislead the server into believing that the request originated from a different location, allowing attackers to potentially spoof their IP address and bypass security measures that rely on client IP verification.

To minimize this risk, it’s essential to only trust proxies under your control or those known to handle requests securely.

In Traefik, this is managed through the --entryPoints.web.forwardedHeaders.trustedIPs setting. If a request originates from an untrusted proxy, the X-Forwarded-For header will be unset. This ensures that only IP addresses from trusted sources are considered, preventing IP spoofing and maintaining the integrity of the real client IP address.
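The rule described above can be sketched as a small filter. This is an illustration of the idea only, not Traefik's code: the function name, the header dictionary, and the trusted range are assumptions made for the example.

```python
import ipaddress


def sanitize_forwarded_for(remote_addr: str, headers: dict, trusted_networks: list) -> dict:
    """Keep X-Forwarded-For only when the direct peer is a trusted proxy."""
    peer = ipaddress.ip_address(remote_addr)
    trusted = any(peer in ipaddress.ip_network(net) for net in trusted_networks)
    if trusted:
        return dict(headers)
    # Untrusted peer: drop the header so a spoofed value is never consulted.
    return {k: v for k, v in headers.items() if k.lower() != "x-forwarded-for"}


incoming = {"Host": "example.com", "X-Forwarded-For": "198.51.100.4"}
trusted_ranges = ["10.0.0.0/8"]  # hypothetical range for proxies you control

from_trusted_proxy = sanitize_forwarded_for("10.1.2.3", incoming, trusted_ranges)
from_unknown_peer = sanitize_forwarded_for("203.0.113.9", incoming, trusted_ranges)
```

A request relayed by a proxy inside the trusted range keeps its header, while the same header arriving from an unknown peer is discarded.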

When proxies sit in front of Traefik, you will need to configure at least one of these options:

- depth: Specify, from right to left, the nth IP address to use. This is needed to avoid spoofing.

- excludedIPs: Specify a list of IP addresses to exclude from the X-Forwarded-For header.

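For example, if the X-Forwarded-For header contains the value 10.0.0.1,11.0.0.1,12.0.0.1,13.0.0.1, a depth of 3 will use 11.0.0.1 as the client's IP address, because it is the third address counting from the right. According to the Traefik documentation, excludedIPs is ignored when depth is set. Used on its own, excludedIPs makes Traefik scan the header from right to left and pick the first address that is not in the list, so excluding 12.0.0.1 and 13.0.0.1 also yields 11.0.0.1.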

For this example, the middleware could be configured like this:

    apiVersion: traefik.io/v1alpha1
    kind: Middleware
    metadata:
      name: test-ratelimit-with-ip-strategy
    spec:
      rateLimit:
        average: 100
        period: 1s
        burst: 200
        sourceCriterion:
          ipStrategy:
            depth: 3
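The depth selection used by this configuration can be sketched as a small helper. The function names are hypothetical, and the second helper reflects an assumption about how an exclusion list behaves when it is used on its own (a right-to-left scan that skips excluded addresses); neither is Traefik's actual code.

```python
import ipaddress


def ip_by_depth(xff: str, depth: int) -> str:
    """Return the depth-th address counting from the right, or "" if absent."""
    addresses = [part.strip() for part in xff.split(",")]
    if depth < 1 or depth > len(addresses):
        return ""
    return addresses[-depth]


def first_not_excluded(xff: str, excluded: list) -> str:
    """Scan right to left and return the first address outside `excluded`."""
    networks = [ipaddress.ip_network(entry) for entry in excluded]
    for candidate in reversed([part.strip() for part in xff.split(",")]):
        address = ipaddress.ip_address(candidate)
        if not any(address in net for net in networks):
            return candidate
    return ""


header = "10.0.0.1,11.0.0.1,12.0.0.1,13.0.0.1"
by_depth = ip_by_depth(header, 3)
by_exclusion = first_not_excluded(header, ["12.0.0.1", "13.0.0.1"])
too_deep = ip_by_depth(header, 5)
```

Counting from the right matters because the rightmost entries are added by the proxies closest to your server, which are the ones you control. Anything further left may have been supplied by the client.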

Conclusion

Rate limiting is essential to protect your services against overwhelming traffic, but for enterprises, the challenge often goes beyond just managing individual servers. With distributed rate limiting through Traefik Hub, you can scale your traffic management across multiple gateway replicas, ensuring consistent protection and control no matter how distributed your infrastructure becomes.

By leveraging shared buckets across routers and applying limits at both global and granular levels, Traefik Hub helps enterprises maintain fairness, prevent resource monopolization, and optimize performance. This distributed approach offers robust protection against high-traffic events, making it ideal for large-scale operations where traffic spikes and resource demands are common.

While rate limiting is an essential tool in your security arsenal, it should be part of a broader strategy that includes other measures like Web Application Firewalls (WAF), active monitoring, and proper server configuration. By combining these layers, you can create a robust defense system that keeps your services running smoothly and securely in the face of various internet challenges.

As you implement rate limiting in your Traefik setup, consider your specific use case, traffic patterns, and resource constraints to fine-tune the settings for optimal performance. Regular monitoring and adjustments will help ensure that your rate-limiting strategy continues to meet your needs as your service grows and evolves.