Everything You Need to Know About Round Robin Load Balancing

If you've ever taken part in competitions of any kind, you might be familiar with round robin tournaments. In a round robin competition, each participant competes against every other participant in turn.

In cloud computing, the same principle of taking turns is applied to load balancing the traffic that flows from end users to an application's servers.

What is load balancing?

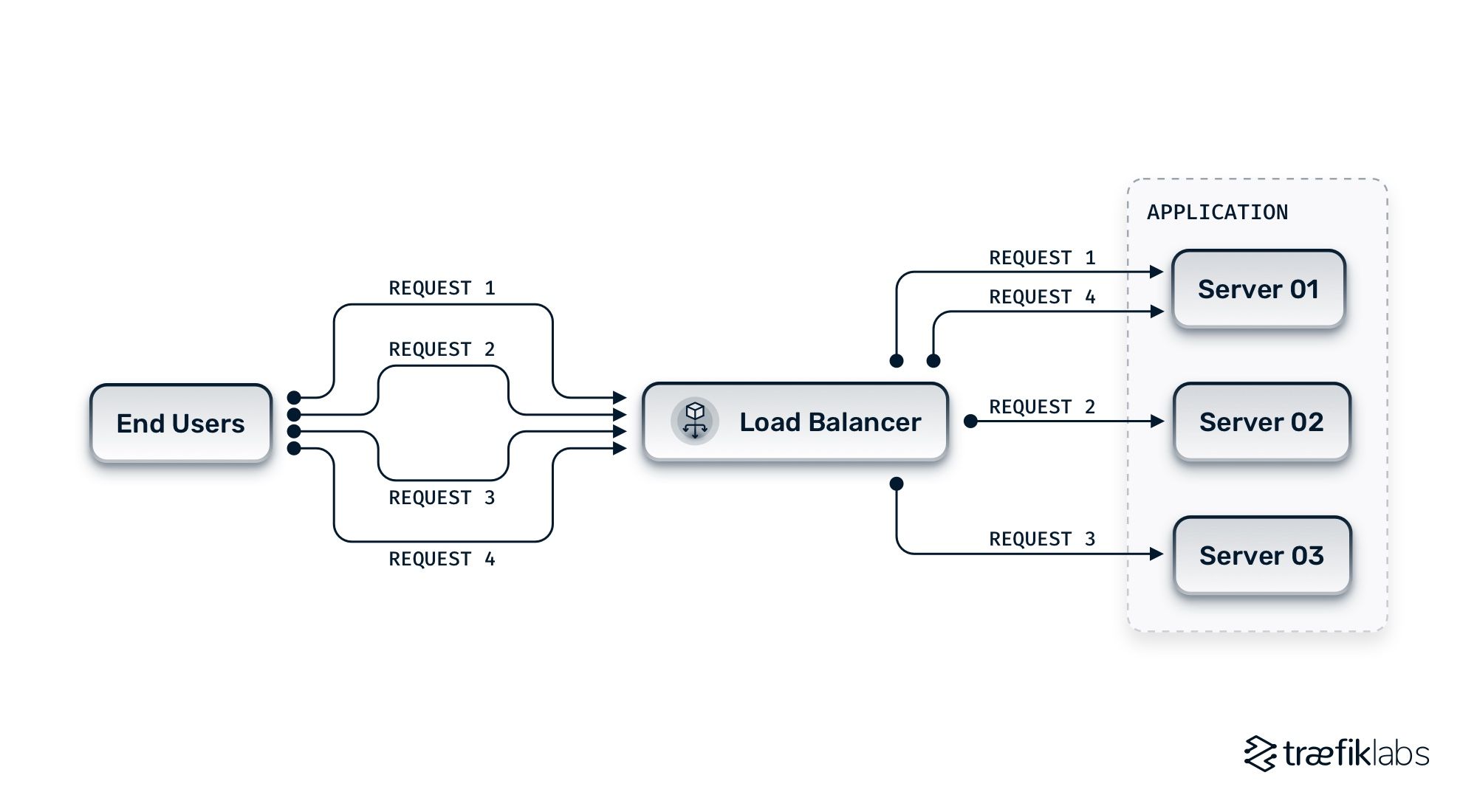

Let's first discuss load balancing and why it's so important. Load balancers distribute incoming requests across a group of servers so that each server receives an appropriate share. This keeps some servers from being sent more traffic than they can handle while others sit underused, which in turn prevents information systems from lagging or, in particularly bad cases, failing. For any system that runs on more than one server, load balancing is essential.

What is round robin load balancing?

Many techniques are used when load balancing traffic, and round robin is a key one. A round robin load balancer rotates through its list of servers: the first request goes to Server A, the next to Server B, and so on until the last server in the list, after which the rotation starts again at Server A.
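The rotation itself takes very little code. Here is a minimal Python sketch, assuming the balancer only needs to hold a list of server names; the class and method names are hypothetical and not taken from any particular load balancer.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation: A, B, C, A, B, C, ..."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the server list endlessly, in order.
        self._rotation = cycle(servers)

    def next_server(self):
        # Each call returns the next server in the list, wrapping
        # back to the first server after the last one.
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
print([balancer.next_server() for _ in range(5)])
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```

Note that nothing here looks at how busy a server is; every server simply gets the next turn.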

While some load balancing techniques monitor servers to find out which ones are at maximum capacity and which ones are not, round robin load balancing ignores current load and sends the same number of requests to each server. This makes it best suited to information systems whose servers all have the same capacity. Round robin is not blind to failures, however: a round robin load balancer can still check on each server as it sends traffic to it and skip any server that has failed, rotating among the remaining ones.
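Skipping a failed server fits naturally into the rotation. The Python sketch below assumes some separate mechanism (hypothetical here, represented by `mark_down` and `mark_up`) reports which servers are unavailable; the balancer just passes over those servers until they come back.

```python
class HealthAwareRoundRobin:
    """Round robin that skips servers currently marked as down.

    mark_down/mark_up are hypothetical stand-ins for whatever failure
    detection a real load balancer uses.
    """

    def __init__(self, servers):
        self._servers = list(servers)
        self._down = set()
        self._index = 0  # position of the next server to try

    def mark_down(self, server):
        self._down.add(server)

    def mark_up(self, server):
        self._down.discard(server)

    def next_server(self):
        # Try each server at most once per call, starting where the
        # rotation left off, and return the first one that is up.
        for _ in range(len(self._servers)):
            server = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if server not in self._down:
                return server
        raise RuntimeError("no healthy servers available")


balancer = HealthAwareRoundRobin(["server-a", "server-b", "server-c"])
balancer.mark_down("server-b")
print([balancer.next_server() for _ in range(4)])
# ['server-a', 'server-c', 'server-a', 'server-c']
```

Once `server-b` is marked up again, it rejoins the rotation at its usual position.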

Round robin is perhaps the most widely deployed load balancing technique, primarily because of its simplicity. Since client requests are assigned to available servers in a fixed cycle, the algorithm needed to do so is straightforward. For information systems with more complex needs, more advanced variants of round robin load balancing are available.

What is weighted round robin load balancing?

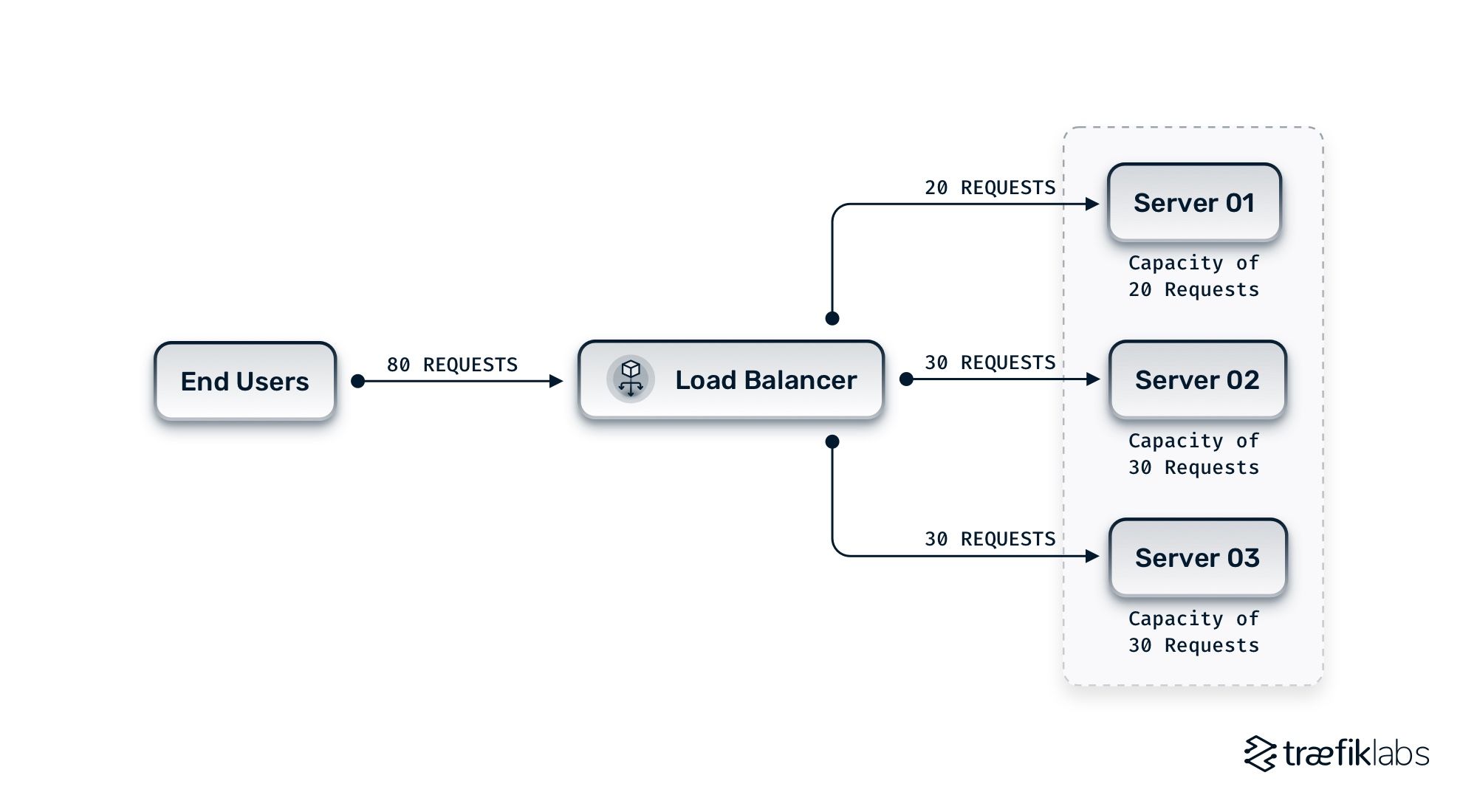

For information systems whose application servers differ in capacity, weighted round robin load balancing is an ideal solution. It keeps servers with smaller capacities from being overloaded and failing while letting servers with larger capacities handle a correspondingly larger share of the traffic. This, in turn, helps keep the system as a whole from lagging.

In weighted round robin load balancing, system administrators assign each application server a weight based on its capacity. Servers with larger capacities receive more requests, while those with smaller capacities receive fewer. For example, if Server A has a capacity of 20 requests, Server B a capacity of 30 requests, and Server C a capacity of 10 requests, then in every cycle of 60 requests a weighted round robin load balancer will send 20 to Server A, 30 to Server B, and 10 to Server C. Traffic is distributed in proportion to each server's capacity rather than split equally.
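One way to implement this is the "smooth" weighted round robin variant sketched here in Python. It is a swapped-in illustration, not necessarily how any particular load balancer (Traefik included) schedules requests, and it assumes each server's weight is simply its capacity from the example. Rather than sending 20 requests in a row to Server A, it interleaves the servers while still giving each one exactly its weight's share of every 60-request cycle.

```python
from collections import Counter


class WeightedRoundRobin:
    """Smooth weighted round robin: each server is picked in proportion
    to its weight, with picks interleaved rather than sent in bursts."""

    def __init__(self, weights):
        # weights: dict mapping server name -> positive weight
        # (assumed here to equal the server's capacity).
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self._weights = dict(weights)
        self._total = sum(self._weights.values())
        self._current = {server: 0 for server in self._weights}

    def next_server(self):
        # Raise every server's running score by its weight...
        for server, weight in self._weights.items():
            self._current[server] += weight
        # ...pick the server with the highest score...
        chosen = max(self._current, key=self._current.get)
        # ...and charge it the total weight so the others catch up.
        self._current[chosen] -= self._total
        return chosen


balancer = WeightedRoundRobin({"server-a": 20, "server-b": 30, "server-c": 10})
counts = Counter(balancer.next_server() for _ in range(60))
print(counts["server-a"], counts["server-b"], counts["server-c"])
# 20 30 10
```

With the weights scaled down to 2, 3, and 1, the same scheduler produces the order B, A, B, C, A, B, so Server B's turns are spread out rather than clustered. The cost is a little extra bookkeeping (one running score per server) compared with simply repeating each server in a list as many times as its weight.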

How to successfully implement round robin load balancing in Kubernetes

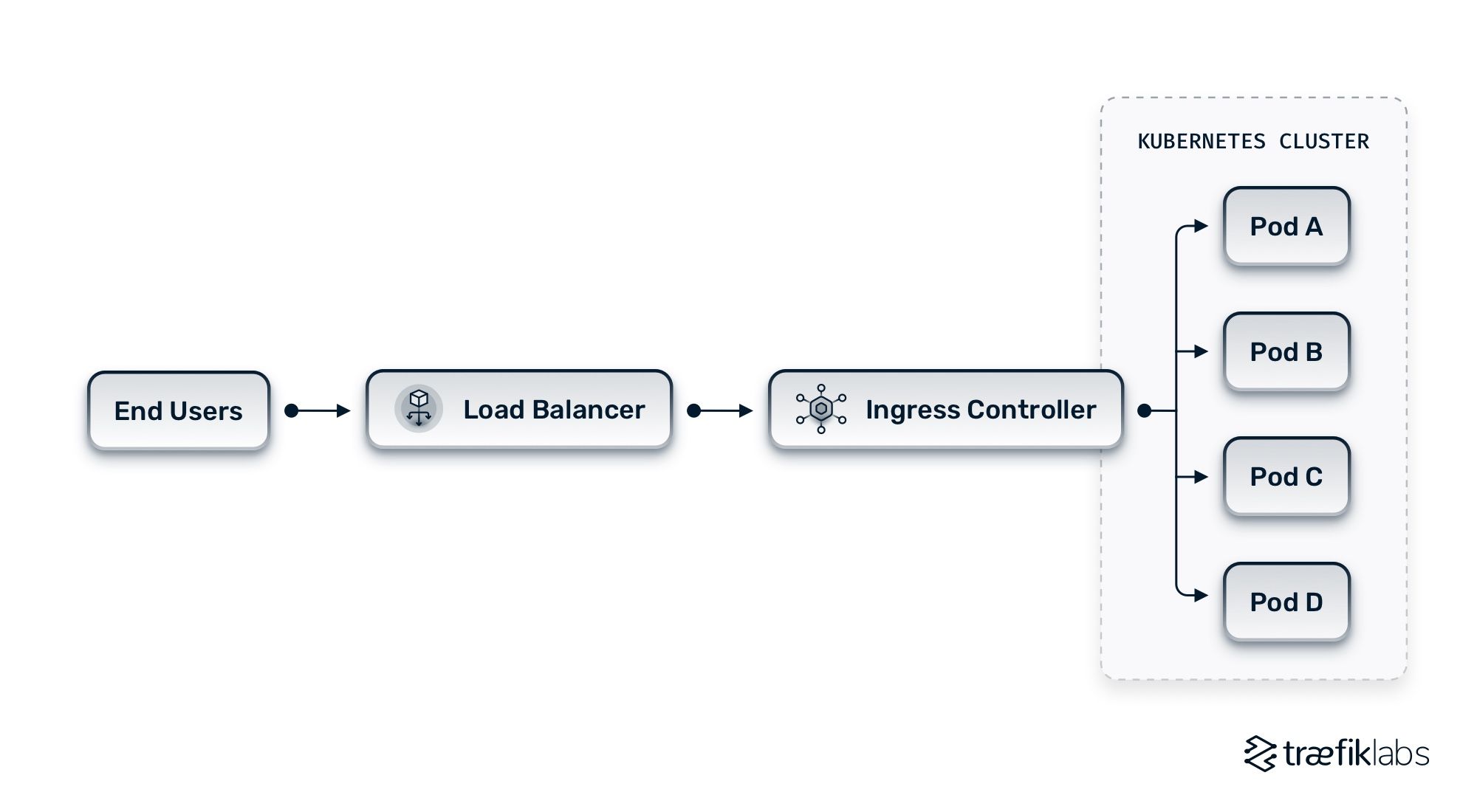

At layer 7 (the application layer), applications running on Kubernetes typically configure load balancing by placing an ingress controller in front of their clusters. Each ingress controller offers its own load balancing capabilities and techniques, which may include round robin and weighted round robin load balancing.

Traefik Enterprise is an example of a high-performing ingress controller that offers round robin and weighted round robin load balancing strategies for directing traffic to back-end clusters. It combines ingress control with an API gateway and service mesh to enable powerful cloud native networking.

Interested in giving Traefik Enterprise a shot? Sign up for a free 30-day trial or request a demo to get started.