Load Balancing 101: Network vs. Application and Everything You Wanted to Know

Computer networking, the way computers link together to share data, has always been an integral part of computing. Without a means to exchange data across a network, a computer has little use in daily life, and without a means to balance traffic across that network, the applications it reaches struggle to stay available. Networking enables the internet and the SaaS-based applications that come with it.

What is a load balancer?

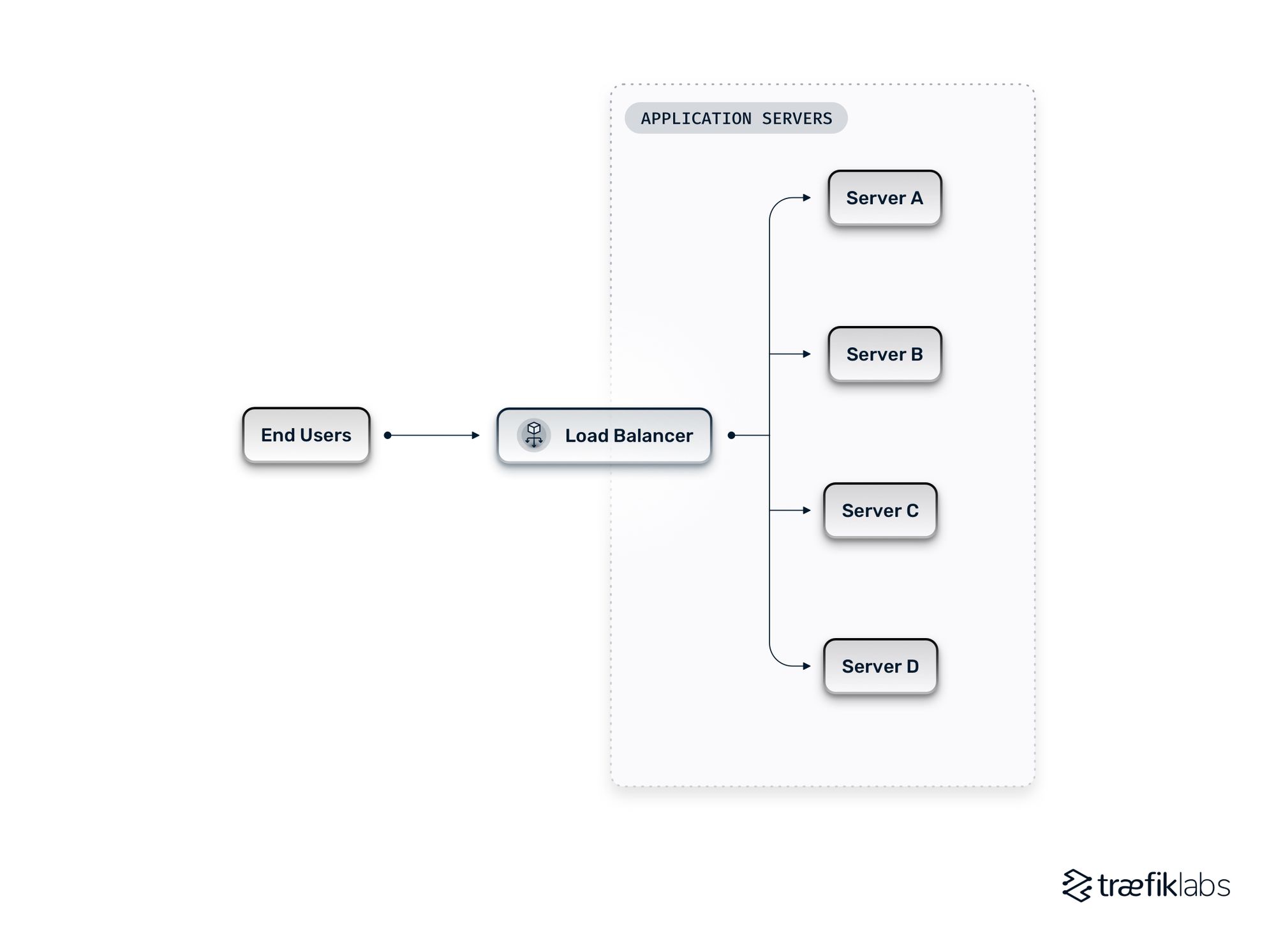

A load balancer is a mechanism in both cloud and on-premises networking architectures that calculates how to distribute traffic across multiple servers, applications, services, or clusters and defines to which instance a given request will travel. It manages the flow of information between servers (which could be physical or virtualized, and in on-prem or cloud environments) and endpoint devices (which could be a smartphone, laptop, or any IoT device).

Load balancers form a crucial part of most networks, and distributed systems that serve traffic at scale depend on them. They create balance by ensuring each server receives an appropriate share of the load from end users. This prevents some servers from bearing too much load, and failing or slowing the application as a result, while other servers receive little or none. Many load balancers also perform health checks to confirm that a server is responding, and some track each server's current load against its capacity, using this information to distribute traffic fairly between servers. Effective load balancers optimize application performance and availability.
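As a rough illustration of the health-check idea above, here is a minimal Python sketch. It assumes a health check is nothing more than "can a TCP connection be opened"; the function names, the addresses, and the stub probe are hypothetical and chosen only for this example, and real load balancers usually offer richer checks.

```python
import socket


def tcp_probe(address, timeout=1.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection(address, timeout=timeout):
            return True
    except OSError:
        return False


def healthy_backends(backends, probe=tcp_probe):
    """Keep only the backends whose health check currently passes."""
    return [backend for backend in backends if probe(backend)]


# Hypothetical pool; a stub probe stands in for real network checks here.
pool = [("10.0.0.11", 8080), ("10.0.0.12", 8080), ("10.0.0.13", 8080)]
down = {("10.0.0.12", 8080)}
available = healthy_backends(pool, probe=lambda backend: backend not in down)
print(available)  # [('10.0.0.11', 8080), ('10.0.0.13', 8080)]
```

Traffic would then be distributed only across the servers in `available`, so a failed server stops receiving requests until its check passes again.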

Having a load balancer in our network has significant benefits:

- Reduced downtime: Incoming requests are processed by more than one backend server, which helps us build highly available environments that are less prone to the failure of a single server.

- Scalability: Having more backend servers allows us to handle more load.

- Flexibility: We can implement more advanced techniques to distribute network traffic and design more reliable environments.

Layer 4 vs. layer 7 load balancing

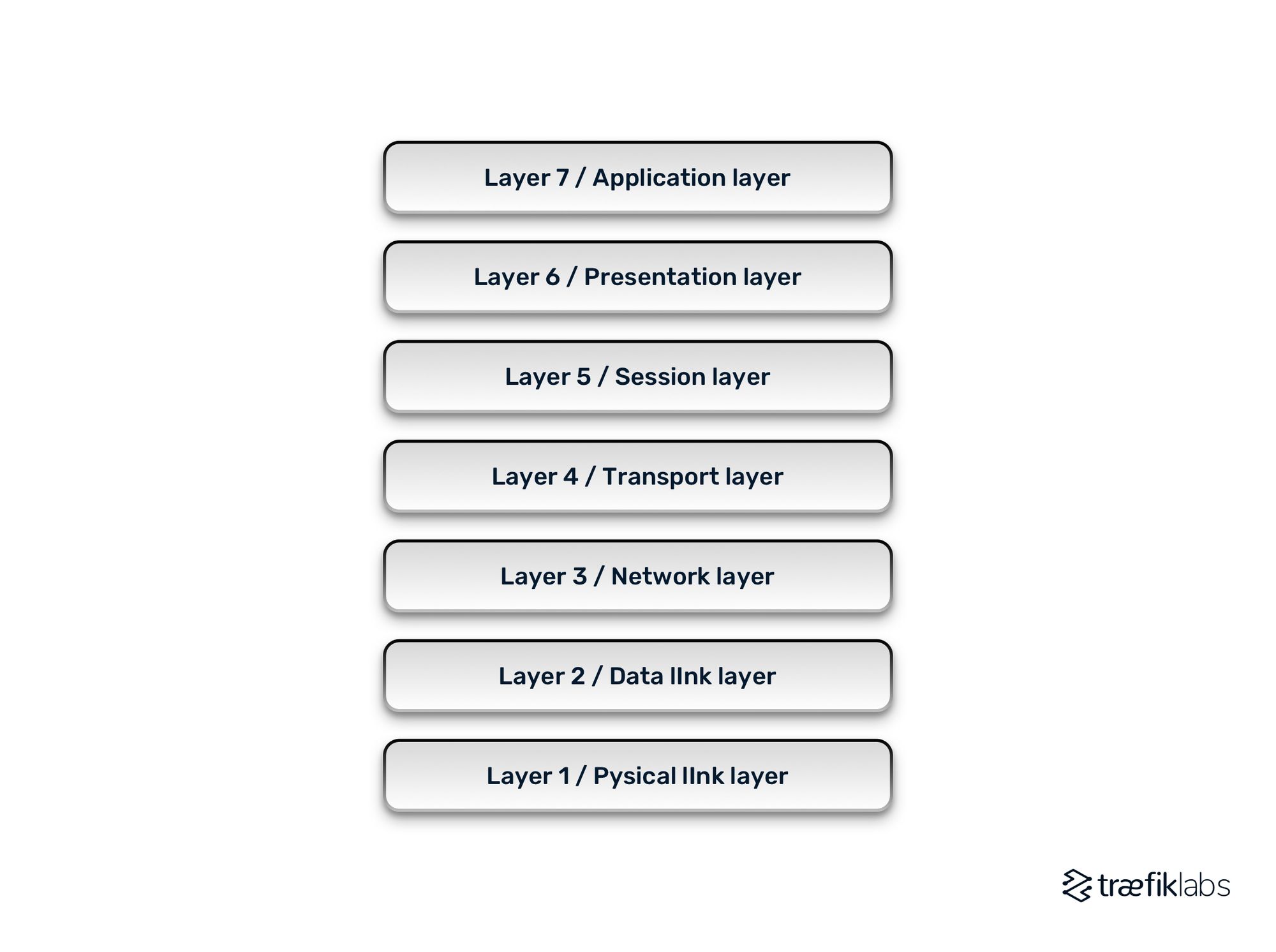

Load balancing has its roots in the 1990s when hardware appliances distributed traffic across networks. As information technology has become increasingly virtualized, load balancers have progressed into software. Load balancing today exists in both the transport and application layers of the Open Systems Interconnection (OSI) model, which is a standardized reference for how applications communicate over a network.

Layer 4 load balancing

Layer 4 is the transport layer of the OSI model, and its load balancers forward network packets to and from servers based on the source and destination IP addresses and ports of the connection. The client connects to the load balancer, which then selects a backend server and forwards the connection to it.

A layer 4 load balancer doesn’t have visibility into the content of the connection itself, which is often encrypted. The client cannot see which server a connection has been routed to. Layer 4 load balancers handle Transmission Control Protocol (TCP) or User Datagram Protocol (UDP) traffic. TCP is connection-oriented and delivers data reliably and in order, while UDP is connectionless and sends individual datagrams without delivery guarantees.
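To make the layer 4 decision concrete, the sketch below picks a backend using only connection metadata (protocol, addresses, and ports) and never looks at the payload. Hashing the connection tuple is one common approach, not the only one; the backend addresses and the function name are hypothetical.

```python
import hashlib

# Hypothetical backend pool for illustration.
BACKENDS = ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"]


def pick_backend_l4(src_ip, src_port, dst_ip, dst_port, protocol="tcp"):
    """Choose a backend from the connection tuple alone.

    Hashing the tuple means every packet of the same connection
    maps to the same backend without inspecting any payload.
    """
    key = f"{protocol}:{src_ip}:{src_port}:{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(BACKENDS)
    return BACKENDS[index]


first = pick_backend_l4("203.0.113.7", 51514, "198.51.100.1", 443)
again = pick_backend_l4("203.0.113.7", 51514, "198.51.100.1", 443)
print(first == again)  # True: the same connection always maps to one backend
```

One trade-off of this simple modulo scheme is that changing the number of backends remaps most connections; production systems often use connection tracking or consistent hashing to limit that.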

Layer 7 load balancing

Layer 7 is the application layer in the OSI model, and it includes many different protocols, such as HTTP, MQTT, SSH, FTP, SMTP, POP3, and IMAP. More often than not, layer 7 load balancers handle HTTP requests. What is special about layer 7 load balancers is that they have visibility into the actual content of a request, such as its headers, URL, and body.

Layer 7 load balancers route network traffic in a much more sophisticated way than layer 4 load balancers, but they require far more CPU and can reduce performance and increase cost as a result. It’s up to you to evaluate which is better for your use case.
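By contrast with layer 4, a layer 7 decision can depend on what the request actually says. The following sketch routes HTTP requests by host name and path prefix, with the longest matching prefix winning. The routing table, host names, and pool names are hypothetical, and real proxies support many more match criteria.

```python
# Hypothetical routing table: (host, path prefix, backend pool name).
ROUTES = [
    ("api.example.com", "/v1/", "api-v1"),
    ("api.example.com", "/", "api-default"),
    ("www.example.com", "/static/", "static-assets"),
]


def route_request(host, path):
    """Return the backend pool for a request, or None if nothing matches.

    Among the rules matching the host, the longest path prefix wins.
    """
    matches = [
        (prefix, pool)
        for rule_host, prefix, pool in ROUTES
        if rule_host == host and path.startswith(prefix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda match: len(match[0]))[1]


print(route_request("api.example.com", "/v1/users"))  # api-v1
print(route_request("api.example.com", "/health"))    # api-default
print(route_request("www.example.com", "/about"))     # None
```

Parsing and matching on each request is part of why layer 7 balancing costs more CPU than forwarding packets at layer 4.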

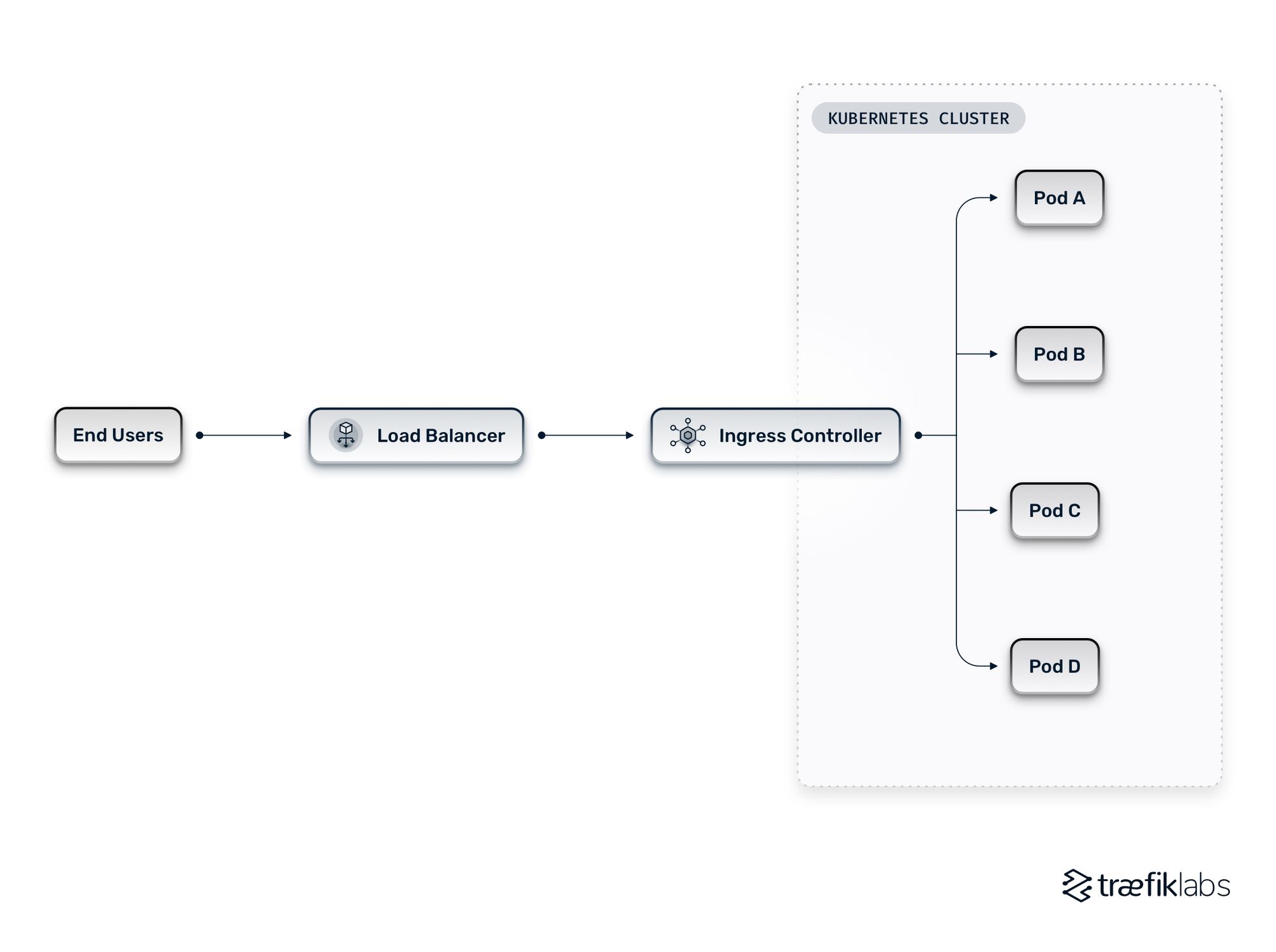

Advanced Kubernetes load balancing

Load balancing becomes particularly complicated when performed in containerized applications that consist of many separate components. Different systems will therefore layer themselves on top of one another to provide advanced load balancing rules.

At layer 7, Kubernetes configures load balancing between multiple backends through ingress controllers. Layer 4 load balancing is generally taken care of by the public cloud provider, as each has its own load balancing system. Organizations managing environments on-prem take care of those load balancing capabilities themselves.

In Kubernetes, ingress controllers differentiate themselves by offering advanced load balancing capabilities. Instead of just passing data between the end user and the application, these capabilities allow you to apply techniques such as rate limiting, which means you can make the most of your infrastructure and the resources it consumes.

What is rate limiting?

Rate limiting is an advanced form of traffic shaping, which is essentially how you control the flow and rate of traffic coming into your application from the internet. Rate limiting middleware allows you to match the flow of traffic to your infrastructure’s capacity. This carries two big advantages.

Firstly, rate limiting allows you to make sure your infrastructure never becomes overloaded with requests and lags before failing. Without rate limiting, traffic entering your application would line up in a queue and create congestion in your reverse proxy. Likewise, rate limiting is an essential part of any security strategy as it minimizes the effects of DDoS attacks on an infrastructure.

Secondly, rate limiting gives you the ability to make sure traffic is distributed evenly between different servers. It means you can make sure certain proxies don’t receive more traffic than they should compared to others and that your proxies don’t favor certain sources of traffic from the internet over others.

How does rate limiting work? At its simplest, rate limiting middleware lives within your reverse proxy, which sits in between the internet and your microservices. Traffic coming in from the internet is filtered through your rate limiting middleware. If your services are receiving more traffic than they can healthily accommodate, your rate limiting middleware will reject the excess requests and send them back to their source (for HTTP, typically with a 429 Too Many Requests response). Your rate limiting middleware will also make sure the traffic it lets through is distributed well amongst your many services.

Let’s illustrate an example of rate limiting. You can configure your middleware to allow, for example, an average of ten requests per second with a maximum burst of fifty requests. Up to fifty requests can then be let through at once, after which capacity refills at ten requests per second; any request that arrives while no capacity remains is automatically rejected. Likewise, you can set limits per source so that, for example, ten requests are accepted from Source A and ten from Source B.
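The numbers in this example map naturally onto a token bucket, which is one common way to implement rate limiting (the article does not say which algorithm a given middleware uses, so treat this as an assumption). In the sketch below, `rate`, `burst`, and the injectable `clock` are names invented for illustration; the fake clock exists only to make the demonstration deterministic.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)  # start with a full bucket
        self.updated = clock()

    def allow(self):
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerSourceLimiter:
    """Keep an independent bucket for each traffic source."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate, self.burst, self.clock = rate, burst, clock
        self.buckets = {}

    def allow(self, source):
        if source not in self.buckets:
            self.buckets[source] = TokenBucket(self.rate, self.burst, self.clock)
        return self.buckets[source].allow()


# Deterministic demo with a fake clock: 60 requests arrive at the same instant.
fake_now = [0.0]
bucket = TokenBucket(rate=10, burst=50, clock=lambda: fake_now[0])
accepted = sum(bucket.allow() for _ in range(60))
print(accepted)  # 50: the burst is served, the remaining 10 are rejected

fake_now[0] += 1.0  # one second later, ten tokens have been refilled
print(sum(bucket.allow() for _ in range(20)))  # 10
```

`PerSourceLimiter` corresponds to the Source A and Source B case: each source draws from its own bucket, so one busy source cannot exhaust another's allowance.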

What are other examples of advanced load balancing?

Rate limiting is one example of a technique that strengthens advanced Kubernetes architectures. Ingress controllers also enable several advanced load balancing mechanisms, including:

- Weighted load balancing: Weighted load balancers allow users to define a weight for each server and rebalance traffic when a given server becomes overly crowded. It gives users a fair amount of control at any given moment.

- Round robin load balancing: Round robin load balancers rotate which server a request will be routed to. Traffic is directed to Server A, then Server B, and then Server C before repeating the cycle all over again. Round robin load balancers send the same number of requests to each backend regardless of its current load. This mechanism is ideal when servers match each other in capacity.

- Least connection load balancing: This mechanism directs traffic to the backend server with the fewest active connections. It is ideal when traffic includes a large number of persistent connections that are unevenly distributed between servers.

- Mirror load balancing: Traffic mirroring copies inbound and outbound traffic from the network interfaces that are attached to your instances.

This list includes some of the most popular load balancing mechanisms, but there are many more out there. Many applications will use several load balancing mechanisms at once.
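The selection rules behind three of the mechanisms listed above are short enough to sketch in Python. These are deliberately naive versions under stated assumptions: server names and weights are hypothetical, and the weighted variant simply repeats each server according to its weight, whereas production implementations typically interleave picks more smoothly and also take health into account.

```python
import itertools


def round_robin(servers):
    """Yield servers in a fixed rotation: A, B, C, A, B, C, ..."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in proportion to their weights (naive expansion)."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connections(active_connections):
    """Return the server that currently has the fewest active connections."""
    return min(active_connections, key=active_connections.get)


rr = round_robin(["A", "B", "C"])
print([next(rr) for _ in range(5)])  # ['A', 'B', 'C', 'A', 'B']

wrr = weighted_round_robin({"A": 3, "B": 1})
picks = [next(wrr) for _ in range(8)]
print(picks.count("A"), picks.count("B"))  # 6 2

print(least_connections({"A": 12, "B": 3, "C": 7}))  # B
```

Round robin needs no knowledge of the servers at all, while least connections requires the balancer to track live connection counts, which is the extra cost of adapting to uneven load.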

Your choice of ingress controller will affect your load balancing capabilities. Traefik Proxy automates load balancing, including a powerful set of middlewares for both HTTP and TCP load balancing. It includes weighted round robin, round robin, and mirror mechanisms. For organizations with complex distributed systems, Traefik Enterprise includes advanced features.

Load balancers are integral to any computer networking strategy. If you want to see Traefik Enterprise in action, don’t hesitate to contact us and book a demo to discover how Traefik Enterprise can work for you.
