What is a Kubernetes Ingress Controller, and How is it Different from a Kubernetes Ingress?

Kubernetes’s adoption continues to skyrocket. As organizations create more and more clusters, Kubernetes Ingresses and ingress controllers become more critical. Let’s look at what they are and why they are such a crucial concept in Kubernetes.

What is a Kubernetes Ingress?

While Pods within a Kubernetes cluster can easily communicate with each other, by default they are not accessible from external networks. A Kubernetes Ingress is an API object that describes how traffic from outside the cluster, such as requests from the internet, should reach the cluster's internal Services, which in turn forward requests to groups of Pods. An Ingress does nothing on its own. It is a configuration request addressed to an ingress controller, letting the user define how external clients are routed to the cluster's internal Services. The ingress controller watches for this request and adjusts its own configuration to do what the user asks.

Every ingress controller has its own configuration syntax, and the beauty of the Ingress resource is that it acts as an abstraction layer on top of those specifics. A user doesn't need to know which ingress controller runs in a cluster: as long as an Ingress uses only standard fields, it should produce the same routing in any cluster, whichever ingress controller is installed.

In other words, Kubernetes Ingresses define how the cluster routes layer 7 (L7) traffic, that is, HTTP and HTTPS requests. Beyond routing, they can also declare TLS termination: an Ingress can reference a Secret holding the certificate and private key that the ingress controller uses to serve HTTPS for the listed hosts.
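Such an Ingress can be sketched as data. The Python below builds a `networking.k8s.io/v1` Ingress as a plain dictionary and prints it as JSON, which the Kubernetes API accepts just like YAML. The host `shop.example.com`, the Services `web` and `api`, the Secret `shop-tls`, and the class name `traefik` are hypothetical placeholders.

```python
import json


def build_ingress() -> dict:
    """Return a networking.k8s.io/v1 Ingress manifest as a plain dict.

    All names (host, Services, Secret, class) are hypothetical placeholders.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "shop", "namespace": "default"},
        "spec": {
            # Selects which ingress controller should act on this Ingress.
            "ingressClassName": "traefik",
            # TLS termination: the controller serves the certificate stored
            # in this Secret for the listed hosts.
            "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
            "rules": [
                {
                    "host": "shop.example.com",
                    "http": {
                        "paths": [
                            {
                                "path": "/api",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": "api", "port": {"number": 8080}}},
                            },
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": "web", "port": {"number": 80}}},
                            },
                        ]
                    },
                }
            ],
        },
    }


# JSON output like this can be saved to a file and applied with `kubectl apply -f`.
print(json.dumps(build_ingress(), indent=2))
```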

What is a Kubernetes ingress controller?

An ingress controller acts as a reverse proxy and load balancer that implements the rules declared in Kubernetes Ingress resources. It adds a layer of abstraction to traffic routing, accepting traffic from outside the Kubernetes cluster and load balancing it across Pods running inside it. It converts the configuration in Ingress resources into routing rules that its reverse proxy can recognize and apply.
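The load-balancing half of that role can be illustrated with a minimal sketch, assuming a simple round-robin policy and hypothetical Pod endpoint addresses. Real controllers offer other strategies and keep their endpoint lists up to date from the Kubernetes API rather than from a hard-coded list.

```python
import itertools
from typing import Dict, Iterator, List


class RoundRobinBalancer:
    """Hypothetical balancer that cycles through each Service's Pod endpoints."""

    def __init__(self, endpoints: Dict[str, List[str]]) -> None:
        # One independent round-robin cycle per Service name.
        self._cycles: Dict[str, Iterator[str]] = {
            service: itertools.cycle(addrs) for service, addrs in endpoints.items()
        }

    def pick(self, service: str) -> str:
        """Return the next Pod endpoint ("ip:port") for the given Service."""
        return next(self._cycles[service])


balancer = RoundRobinBalancer({"web": ["10.0.1.5:80", "10.0.2.7:80", "10.0.3.9:80"]})
print([balancer.pick("web") for _ in range(4)])
# → ['10.0.1.5:80', '10.0.2.7:80', '10.0.3.9:80', '10.0.1.5:80']
```

Because `itertools.cycle` works on a snapshot of the list, a controller built this way would rebuild the balancer whenever the set of Pods behind a Service changes.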

How do Kubernetes Ingresses and ingress controllers work?

A Kubernetes ingress controller follows the same pattern as a Deployment controller (or any other Kubernetes controller, for that matter). It watches Ingress resources in the cluster and continuously works to bring the actual routing configuration in line with the state the user requested.
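Stripped of the Kubernetes API plumbing, that pattern is a reconcile step: compare the desired state with the current state and emit the changes needed to converge. The sketch below assumes routes are keyed by a hypothetical `(host, path)` tuple and mapped to a backend string such as `"web:80"`; a real controller would run a step like this whenever a watch event arrives, then apply the resulting actions to its proxy.

```python
from typing import Dict, List, Tuple

# Hypothetical route key (host, path) mapped to a backend such as "web:80".
Routes = Dict[Tuple[str, str], str]


def reconcile(desired: Routes, current: Routes) -> List[str]:
    """Return the actions that move the proxy's current routes to the
    desired routes declared by Ingress resources."""
    actions: List[str] = []
    for (host, path), backend in sorted(desired.items()):
        if (host, path) not in current:
            actions.append(f"add {host}{path} -> {backend}")
        elif current[(host, path)] != backend:
            actions.append(f"update {host}{path} -> {backend}")
    for host, path in sorted(current):
        if (host, path) not in desired:
            actions.append(f"delete {host}{path}")
    return actions


desired = {("shop.example.com", "/"): "web:80", ("shop.example.com", "/api"): "api:8080"}
current = {("shop.example.com", "/"): "web:8080", ("old.example.com", "/"): "legacy:80"}
print(reconcile(desired, current))
# → ['update shop.example.com/ -> web:80', 'add shop.example.com/api -> api:8080', 'delete old.example.com/']
```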

A Kubernetes cluster can run multiple ingress controllers; each Ingress indicates which one should handle it through its ingressClassName field (or, in older manifests, the kubernetes.io/ingress.class annotation). When the user creates, updates, or deletes an Ingress, the matching ingress controller receives the event and reads the configuration from the Ingress specification and annotations. It then converts the YAML or JSON configuration expressed by the user into configuration its reverse proxy can understand, and applies it.
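The translation step can be sketched as a function that receives an Ingress object from an event, checks whether it belongs to this controller's class, and flattens its rules into a route table. The `translate` function and its `(host, path) -> "service:port"` output format are hypothetical; default-class handling and the legacy annotation are deliberately left out.

```python
from typing import Dict, Optional, Tuple

# (host, path prefix) -> "service:port"; a hypothetical internal format.
RouteTable = Dict[Tuple[str, str], str]


def translate(ingress: dict, my_class: str) -> Optional[RouteTable]:
    """Translate one networking.k8s.io/v1 Ingress into a flat route table.

    Returns None when the Ingress names another ingress class, meaning a
    different controller in the cluster is responsible for it.
    """
    spec = ingress.get("spec", {})
    if spec.get("ingressClassName") != my_class:
        return None
    routes: RouteTable = {}
    for rule in spec.get("rules", []):
        host = rule.get("host", "*")  # a rule without a host matches any host
        for path in rule.get("http", {}).get("paths", []):
            service = path["backend"]["service"]
            # A backend port is given either by number or by name.
            port = service["port"].get("number", service["port"].get("name"))
            routes[(host, path.get("path", "/"))] = f"{service['name']}:{port}"
    return routes


# A trimmed-down Ingress, as the controller might receive it from a watch event.
event_object = {
    "metadata": {"name": "shop"},
    "spec": {
        "ingressClassName": "traefik",
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [
                {"path": "/api", "pathType": "Prefix",
                 "backend": {"service": {"name": "api", "port": {"number": 8080}}}},
            ]},
        }],
    },
}
print(translate(event_object, "traefik"))  # {('shop.example.com', '/api'): 'api:8080'}
print(translate(event_object, "nginx"))    # None: another controller handles it
```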

What are the benefits of an ingress controller compared to its alternatives?

Let’s take a step back and discuss the core problem Kubernetes ingress controllers address.

Cloud native applications are composed of multiple Kubernetes Pods, each with its own IP address. Kubernetes Service resources give groups of Pods a stable address that is easily reachable from within the cluster. By default, however, Services are not reachable from outside the cluster, so they are not exposed to the internet.

Kubernetes offers several Service types that make Pods reachable in different ways. Each is suited to different scenarios.

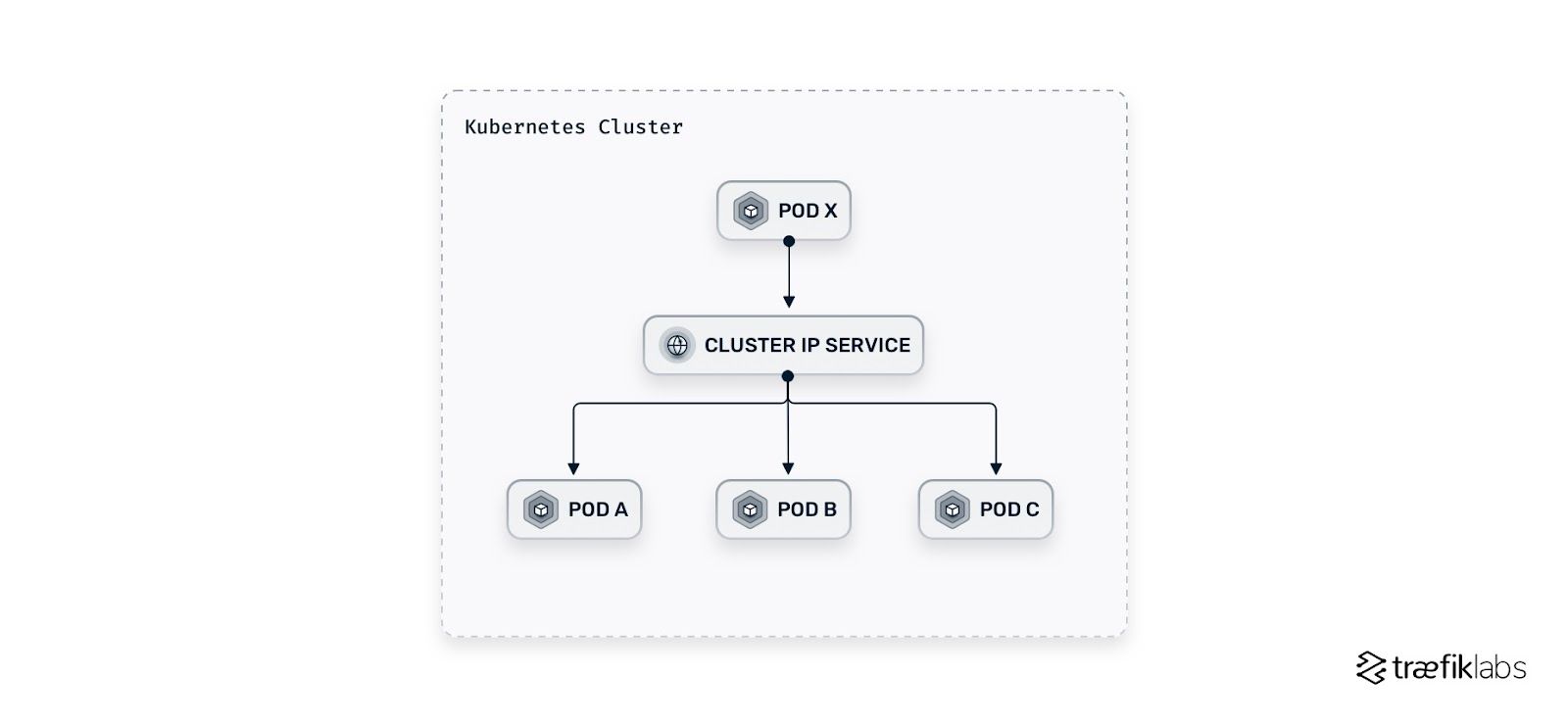

ClusterIP Service

A ClusterIP Service is exposed on an internal cluster IP address and is reachable only from within the cluster. It is the default Service type in Kubernetes.
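A ClusterIP Service can be sketched as the manifest below, built as a Python dictionary; the name `web`, the `app` label and the ports are hypothetical. Inside the cluster, clients typically reach such a Service through DNS, and the helper assumes the default `cluster.local` cluster domain, which some clusters change.

```python
def cluster_ip_service(name: str, app: str, port: int, target_port: int) -> dict:
    """Build a v1 Service of type ClusterIP for Pods labeled app=<app>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",  # optional: ClusterIP is the default type
            "selector": {"app": app},
            # Clients in the cluster connect to `port`; traffic is forwarded
            # to `target_port` on one of the selected Pods.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """In-cluster DNS name of a Service, assuming the given cluster domain."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


print(cluster_ip_service("web", "web", 80, 8080)["spec"]["type"])  # ClusterIP
print(service_dns_name("web", "default"))  # web.default.svc.cluster.local
```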

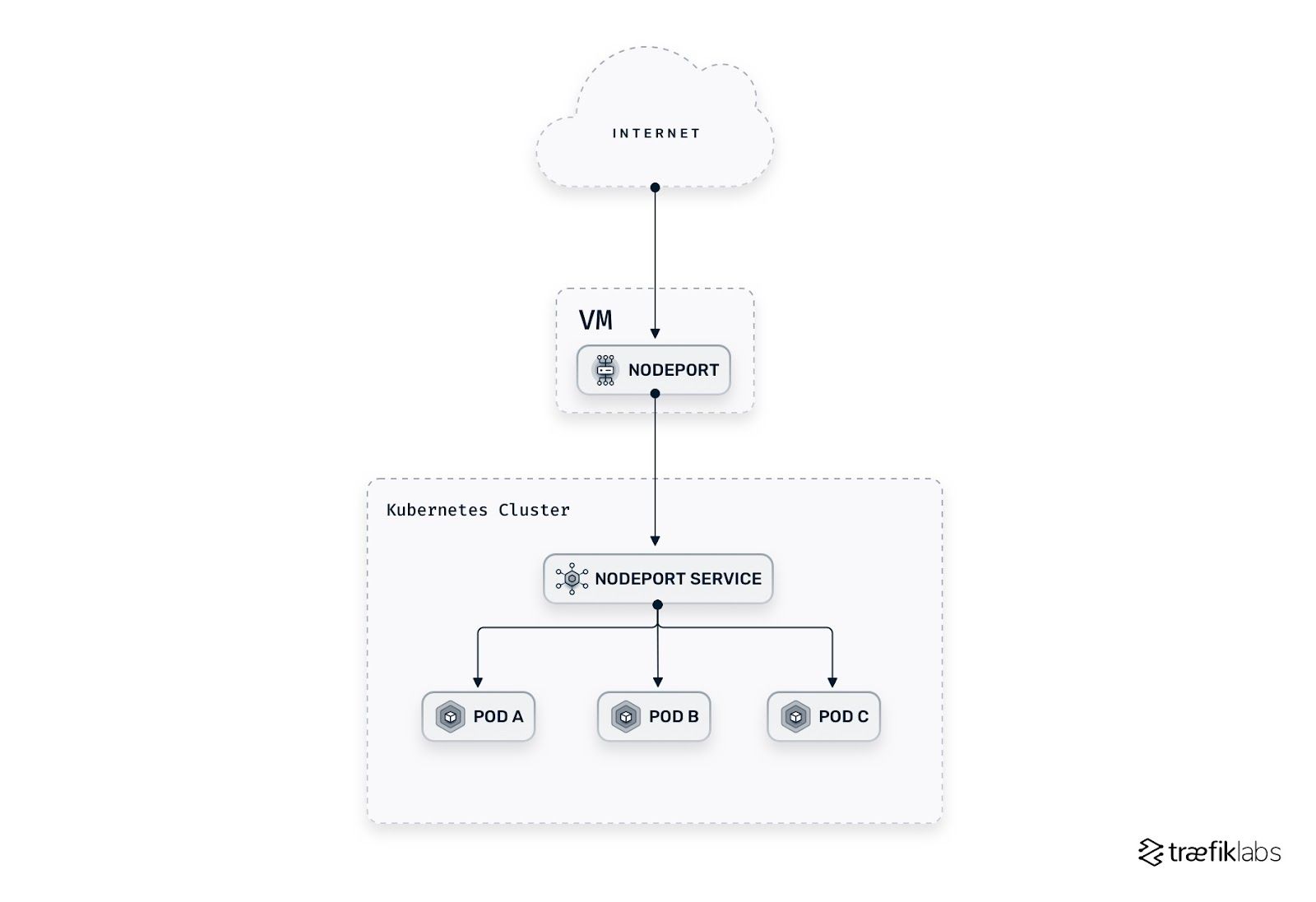

NodePort Service

A NodePort Service asks Kubernetes to open the same static port on every cluster node, taken from a high port range (30000 to 32767 by default). The Service is then reachable on that port at each node's IP address, and traffic arriving there is routed to a ClusterIP Service that Kubernetes creates automatically.
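The sketch below builds a NodePort Service manifest and rejects a requested `nodePort` outside the default range; the names and ports are hypothetical. If `nodePort` is omitted, Kubernetes allocates one from the range itself, and cluster administrators can change the range with the API server's `--service-node-port-range` flag.

```python
# Default NodePort range, 30000 to 32767 inclusive.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def node_port_service(name: str, app: str, port: int, node_port: int,
                      allowed: range = DEFAULT_NODE_PORT_RANGE) -> dict:
    """Build a v1 Service of type NodePort, rejecting ports outside the range."""
    if node_port not in allowed:
        raise ValueError(f"nodePort {node_port} outside {allowed.start}-{allowed.stop - 1}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app},
            # Reachable at <any node IP>:node_port from outside the cluster,
            # and at the Service's cluster IP on `port` from inside it.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": port,
                       "nodePort": node_port}],
        },
    }


print(node_port_service("web", "web", 80, 30080)["spec"]["ports"])
```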

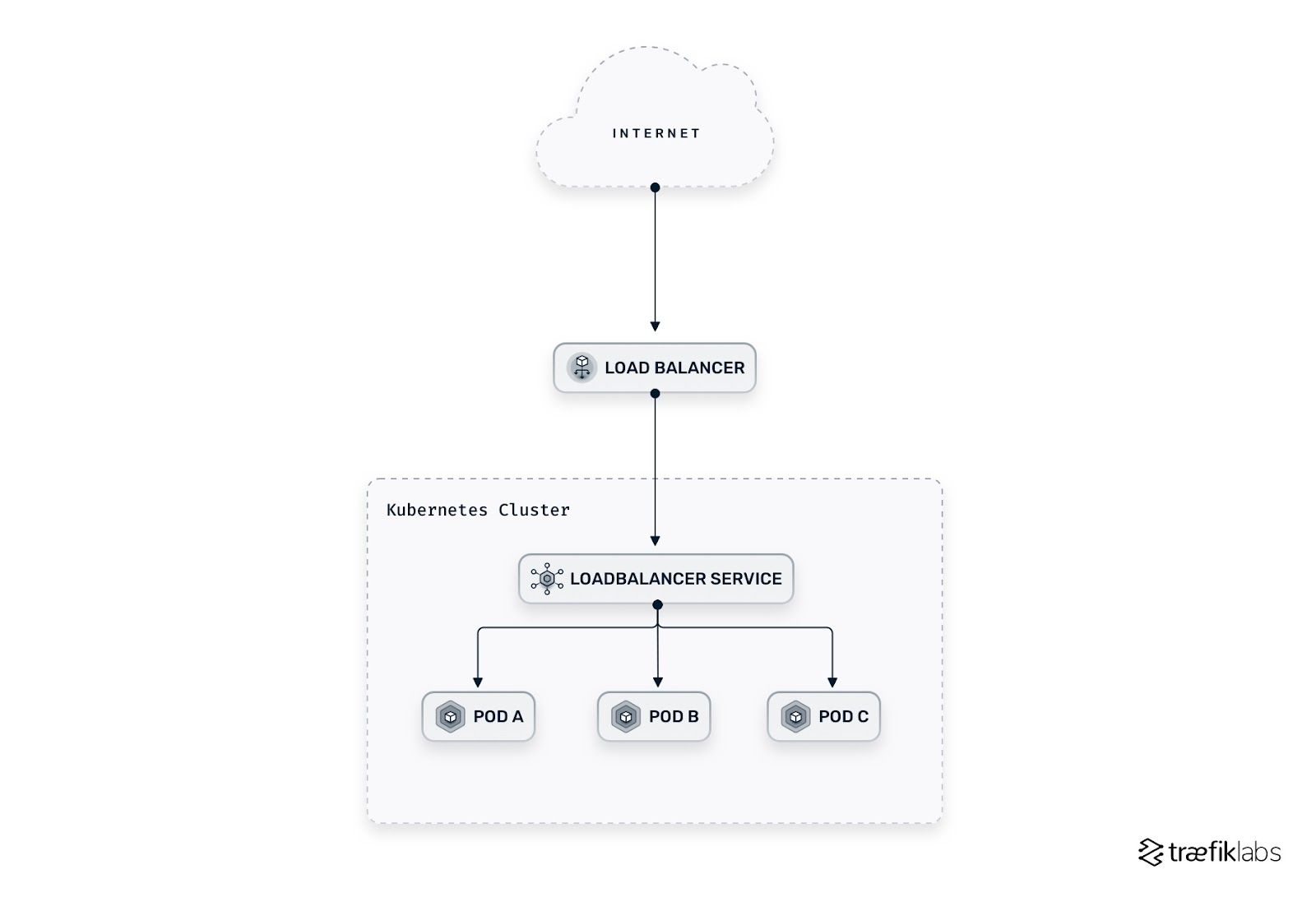

LoadBalancer Service

A LoadBalancer Service exposes the Service externally using a cloud provider's load balancer. Kubernetes automatically creates the underlying NodePort and ClusterIP Services, and the load balancer routes traffic to them. This option can be expensive because each Service gets its own external IP address and its own cloud load balancer. The exception is on-premises environments that use MetalLB or kube-vip, or an existing hardware load balancer (such as F5) integrated through a controller, where additional LoadBalancer Services do not add per-load-balancer cloud charges.
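Each LoadBalancer Service receives its own externally reachable address, which the load balancer integration records in the Service's `status.loadBalancer.ingress` field (a field name unrelated to the Ingress resource). The sketch below reads those addresses from Service objects represented as plain dictionaries; the Service names and the documentation-range IPs are hypothetical.

```python
from typing import Dict, List, Optional


def external_address(service: dict) -> Optional[str]:
    """External IP or hostname assigned to a LoadBalancer Service, or None
    if the Service is another type or provisioning is still pending."""
    if service.get("spec", {}).get("type") != "LoadBalancer":
        return None
    entries = service.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not entries:
        return None
    # Some providers report an IP address, others a DNS hostname.
    return entries[0].get("ip") or entries[0].get("hostname")


def external_addresses(services: List[dict]) -> Dict[str, Optional[str]]:
    """Map each LoadBalancer Service name to its own external address."""
    return {
        s["metadata"]["name"]: external_address(s)
        for s in services
        if s.get("spec", {}).get("type") == "LoadBalancer"
    }


services = [
    {"metadata": {"name": "web"}, "spec": {"type": "LoadBalancer"},
     "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}},
    {"metadata": {"name": "api"}, "spec": {"type": "LoadBalancer"},
     "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.11"}]}}},
    {"metadata": {"name": "db"}, "spec": {"type": "ClusterIP"}},
]
print(external_addresses(services))  # {'web': '203.0.113.10', 'api': '203.0.113.11'}
```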

Ingress and ingress controller

An Ingress is not a type of Service. It is a set of L7 routing rules served by an ingress controller, which is itself often exposed through a single LoadBalancer Service. Because many applications share that controller, this approach is less expensive than giving each Service its own cloud load balancer. Routing is expressed in one place and handled by one component, and only one IP address needs to be reachable from the internet. The ingress controller then routes traffic directed to that IP to the correct Service.
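To illustrate how a single entry point can serve several Services, the sketch below picks a backend from a route table keyed by host and path prefix, letting the longest matching prefix win. The table format and the `route` function are hypothetical; wildcard hosts and the `Exact` path type are not handled.

```python
from typing import Dict, List, Optional, Tuple

# (host, path prefix) -> backend Service; a hypothetical route table format.
RouteTable = Dict[Tuple[str, str], str]


def _segments(path: str) -> List[str]:
    return [s for s in path.split("/") if s]


def prefix_matches(prefix: str, path: str) -> bool:
    """Match on whole '/'-separated segments, in the spirit of the Ingress
    "Prefix" path type: /api matches /api/v1 but not /apix."""
    wanted = _segments(prefix)
    return _segments(path)[: len(wanted)] == wanted


def route(table: RouteTable, host: str, path: str) -> Optional[str]:
    """Choose the backend for a request that reached the shared ingress IP."""
    matches = [
        (prefix, backend)
        for (rule_host, prefix), backend in table.items()
        if rule_host == host and prefix_matches(prefix, path)
    ]
    if not matches:
        return None
    # The longest matching prefix wins.
    return max(matches, key=lambda m: len(_segments(m[0])))[1]


table = {
    ("shop.example.com", "/"): "web:80",
    ("shop.example.com", "/api"): "api:8080",
    ("blog.example.com", "/"): "blog:80",
}
print(route(table, "shop.example.com", "/api/orders"))  # api:8080
print(route(table, "blog.example.com", "/posts/1"))     # blog:80
```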

There are several reasons to prefer an Ingress and an ingress controller over LoadBalancer Services. Because an ingress controller serves many Ingress resources behind a common IP address, it is less expensive than giving each Service its own load balancer. Ultimately, Ingresses simplify exposing Services by expressing access as routing rules.
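The cost argument is simple arithmetic: without an Ingress, the number of cloud load balancers grows with the number of exposed Services, while with one it stays at a single load balancer in front of the controller. The sketch below uses a hypothetical `price_per_lb` figure, not a quote from any provider, and ignores the ingress controller's own compute cost.

```python
def monthly_lb_cost(num_services: int, price_per_lb: float, use_ingress: bool) -> float:
    """Cloud load balancer spend for exposing num_services HTTP Services.

    Without an Ingress, each LoadBalancer Service gets its own load balancer;
    with one, a single load balancer fronts the ingress controller.
    """
    load_balancers = min(num_services, 1) if use_ingress else num_services
    return load_balancers * price_per_lb


# price_per_lb=20.0 is a hypothetical figure.
print(monthly_lb_cost(10, 20.0, use_ingress=False))  # 200.0
print(monthly_lb_cost(10, 20.0, use_ingress=True))   # 20.0
```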

Ingress controllers can also provide features such as ACME (Automatic Certificate Management Environment, the protocol Let's Encrypt uses to issue certificates automatically), middleware, and load balancing. Some ingress controllers also come with features that improve security, such as letting you define the TLS settings for the connections they terminate. Many can also improve a platform's observability, for example by exposing metrics and access logs, which gives operators more insight and control.
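ACME and TLS management are too involved to sketch here, but the middleware idea is easy to illustrate: a middleware wraps the next handler and can modify the request on its way to the backend. The Python below is a generic sketch of that pattern with hypothetical `strip_prefix` and `add_header` functions and a minimal dictionary-based request; it is not the API of Traefik or any other ingress controller.

```python
from typing import Any, Callable, Dict

Request = Dict[str, Any]           # hypothetical: {"path": str, "headers": dict}
Handler = Callable[[Request], str]
Middleware = Callable[[Handler], Handler]


def strip_prefix(prefix: str) -> Middleware:
    """Remove a leading path prefix before the request reaches the backend."""
    def wrap(next_handler: Handler) -> Handler:
        def handle(req: Request) -> str:
            path = req["path"]
            if path == prefix or path.startswith(prefix + "/"):
                req = {**req, "path": path[len(prefix):] or "/"}
            return next_handler(req)
        return handle
    return wrap


def add_header(name: str, value: str) -> Middleware:
    """Add a request header before passing the request on."""
    def wrap(next_handler: Handler) -> Handler:
        def handle(req: Request) -> str:
            return next_handler({**req, "headers": {**req["headers"], name: value}})
        return handle
    return wrap


def chain(handler: Handler, *middlewares: Middleware) -> Handler:
    """Wrap handler so that the middlewares run in the order listed."""
    for middleware in reversed(middlewares):
        handler = middleware(handler)
    return handler


def backend(req: Request) -> str:
    # Stand-in for the upstream Service: echo what it received.
    return f"{req['path']} {req['headers'].get('X-Forwarded-Prefix', '-')}"


app = chain(backend, add_header("X-Forwarded-Prefix", "/api"), strip_prefix("/api"))
print(app({"path": "/api/orders", "headers": {}}))  # /orders /api
```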

What are the limitations of an ingress controller?

Now that we’ve discussed the benefits of ingress controllers, it’s prudent to also discuss their limitations.

Ingresses only cover L7 traffic, meaning HTTP and HTTPS. It is not possible to route raw TCP or UDP traffic using an Ingress, even if the ingress controller itself supports L4 traffic.

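Features that the Ingress specification does not cover are configured through annotations, and every ingress controller supports its own set of them. For example, Traefik users can add an annotation to attach a Middleware to an Ingress, even though the Ingress specification has no notion of middleware. Because these annotations are controller-specific, an Ingress that relies on them is no longer portable across ingress controllers.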
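One way to see why annotations hurt portability is to check which annotations a given controller would even look at. The sketch below sorts annotations by prefix; the two prefixes are those used by Traefik and the community ingress-nginx controller, while the `split_annotations` helper and the Middleware name `strip-api` are hypothetical, and matching by prefix alone is a simplification.

```python
from typing import Dict, Tuple

# Annotation prefixes used by two common controllers.
CONTROLLER_PREFIXES = {
    "traefik": "traefik.ingress.kubernetes.io/",
    "ingress-nginx": "nginx.ingress.kubernetes.io/",
}


def split_annotations(annotations: Dict[str, str],
                      controller: str) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Split an Ingress's annotations into the ones `controller` acts on
    (judged only by prefix) and the ones it does not act on."""
    prefix = CONTROLLER_PREFIXES[controller]
    used = {k: v for k, v in annotations.items() if k.startswith(prefix)}
    unused = {k: v for k, v in annotations.items() if not k.startswith(prefix)}
    return used, unused


annotations = {
    # Traefik: attach the Middleware "strip-api" from namespace "default".
    "traefik.ingress.kubernetes.io/router.middlewares": "default-strip-api@kubernetescrd",
    # ingress-nginx: rewrite the request path sent to the backend.
    "nginx.ingress.kubernetes.io/rewrite-target": "/",
}
used, unused = split_annotations(annotations, "traefik")
print(sorted(unused))  # ['nginx.ingress.kubernetes.io/rewrite-target']
```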

Ingresses are also namespaced: an Ingress in a Kubernetes Namespace can only reference Services in that same Namespace, so cross-namespace routing is not possible with Kubernetes Ingresses. To address this limitation, the Kubernetes project developed the Gateway API specification, whose routes can reference Services in other Namespaces when the target Namespace allows it.
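The restriction is visible in the data model itself: a v1 Ingress backend names a Service but has no field for its Namespace, so every backend resolves in the Ingress's own Namespace. The sketch below collects those `(namespace, service)` pairs; the helper and the `team-a` example are hypothetical. By contrast, Gateway API routes such as HTTPRoute accept a `namespace` in their `backendRefs`, with cross-namespace references authorized by a ReferenceGrant in the target Namespace.

```python
from typing import Set, Tuple


def backend_services(ingress: dict) -> Set[Tuple[str, str]]:
    """Return the (namespace, service) pairs a v1 Ingress routes to.

    Every backend resolves in the Ingress's own namespace, because the
    Ingress backend has no namespace field.
    """
    # Assumes "default" when the manifest omits the namespace.
    namespace = ingress["metadata"].get("namespace", "default")
    spec = ingress.get("spec", {})
    backends = [spec["defaultBackend"]] if "defaultBackend" in spec else []
    for rule in spec.get("rules", []):
        backends += [p["backend"] for p in rule.get("http", {}).get("paths", [])]
    # Skip non-Service (resource) backends.
    return {(namespace, b["service"]["name"]) for b in backends if "service" in b}


ing = {
    "metadata": {"name": "shop", "namespace": "team-a"},
    "spec": {"rules": [{"http": {"paths": [
        {"path": "/", "pathType": "Prefix",
         "backend": {"service": {"name": "web", "port": {"number": 80}}}},
    ]}}]},
}
print(backend_services(ing))  # {('team-a', 'web')}
```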

Summary

When used correctly, ingress controllers can dramatically simplify the operations of Kubernetes clusters while improving security, observability, performance, and resilience. They are a powerful tool in any technology stack, providing far more than the core functionality of bringing external traffic into a Kubernetes cluster.

Not all ingress controllers are created equal: each comes with its own benefits and ideal use cases, so your choice matters. When deciding which ingress controller to use, start with your key requirements.

If you manage low-volume production environments and do not plan to scale, you may not need more than the standard functions (load balancing, traffic control, traffic splitting, etc.). Tech bloat is real: it can degrade your application's performance and add management complexity. A simple ingress controller could be ideal for your use case.

If your application operates in a distributed, multi-cloud environment, you may need a performant networking solution. Cloud native networking solutions, such as Traefik Enterprise, can unite Ingress control with API management and service mesh.

Correctly identifying the challenges you are solving will help you make the correct choice of Kubernetes ingress controller.
