Blue-Green, Canary, and Other Kubernetes Deployment Strategies

Kubernetes continues to grow in adoption, and today’s applications are scaling rapidly. Rather than increasing the size of existing clusters, DevOps teams are creating more clusters across diverse, distributed applications. They are deploying constantly and aggressively, as the rate of software releases only accelerates.

Your chosen Deployment strategy will significantly affect the success of your Deployments. Before we compare the Kubernetes Deployment strategies out there, let’s cover some key terms.

What is a Kubernetes Deployment and Deployment Strategy?

A Kubernetes Deployment provides declarative updates for Pods and ReplicaSets: you describe the desired state, and the Deployment controller changes the actual state to match it at a controlled rate. Deployments are not to be confused with releases; the two are related but not identical. Deploying is the practice of installing a new version of software in an environment (be it bare metal, a VM, or a Kubernetes cluster), while a release describes the routing of requests to that new Deployment. A release is the moment a new feature actually reaches your customer base. You can run many Deployments side by side on a given Kubernetes cluster, but how you route incoming network traffic determines which of them your users actually reach.

A Kubernetes Deployment strategy describes how you publicly release new features or updates, including how you route users to your new release. Good Deployments take little time, are transparent and secure, and have no noticeable impact on end users. In practice, however, Deployments are often quite messy. Bad Deployments can introduce security vulnerabilities, latency, and outages for end users, and can even expire their sessions.

An organization's Deployment strategy dramatically affects how quickly it can release new software updates. There are three major types of Kubernetes Deployment strategies to choose between: blue-green, rolling, and canary.

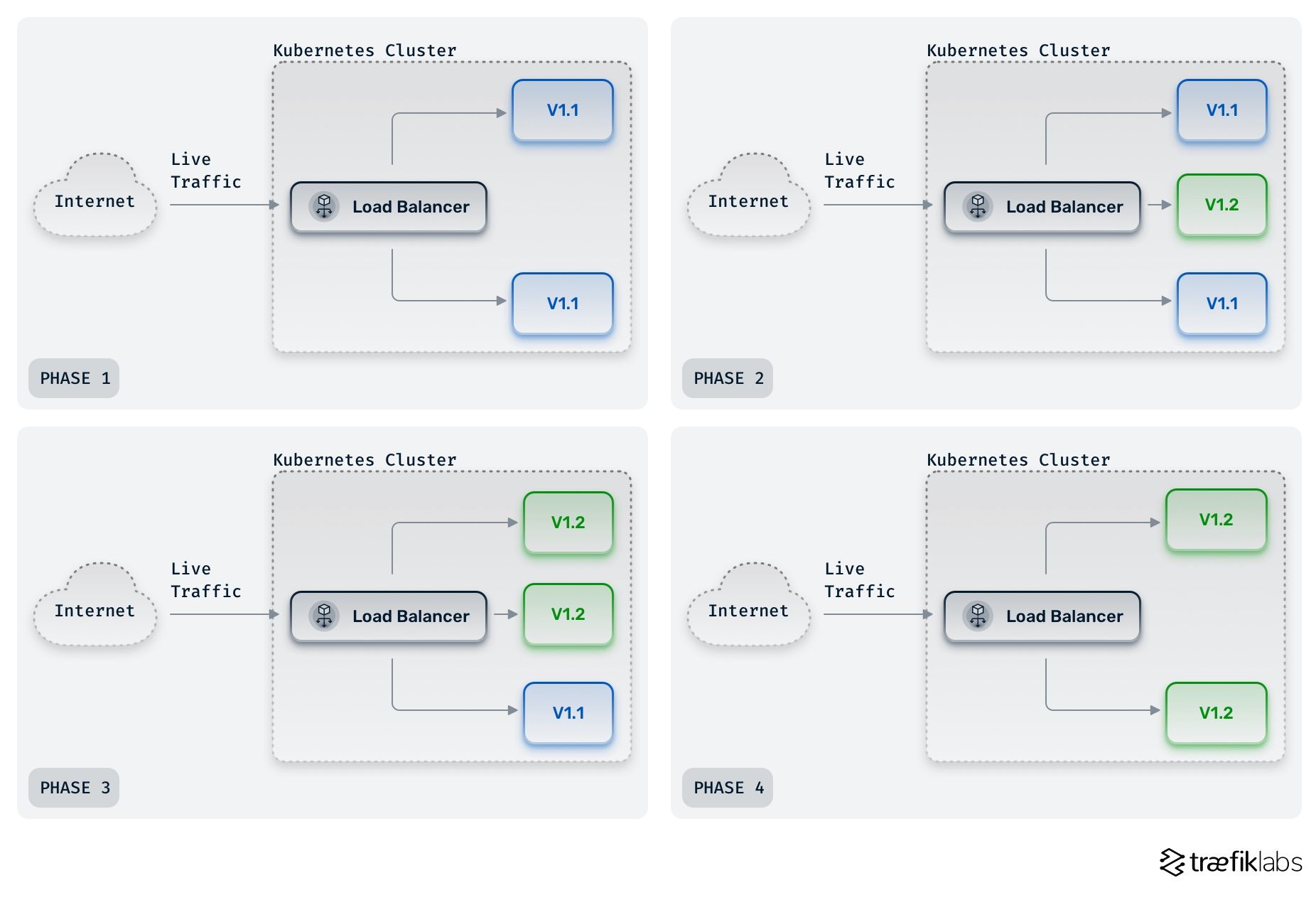

What is a blue-green Deployment?

Blue-green Deployments get their name from the two production environments they require: a blue environment running the current code and a green environment running the application with the updated code.

To release a new version of your application with this strategy, keep all live traffic on the blue environment while you deploy code to the green environment. Test the update in the green environment and, once you’re satisfied with the results, execute the actual release by switching all live traffic to the green environment.
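To make the switch concrete, here is a minimal Python sketch of the blue-green flow. The `BlueGreenRouter` class, its methods, and the `smoke_test` callback are hypothetical stand-ins: in a real cluster the "router" would be something like a Service selector or a proxy rule, and the tests would be real checks run against the green environment before the switch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Toy router (hypothetical) that sends 100% of live traffic to one environment."""

    environments: Dict[str, str] = field(default_factory=dict)  # color -> version
    live: str = "blue"

    def deploy(self, color: str, version: str) -> None:
        # Deploying installs a version; it does not route any traffic to it yet.
        self.environments[color] = version

    def idle(self) -> str:
        # The environment that is not currently serving users.
        return "green" if self.live == "blue" else "blue"

    def release(self, smoke_test: Callable[[str], bool]) -> bool:
        """Test the idle environment, then switch all traffic to it in one step."""
        candidate = self.idle()
        if not smoke_test(self.environments[candidate]):
            return False  # tests failed: users stay on the current environment
        self.live = candidate  # every user moves at the same moment
        return True

    def rollback(self) -> None:
        # The previous environment is still running, so rollback is the same switch in reverse.
        self.live = self.idle()

    def serve(self) -> str:
        return self.environments[self.live]


router = BlueGreenRouter()
router.deploy("blue", "v1")
router.deploy("green", "v2")
print(router.serve())  # v1
router.release(lambda version: True)  # stand-in for a real test suite
print(router.serve())  # v2
router.rollback()
print(router.serve())  # v1
```

Because the old environment keeps running after the switch, rolling back is just flipping `live` again, which is why the strategy is fast but also why it keeps two full environments around.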

Blue-green Deployments were much more difficult in the past, when bare metal was common and a second production environment meant provisioning a second set of physical servers. Today, Kubernetes makes it much easier to create new environments for your applications.

The main advantage of a blue-green Deployment is instant rollout and rollback. Because all traffic switches from one environment to the other at once, users never see a mix of old and new versions, so you avoid versioning issues. This Deployment strategy is fast and straightforward.

A disadvantage of this approach is cost. Because you must maintain two environments, each large enough to handle production traffic, your infrastructure costs roughly double.

The major disadvantage of the blue-green Deployment strategy is its inherent risk. Test environments and test traffic are often lighter and simpler than real production conditions, so even the most rigorous tests in the green environment can overlook problems that only surface once the release goes live. And because all users are switched at the same moment, any outage or issue affects every one of them.

Rollbacks are also disruptive: although the switch itself is quick, every user must be moved back to the blue environment at once. Blue-green Deployments are easy and fast, but they are also more expensive and riskier than the alternatives.

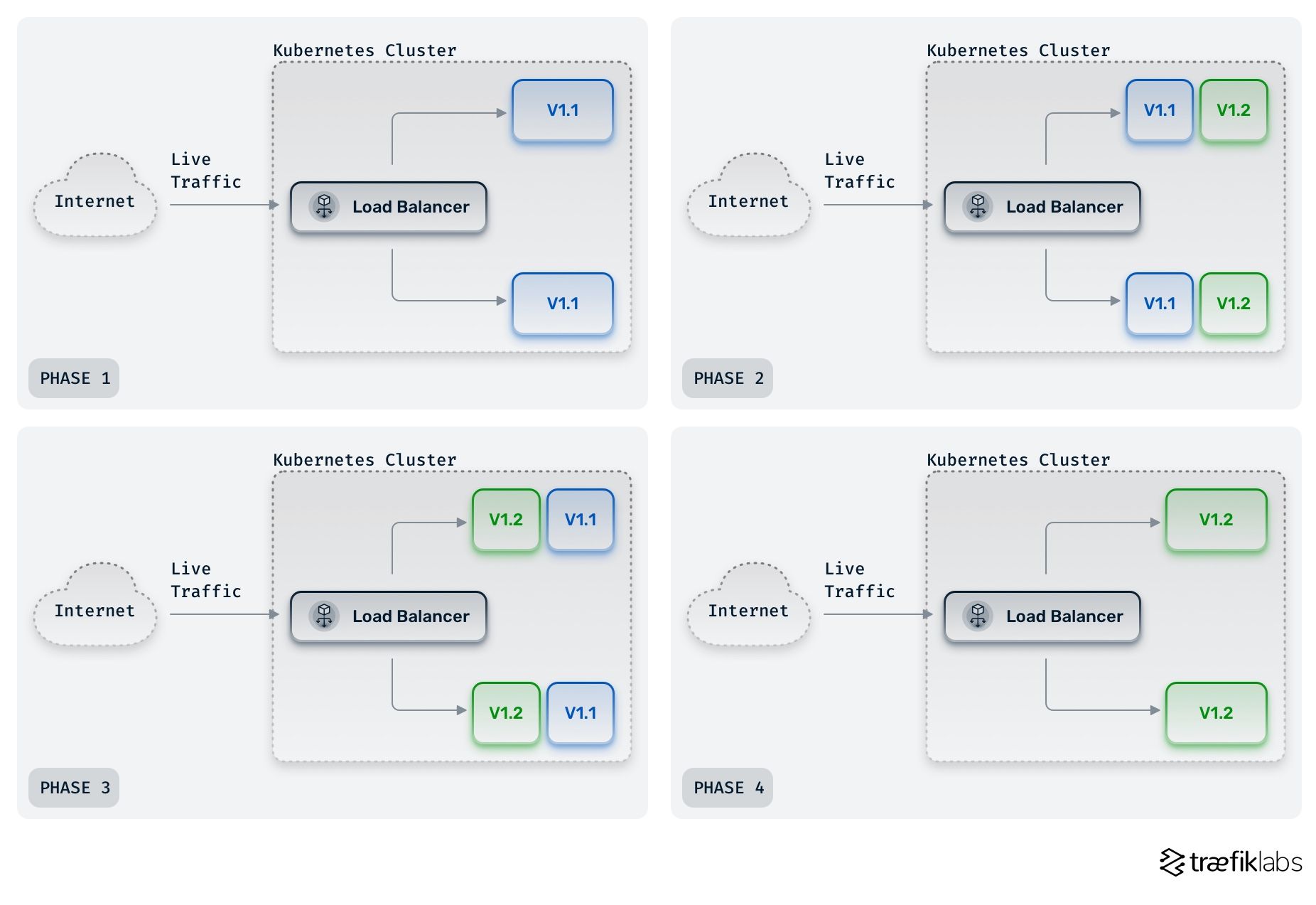

What is a rolling Deployment?

Rolling Deployments are the default in Kubernetes: you will use this strategy unless you change the strategy type in your Deployment resource (the other built-in option is Recreate). A rolling Deployment replaces the Pods running the old version a few at a time, so at first only a portion of users (10%, for example) reaches the new application. The team then observes the application to ensure that signals such as HTTP responses, error metrics, latency, and response time remain healthy.
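How fast a rolling update replaces Pods is governed by the Deployment's `spec.strategy.rollingUpdate.maxSurge` and `maxUnavailable` fields, which both default to 25%. As a rough sketch of how those settings bound each step, the function below converts them into Pod counts using the documented rounding rules (percentages for `maxSurge` round up, for `maxUnavailable` round down). The function and its return keys are our own illustration, not part of any Kubernetes client library; the zero/zero special case reflects our understanding of how the Deployment controller avoids a stalled rollout.

```python
from typing import Dict, Union


def resolve_rolling_update(
    replicas: int,
    max_surge: Union[int, str] = "25%",
    max_unavailable: Union[int, str] = "25%",
) -> Dict[str, int]:
    """Convert maxSurge/maxUnavailable into Pod-count bounds for a rolling update."""

    def to_pods(value: Union[int, str], round_up: bool) -> int:
        if isinstance(value, int):
            return value  # absolute numbers are used as-is
        percent = int(value.rstrip("%"))
        scaled = replicas * percent  # integer math avoids float rounding surprises
        return (scaled + 99) // 100 if round_up else scaled // 100

    surge = to_pods(max_surge, round_up=True)  # maxSurge rounds up
    unavailable = to_pods(max_unavailable, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # leave room for at least one Pod to be replaced
    return {
        "max_total_pods": replicas + surge,  # old + new Pods allowed at once
        "min_available_pods": replicas - unavailable,  # floor on available Pods
    }


print(resolve_rolling_update(10))  # {'max_total_pods': 13, 'min_available_pods': 8}
```

With 10 replicas and the defaults, the rollout may run up to 13 Pods at once while keeping at least 8 available, so each step shifts only a slice of the traffic to the new version.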

If any problem arises, the Deployment can be rolled back very quickly with only a few users affected. If your application behaves well, another set of users is moved to the new application and then another. Users are continually migrated to the new application in batches until all users are migrated.
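The batch-by-batch migration with rollback can be sketched in a few lines of Python. This toy model works on users rather than Pods, and `is_healthy` is a hypothetical callback standing in for whatever checks you rely on (HTTP responses, latency, and so on). A plain Kubernetes Deployment only waits for new Pods to become ready between steps; metric-based gating and automatic rollback like this come from your own process or additional tooling.

```python
from typing import Callable, List, Sequence


def rolling_release(
    users: Sequence[str],
    percent_steps: Sequence[int],
    is_healthy: Callable[[List[str]], bool],
) -> List[str]:
    """Move users to the new version in batches; return the users left on it.

    percent_steps are cumulative, e.g. (10, 50, 100). Batch membership here is
    simply list order, standing in for the arbitrary choice a rolling update makes.
    """
    on_new_version: List[str] = []
    for percent in percent_steps:
        count = len(users) * percent // 100
        on_new_version = list(users[:count])  # the next batch joins the new version
        if not is_healthy(on_new_version):
            return []  # roll back: every user returns to the old version
    return on_new_version


users = [f"user-{i}" for i in range(20)]
print(len(rolling_release(users, (10, 50, 100), lambda batch: True)))  # 20
```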

Rolling Deployments are a progressive strategy because users migrate gradually to the new version. They take more time than blue-green Deployments but are far safer. You can roll back releases quickly and without impacting as many users. Rolling Deployments are also less expensive than blue-green Deployments as you don’t have to maintain two production-ready environments of the same size at the same time.

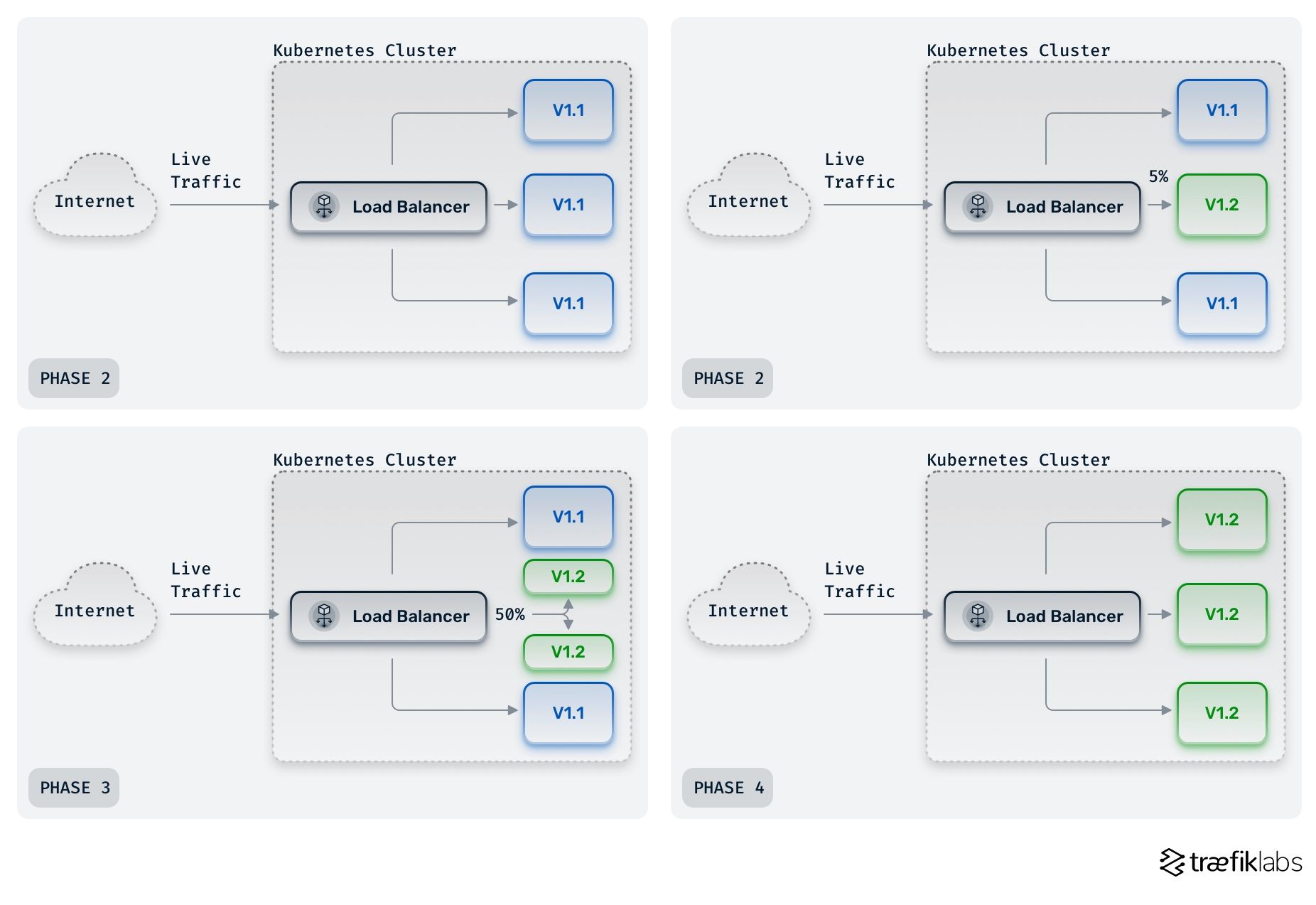

What is a canary Deployment?

Canary Deployments are similar to rolling Deployments, but they are more advanced. They are also progressive, as the release is delivered to users incrementally. However, canary Deployments let you target which users receive the new version of an application first. With rolling Deployments, which users reach the new version first is effectively random.

Canary Deployments give DevOps teams access to early feedback and bug identification. The targeted subset of users can spot weaknesses to fix before the release rolls out to other users. Because of this, canary Deployments give you more control over feature releases.

Canary Deployments are a strategic asset for a business, as they let it decide which segments of its customer base try out a new release first. For example, the new release might first roll out only to end users in Poland, or only to users who have already engaged with the software. It's up to the business to decide.
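Targeting boils down to a routing decision per user. In practice it usually lives in the proxy (for example, matching a header or cookie) or in a feature-flag system; the Python sketch below shows only the decision logic, with a hypothetical `User` type and rule functions mirroring the two examples above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class User:
    user_id: str
    country: str  # ISO country code, e.g. "PL"
    has_engaged: bool  # hypothetical flag, e.g. set by product analytics


def in_poland(user: User) -> bool:
    return user.country == "PL"


def is_engaged(user: User) -> bool:
    return user.has_engaged


def choose_version(user: User, is_target: Callable[[User], bool]) -> str:
    """Route users the business selected to the canary; everyone else stays on stable."""
    return "canary" if is_target(user) else "stable"


alice = User("alice", "PL", has_engaged=False)
bob = User("bob", "DE", has_engaged=True)
print(choose_version(alice, in_poland), choose_version(bob, in_poland))  # canary stable
print(choose_version(bob, is_engaged))  # canary
```

Passing the rule in as a function keeps the routing code unchanged when the business picks a different segment.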

This Deployment strategy is named after the canaries that miners brought into coal mines to detect carbon monoxide. Because canaries have sensitive lungs, they would show distress or die when carbon monoxide levels got too high, warning the miners in time to escape. Similarly, with canary Deployments, the targeted subset of users alerts the DevOps team to problems in the application before they reach everyone.

Canary Deployments offer the most control of the three strategies and suit a wide range of applications. They are also the most complex. Kubernetes beginners may struggle to implement them well, but DevOps practitioners who take the time to master canary Deployments will deploy more efficiently while giving the business a strategic advantage.

Best practices for canary Deployments

Deployment strategies don’t exist in a Kubernetes vacuum, and a solid cloud native technology stack is crucial for the success of any Deployment.

Performant monitoring and observability tools let you measure how your application behaves and how it affects end users. Prometheus and Grafana are among the most commonly used open source tools for monitoring and observability. They show you whether metrics such as response times stay within the defined thresholds, so you can confidently move on to the next subset of users.
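The "move on or stop" decision is a simple comparison against thresholds. In a real setup the latency samples and error counts would come from Prometheus queries (and be charted in Grafana); in this Python sketch they are plain values, the nearest-rank percentile is one common definition among several, and the threshold parameters `max_p95_ms` and `max_error_rate` are hypothetical numbers your team would set.

```python
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile (pct between 1 and 100)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct/100 * n) using integers
    return ordered[rank - 1]


def may_promote(
    latencies_ms: Sequence[float],
    errors: int,
    requests: int,
    max_p95_ms: float,
    max_error_rate: float,
) -> bool:
    """Return True if the current subset of users stayed within both thresholds."""
    if requests <= 0:
        raise ValueError("no traffic observed yet")
    error_rate = errors / requests
    return percentile(latencies_ms, 95) <= max_p95_ms and error_rate <= max_error_rate


latencies = list(range(1, 101))  # 1 ms .. 100 ms
print(percentile(latencies, 95))  # 95
print(may_promote(latencies, errors=2, requests=1000, max_p95_ms=200, max_error_rate=0.01))  # True
```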

An organization's Site Reliability Engineering (SRE) team typically defines the Deployment strategies that DevOps teams use. The SRE team also sets the metrics, thresholds, and service level objectives (SLOs) a Deployment must meet to be accepted.

As Deployment strategies describe how you route users to a new version of the application, your networking tools will also impact the success of your Deployment strategy.

Traefik Proxy and Traefik Enterprise can help you simplify and execute blue-green, rolling, and even canary Deployments daily. Using a Traefik Service (an abstraction layer running on top of a Kubernetes Service), you can create Version A and Version B of an application. With weighted round robin, you can then manually assign weights that send a chosen share of traffic to Version A and the rest to Version B, splitting traffic between two Deployments you've created on a Kubernetes cluster.
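Weighted round robin is what turns a pair of weights into a traffic split. The Python sketch below uses the "smooth" variant of the algorithm popularized by NGINX; it illustrates the idea and is not Traefik's own implementation, and the service names `app-v1` and `app-v2` are hypothetical. Note that it splits requests by weight; pinning specific users to one version needs sticky sessions or a matching rule on top.

```python
from itertools import islice
from typing import Dict, Iterator


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield backend names in proportion to their weights (smooth weighted round robin)."""
    if not weights or any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be a non-empty mapping of positive integers")
    total = sum(weights.values())

    def picks() -> Iterator[str]:
        current = {name: 0 for name in weights}
        while True:
            for name, weight in weights.items():
                current[name] += weight  # every backend earns credit each round
            chosen = max(current, key=current.get)  # first listed backend wins ties
            current[chosen] -= total  # the winner pays back the total weight
            yield chosen

    return picks()


# Hypothetical service names: 75% of requests to Version A, 25% to Version B.
print(list(islice(weighted_round_robin({"app-v1": 3, "app-v2": 1}), 4)))
# ['app-v1', 'app-v1', 'app-v2', 'app-v1']
```

The smooth variant interleaves picks instead of sending long runs to the heavier backend, so the smaller version receives its share evenly over time.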

Flagger is an open source tool, originally developed by Weaveworks, that you can use to automate this process. It integrates with Traefik and Prometheus: it checks metrics during the rollout and automatically adjusts the traffic weights based on the results.
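Automating the weights turns the manual split into a loop: raise the canary's weight step by step, check metrics at each step, and roll back after too many failed checks. The Python model below borrows the names of Flagger's analysis settings (`stepWeight`, `maxWeight`, `threshold`) as snake_case parameters, but it is a simplified illustration, not Flagger's actual controller logic; `check_metrics` is a hypothetical stand-in for a Prometheus query.

```python
from typing import Callable, List, Tuple


def progressive_delivery(
    check_metrics: Callable[[int], bool],
    step_weight: int = 10,
    max_weight: int = 50,
    threshold: int = 2,
) -> Tuple[str, List[int]]:
    """Simplified automated canary loop; returns the outcome and the weights applied.

    check_metrics(weight) returns True when the canary looks healthy while
    receiving `weight` percent of the traffic.
    """
    weight, failures, history = 0, 0, []
    while True:
        if weight > 0 and not check_metrics(weight):
            failures += 1
            if failures >= threshold:
                history.append(0)  # send all traffic back to the stable version
                return "rolled back", history
            continue  # re-check at the same weight without advancing
        if weight >= max_weight:
            return "promoted", history  # canary passed every step
        weight = min(weight + step_weight, max_weight)
        history.append(weight)


print(progressive_delivery(lambda weight: True))  # ('promoted', [10, 20, 30, 40, 50])
print(progressive_delivery(lambda weight: weight < 30))  # ('rolled back', [10, 20, 30, 0])
```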

Your Deployment strategy is of crucial importance, as it defines the risk, efficiency, speed, and overall success of your Deployment. Whether you opt for blue-green, rolling, or canary Deployments, a cloud native proxy and load balancer like Traefik Proxy can help. For advanced Deployment strategies, a unified cloud native networking solution like Traefik Enterprise that brings API management, ingress control, and service mesh into one simple control plane will help you bring your Deployment strategy to the next level.