K3s Explained: What is it and How Is It Different From Stock Kubernetes (K8s)?

Businesses around the world are driven by the demand for scalable and reliable services. Kubernetes grew out of Google’s experience with Borg, a cluster manager the company used internally for years before releasing Kubernetes to the public as an open source project. With Google’s near-mythical history of running massive data centers for fast responses to search queries, it took almost no time for Kubernetes to establish itself as the solution that everyone wanted to use.

Kubernetes was designed to accommodate large configurations and make them scalable and resilient. But stock Kubernetes is heavy, complex, difficult to manage, and comes with a steep learning curve. Almost immediately after its release, people began changing their applications to fit the constraints of Kubernetes instead of using Kubernetes as a tool with a specific purpose.

Unused components result in longer installation times, a larger attack surface, and additional complexity. Best practices for building containers state that a container should only contain the resources that it needs to perform its function, so why should the container orchestrator be any different? By getting rid of unnecessary components, K3s solves many of the issues developers face with stock Kubernetes.

What is K3s?

K3s is a lightweight Kubernetes distribution created by Rancher Labs, and it is fully certified by the Cloud Native Computing Foundation (CNCF). K3s supports highly available configurations and is production-ready. It has a very small binary and very low resource requirements.

In simple terms, K3s is Kubernetes with bloat stripped out and a different backing datastore. That said, it is important to note that K3s is not a fork, as it doesn’t change any of the core Kubernetes functionalities and remains close to stock Kubernetes.

The birth of K3s

While building Rio (no longer active), Darren Shepherd from Rancher Labs (now part of SUSE) found himself frustrated by how long it took to spin up a new Kubernetes cluster for every test run. He knew that if he could bring clusters online faster, he could code faster, and if he could code faster, he could ship features faster.

Rancher already had the Rancher Kubernetes Engine (RKE), so Darren was intimately familiar with the Kubernetes source code. He started picking it apart, removing all of the extraneous content that wasn’t necessary to run workloads in his environment. This included all out-of-tree and alpha resources, storage drivers, and the requirement for etcd as the backend datastore.

He created a single binary solution that unpacked to include containerd, the networking stack, the control plane (server) and worker (agent) components, SQLite instead of etcd, a service load balancer, a storage class for local storage, and manifests that pulled down and installed other core components like CoreDNS and Traefik Proxy as the ingress controller.

The result was a Kubernetes distribution that clocked in at under 50MB and that ran all of Kubernetes in under 512MB of RAM. This enabled Kubernetes to run in resource-constrained environments like IoT and the edge. For the first time, someone could run Kubernetes on a system with only one GB of RAM and still have memory available for the workloads.

Rancher K3s was born, and at the time, no one had any idea that it would be so popular.

K3s and the CNCF

The Cloud Native Computing Foundation (CNCF) is the vendor-neutral home of Kubernetes and many other open source projects. It certifies Kubernetes distributions as conformant, which means that a workload that runs on one certified distribution should run on any other certified distribution.

Certification by the CNCF opened the door for K3s installations in environments like wind farms, satellites, airplanes, vehicles, and boats. Interest picked up from governments who wanted to use K3s in air-gapped environments and places that would benefit from its minimal attack surface.

Realizing that what had started as an internal project with a limited scope was now in the hands of the community, Rancher donated K3s to the CNCF. Rancher K3s shortened its name to K3s, and it is the only Kubernetes distribution in the CNCF landscape that is owned by the CNCF.

What does the name K3s mean?

K8s comes from “Kubernetes,” where there are eight characters between the K and the S. This style of abbreviation, known as a numeronym, was popularized by developers working on internationalization, a word they simply got tired of typing. Internationalization became “i18n,” and that’s how Kubernetes became “K8s.”

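K3s was meant to be half the size of Kubernetes in terms of memory footprint. Kubernetes is a 10-letter word, so something half its size would be a five-letter word, and if you follow the same shortening formula, that becomes “K3s.” Since software is full of recursive humor, that seemed like a great name for a solution that’s simply an optimized and efficient version of its parent. There is no long form of K3s, nor is there an official pronunciation.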
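The shortening rule is mechanical enough to express in a few lines. The following is a minimal Python sketch. The `numeronym` function is our own illustration, not part of any K3s or Kubernetes tooling: it keeps the first and last characters and replaces everything between them with a count. Because K3s has no long form, the last line uses a placeholder five-letter string.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word by keeping its first and last characters and
    replacing the characters between them with their count."""
    if len(word) <= 3:
        # Short words gain nothing from abbreviation, so return them unchanged.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("internationalization"))  # i18n
print(numeronym("Kubernetes"))            # K8s

# Placeholder for "a five-letter word starting with K and ending with s".
print(numeronym("K" + "x" * 3 + "s"))     # K3s
```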

The advantages of K3s

K3s is a single binary that is easy to install and configure, and addresses a number of pain points introduced in stock Kubernetes.

TL;DR

- Small in size: K3s is less than 100MB, and this is possibly its biggest advantage.

- Lightweight: the single binary containing the non-containerized components is much smaller than the equivalent K8s components.

- Fast deployment: you can install and deploy K3s with a single command, and it can take less than 30 seconds to do so.

- Simplified: everything ships in one self-contained binary package.

- CI-friendly: clusters come up quickly enough to be created and torn down inside the automated Continuous Integration pipelines that integrate multiple code contributions into a single project.

- Smaller attack surface: thanks to its small size and reduced number of dependencies.

- Batteries included: CRI, CNI, service load balancer, and ingress controller are included.

- Easy to update: thanks to its reduced dependencies.

- Easy to deploy remotely: it can be bootstrapped with manifests that are installed after K3s comes online.

- Great for resource-constrained environments: K3s is the better choice for IoT and edge computing.

To better understand how all this is achieved, let’s take a look at the main characteristics of K3s.

A single binary with batteries included

K3s is a single binary that is easy to install and configure. The binary weighs in at between 50 and 100MB (depending on the release), and it unpacks to include all of the necessary components for running Kubernetes on control plane and worker nodes. This includes containerd as the CRI, Flannel for the CNI, SQLite for the datastore, and manifests to install critical resources like CoreDNS and Traefik Proxy as the ingress controller. It contains a service load balancer that connects Kubernetes Services to the host IP, making it suitable for single-node clusters.

Control plane nodes run all of Kubernetes in under 512MB of RAM, and worker nodes run their components in under 50MB of RAM.
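To put those figures in context, here is a rough back-of-the-envelope calculation in Python. It uses only the upper bounds quoted above (512MB for a control plane node, 50MB for a worker) and treats them as fixed costs. Real usage varies with the release, the number of pods, and the enabled components, and the operating system needs memory too, so the results are illustrative rather than measured. The `headroom_mb` helper and the 1GB device are hypothetical.

```python
# Upper bounds quoted in this article; actual usage varies by release and workload.
K3S_FOOTPRINT_MB = {"server": 512, "agent": 50}


def headroom_mb(device_ram_mb: int, role: str) -> int:
    """Return the RAM left for workloads after the quoted K3s footprint.

    Ignores the OS and other system processes, so the result is an
    optimistic upper bound rather than a guarantee.
    """
    remaining = device_ram_mb - K3S_FOOTPRINT_MB[role]
    if remaining < 0:
        raise ValueError(f"{device_ram_mb}MB is below the {role} footprint")
    return remaining


# A hypothetical single-board computer with 1GB (1024MB) of RAM.
print(headroom_mb(1024, "server"))  # 512
print(headroom_mb(1024, "agent"))   # 974
```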

Single-process simplicity

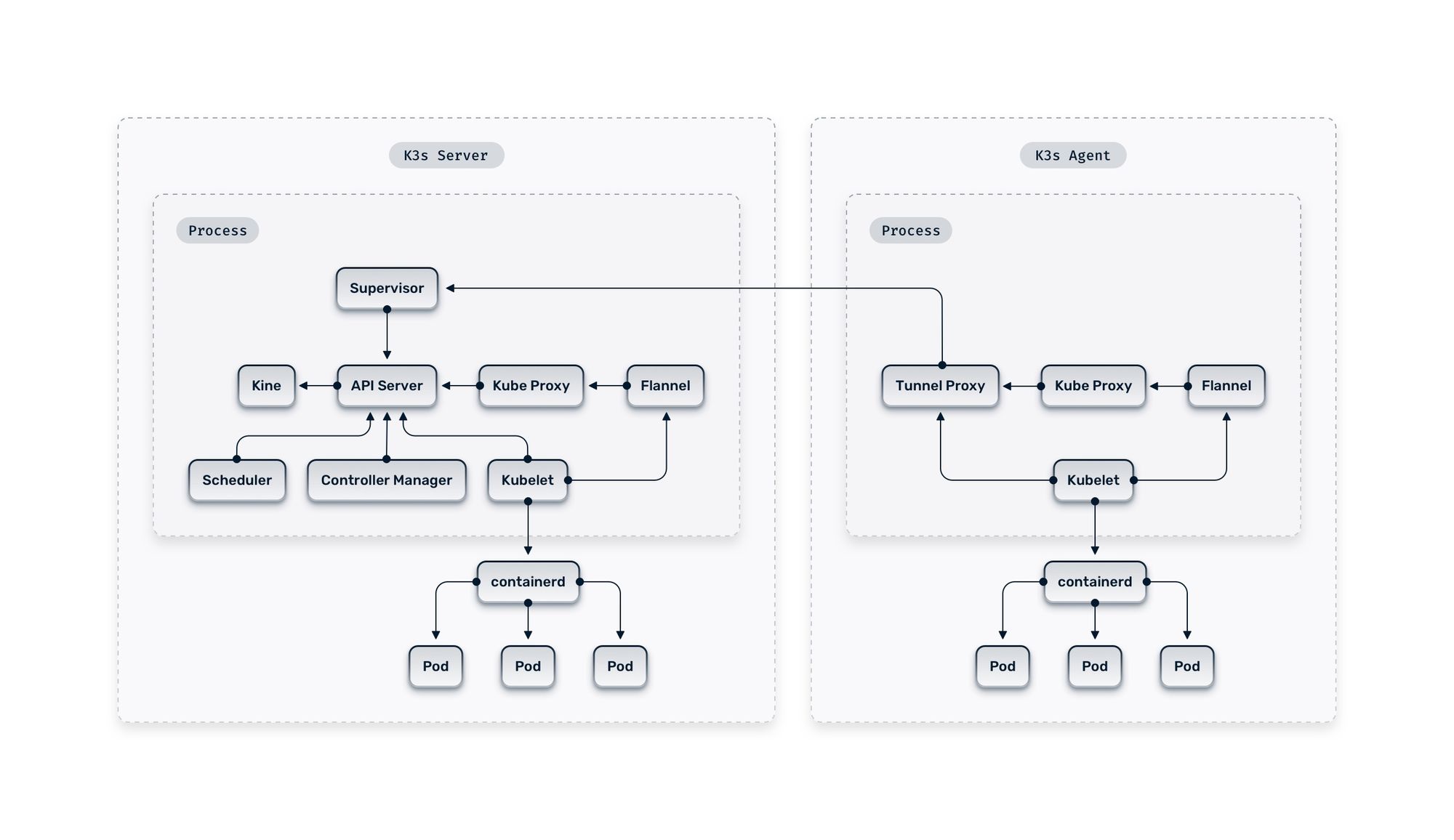

Unlike traditional Kubernetes, which runs its components in different processes, K3s runs the control plane, kubelet, and kube-proxy in a single Server or Agent process, with containerd handling the container lifecycle functions.

Flexibility

K3s is configured at install time via command-line arguments or environment variables. The same binary can become a control plane node or join an existing cluster as a worker. For environments with sufficient resources, it can replace SQLite with an embedded etcd cluster, or it can use an external etcd cluster or an RDBMS solution like MySQL, MariaDB, or Postgres.
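As a rough illustration of how one binary covers these roles, the Python sketch below only assembles command lines; it does not run them. The flags it uses (`--cluster-init` for embedded etcd, `--datastore-endpoint` for an external datastore, and `--server`/`--token` for joining an agent) are K3s options, but the helper functions, the server address, the token, and the database URL are hypothetical placeholders. Check the K3s documentation for your release before relying on them.

```python
from typing import List, Optional

# Datastore choices this sketch understands (the labels are our own).
DATASTORES = ("sqlite", "embedded-etcd", "external")


def server_args(datastore: str = "sqlite", endpoint: Optional[str] = None) -> List[str]:
    """Assemble the command line for a K3s control plane (server) node."""
    if datastore not in DATASTORES:
        raise ValueError(f"unknown datastore: {datastore!r}")
    args = ["k3s", "server"]  # SQLite needs no extra flag; it is the default.
    if datastore == "embedded-etcd":
        # The first server initializes a new embedded etcd cluster.
        args.append("--cluster-init")
    elif datastore == "external":
        if not endpoint:
            raise ValueError("an external datastore needs a connection string")
        # For example, a MySQL, MariaDB, Postgres, or etcd endpoint.
        args.append(f"--datastore-endpoint={endpoint}")
    return args


def agent_args(server_url: str, token: str) -> List[str]:
    """Assemble the command line for a worker (agent) joining an existing server."""
    return ["k3s", "agent", "--server", server_url, "--token", token]


print(server_args())                 # ['k3s', 'server']
print(server_args("embedded-etcd"))  # ['k3s', 'server', '--cluster-init']
print(server_args("external", "postgres://k3s:secret@db.example.internal:5432/k3s"))
print(agent_args("https://10.0.0.10:6443", "example-token"))
```

Keeping the datastore choice in one place mirrors the point of this section: the binary is the same everywhere, and only the arguments decide what the node becomes.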

K3s separates the runtime from the workloads, so the entire K3s subsystem can be stopped and started without affecting running operations. This makes it easy to upgrade K3s by replacing the binary and restarting the process or to reconfigure it by altering the flags in the startup file and restarting it.

Although K3s ships with containerd, it can forgo that installation and use an existing Docker installation instead. All of the embedded K3s components can be switched off, giving the user the flexibility to install their own ingress controller, DNS server, and CNI.
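For example, a node that will run its own ingress controller, DNS server, and CNI could be configured with a file like the one rendered below. This Python sketch only produces text, and the `k3s_config` helper is hypothetical. The keys mirror the K3s command-line flags (`--disable`, `--flannel-backend`, `--docker`), and K3s reads such a file from `/etc/rancher/k3s/config.yaml` by default. The set of component names is the one this sketch accepts; verify the full list and the Docker option against the documentation for your K3s version.

```python
from typing import Iterable

# Packaged components this sketch accepts for --disable; check your
# release's documentation for the authoritative list.
BUNDLED_COMPONENTS = {"coredns", "servicelb", "traefik", "local-storage", "metrics-server"}


def k3s_config(disable: Iterable[str] = (), own_cni: bool = False,
               use_docker: bool = False) -> str:
    """Render a minimal K3s config.yaml; keys mirror the CLI flag names."""
    components = list(disable)
    unknown = set(components) - BUNDLED_COMPONENTS
    if unknown:
        raise ValueError(f"not a packaged component: {sorted(unknown)}")
    lines = []
    if components:
        lines.append("disable:")
        lines.extend(f"  - {name}" for name in components)
    if own_cni:
        # Skip Flannel so that a different CNI can be installed instead.
        lines.append("flannel-backend: none")
    if use_docker:
        # Use an existing Docker installation instead of the bundled containerd.
        lines.append("docker: true")
    return "\n".join(lines) + "\n"


print(k3s_config(disable=["traefik", "coredns"], own_cni=True), end="")
# disable:
#   - traefik
#   - coredns
# flannel-backend: none
```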

Delivers on the ubiquity promise of Kubernetes

K3s is so small and runs in so many places that organizations can now run K3s from end to end. Developers can use K3s directly, or through an embedded solution like K3d or Rancher Desktop, without needing to allocate multiple cores and gigabytes of RAM on their local workstations.

CI/CD systems can spin up K3s clusters inside of Docker with K3d and use them for testing applications before approving them for production. Production environments can run full K3s clusters in an HA configuration, with multiple, isolated control and data plane nodes, and a pool of worker nodes to deliver the application to the users.

The consistency of the environments helps ensure that what the developer creates will run the same in production, bringing the pledge of consistent container operation up to the clusters themselves.
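The CI workflow described in this section, where a throwaway K3s cluster is created with K3d, used for tests, and then removed, can be sketched in Python as a context manager. This is a minimal sketch, assuming the `k3d` CLI (v5) and Docker are installed on the CI runner. `k3d cluster create` with `--agents` and `--wait`, and `k3d cluster delete`, are k3d commands; the `ephemeral_cluster` helper, its pluggable runner, and the cluster name are hypothetical.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List

Runner = Callable[[List[str]], None]


def run(cmd: List[str]) -> None:
    """Run a command, raising CalledProcessError if it exits non-zero."""
    subprocess.run(cmd, check=True)


@contextmanager
def ephemeral_cluster(name: str, agents: int = 1, runner: Runner = run) -> Iterator[str]:
    """Create a throwaway K3s cluster with k3d and always delete it afterwards."""
    runner(["k3d", "cluster", "create", name, "--agents", str(agents), "--wait"])
    try:
        yield name
    finally:
        # Clean up even if the tests inside the block fail.
        runner(["k3d", "cluster", "delete", name])
```

In a pipeline, the test steps would run inside `with ephemeral_cluster("ci-test"):`. Passing a different `runner` makes the helper easy to check without Docker, and the `finally` clause keeps failed runs from leaking clusters on shared CI hosts.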

Faster than K8s

In a world where installing stock Kubernetes can take 10 minutes or more, K3s can install on any Linux system and deliver a working cluster in under a minute. Its lightweight architecture also leaves more of the host’s CPU and memory available for the workloads that it runs.

Runs in places that K8s cannot

The tiny footprint of K3s makes it possible to orchestrate and run container workloads in places that Kubernetes could never reach before. Many of these locations use single-node clusters on hardened equipment designed to operate in harsh environments with limited or intermittent connectivity.

K3s includes a Helm controller that installs Helm charts declared in a HelmChart manifest. Any Kubernetes manifest can be placed in a directory on the control plane node (by default, /var/lib/rancher/k3s/server/manifests) before or after installation, and K3s will install those resources into the cluster. The combination of these features makes it possible to bootstrap clusters so that they come up with every application they need, ready to go from the moment of installation with no external intervention.
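As a sketch of that bootstrapping pattern, the Python below renders a HelmChart manifest and can write it into the auto-deploy directory. The `helm.cattle.io/v1` API group and the `repo`, `chart`, and `targetNamespace` fields belong to the K3s Helm controller; the helper functions, the chart name, and the repository URL are hypothetical, and writing to the default directory on a real node requires root.

```python
from pathlib import Path

# Directory that K3s watches on server nodes for manifests to apply automatically.
DEFAULT_MANIFESTS_DIR = Path("/var/lib/rancher/k3s/server/manifests")

HELMCHART_TEMPLATE = """\
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: {name}
  namespace: kube-system
spec:
  repo: {repo}
  chart: {chart}
  targetNamespace: {target_namespace}
"""


def render_helmchart(name: str, repo: str, chart: str, target_namespace: str) -> str:
    """Return a HelmChart manifest asking the K3s Helm controller to install a chart."""
    return HELMCHART_TEMPLATE.format(
        name=name, repo=repo, chart=chart, target_namespace=target_namespace)


def write_helmchart(manifest: str, name: str,
                    manifests_dir: Path = DEFAULT_MANIFESTS_DIR) -> Path:
    """Drop the manifest into the auto-deploy directory and return its path."""
    manifests_dir.mkdir(parents=True, exist_ok=True)
    path = manifests_dir / f"{name}.yaml"
    path.write_text(manifest)
    return path


# Hypothetical chart and repository, used only for illustration.
print(render_helmchart("my-app", "https://charts.example.com", "my-app", "default"))
```

Because the file can be placed before K3s is installed, the same image or provisioning script can ship the manifest alongside the binary, and the application appears once the cluster comes online.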

K3s has been used successfully in satellites, airplanes, submarines, vehicles, wind farms, retail locations, smart cities, and other places where running Kubernetes wouldn’t normally be possible.

K3s vs. K8s

“K3s vs. K8s” is not an entirely valid comparison. K3s is a Kubernetes distribution, like RKE. The real difference between K3s and stock Kubernetes is that K3s was designed to have a smaller memory footprint and special characteristics that suit certain environments, like edge computing and IoT.

So the question is not what the differences between K3s and K8s are, but rather when, and for which environment, you should choose one over the other.

Note: In this context, stock means unchanged. K3s has changed Kubernetes, so while it's still 100% upstream Kubernetes, it isn't stock Kubernetes. Upstream and stock are often used interchangeably, but upstream implies refined, while stock implies the bare minimum, with no change that would improve the environment in which it runs.

When should you choose K3s?

The decision to use K3s over K8s will come down to the requirements of the project and the resources that you have available. If you want to run Kubernetes on Arm hardware like a Raspberry Pi, then K3s will give you the complete functionality of Kubernetes while leaving more of the CPU and RAM available for your workloads.

On the other hand, if you want to run Kubernetes on a cloud instance with 24 CPU cores and 128GB of RAM, then K3s offers no real benefit over a Kubernetes distribution like RKE or RKE2.

If you run Kubernetes on premises and don’t need all of the cloud provider cruft, then K3s is an excellent solution. You can use it with an external RDBMS or an embedded or external etcd cluster, and it can perform faster than a stock distribution of Kubernetes in the same environment.

If you spin Kubernetes clusters up and down on a regular basis, for cloud bursting, running batch jobs, or continuous integration testing, then you’ll appreciate how quickly a K3s cluster comes online.

Conclusion

K3s created a stir when it came into the world, and it continues to meet the need for reliable Kubernetes in resource-constrained environments.

It’s neither a lesser version nor a fork of Kubernetes. It’s 100% upstream Kubernetes, optimized for a specific use case.

As the only Kubernetes distribution owned by the CNCF and the most popular lightweight Kubernetes distribution, it’s the right choice for the environments in which it excels.
